Bibliometrics is a branch of library and information science that deals with the quantitative analysis of scientific publications. It studies publication, citation, and authorship patterns in order to provide a better understanding of the scientific literature. Bibliometrics has become an important tool for evaluating research output and measuring the impact of research, and its practices are relevant to researchers, librarians, and other stakeholders in the scientific community. This article provides an overview of bibliometric practices and activities.

Bibliometric Practices and Activities:

Bibliometric practices and activities can be broadly classified into three categories: bibliometric analysis, bibliometric indicators, and bibliometric tools.

- Bibliometric Analysis: Bibliometric analysis involves the use of quantitative techniques to analyze the characteristics of scientific publications. The most commonly used techniques are citation analysis, co-citation analysis, and bibliographic coupling analysis.

Citation analysis involves the counting and analysis of citations that a publication receives from other publications. This technique is used to estimate the impact of a publication and the influence of an author or a research group. The number of citations received by a publication is commonly treated as an indicator of its influence and, more contentiously, of its quality.

Co-citation analysis involves the identification of publications that are cited together by other publications. This technique is used to identify the intellectual structure of a field and the relationships between publications.
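As a rough illustration, co-citation counting can be sketched in a few lines of Python. The paper labels and reference lists below are hypothetical toy data, and the function name `cocitation_counts` is chosen only for this example; real analyses would draw reference lists from a bibliographic database.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how often each pair of works is cited together.

    reference_lists maps a citing paper to the list of works it cites.
    """
    counts = Counter()
    for refs in reference_lists.values():
        # De-duplicate and sort so each pair has one canonical order.
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


# Hypothetical toy data: three citing papers and the works they cite.
citing_papers = {
    "P1": ["A", "B", "C"],
    "P2": ["A", "B"],
    "P3": ["B", "C"],
}

cocitations = cocitation_counts(citing_papers)
for pair, count in sorted(cocitations.items()):
    print(pair, count)
```

Pairs with high counts are the ones that later literature repeatedly treats as related, which is what makes the measure useful for mapping the structure of a field.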

Bibliographic coupling analysis involves the identification of publications that share common references. The more references two publications have in common, the more likely they are to address related topics, so this technique is used to map the relationships between publications and to find the research areas that are most closely related.
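Bibliographic coupling can be sketched in a similar way: instead of asking which works are cited together, the sketch below counts how many references each pair of papers shares. The data and the function name `coupling_strength` are hypothetical and serve only as an illustration.

```python
from itertools import combinations


def coupling_strength(reference_lists):
    """Return the number of shared references for each pair of papers.

    Only pairs that share at least one reference are included.
    """
    strengths = {}
    for a, b in combinations(sorted(reference_lists), 2):
        shared = set(reference_lists[a]) & set(reference_lists[b])
        if shared:
            strengths[(a, b)] = len(shared)
    return strengths


# Hypothetical toy data: each paper and the references it lists.
papers = {
    "P1": ["A", "B", "C"],
    "P2": ["A", "B"],
    "P3": ["B", "C"],
}

couplings = coupling_strength(papers)
for pair, strength in sorted(couplings.items()):
    print(pair, strength)
```

Unlike co-citation counts, coupling strength is fixed once the papers are published, because it depends only on their own reference lists.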

- Bibliometric Indicators: Bibliometric indicators are quantitative measures that are used to evaluate the quality and impact of scientific publications. The most commonly used bibliometric indicators are the impact factor, h-index, and g-index.

The impact factor is a journal-level measure of the average number of citations received in a particular year by items the journal published in the two preceding years. It is calculated by dividing the number of citations received in that year by items published in the journal during the previous two years by the number of citable items the journal published in those two years. The impact factor is widely used to evaluate the quality and importance of scientific journals.
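A minimal sketch of the two-year calculation follows. The function name, parameter names, and the figures in the example are hypothetical; published impact factors also depend on database-specific rules about which items count as citable.

```python
def impact_factor(citations_this_year, citable_items_prior_two_years):
    """Two-year impact factor for a journal.

    citations_this_year: citations received in the target year by items
        the journal published in the two preceding years.
    citable_items_prior_two_years: number of citable items the journal
        published in those two preceding years.
    """
    if citable_items_prior_two_years <= 0:
        raise ValueError("the journal must have at least one citable item")
    return citations_this_year / citable_items_prior_two_years


# Hypothetical journal: 600 citations in the target year to items from the
# two preceding years, during which it published 200 citable items.
example_if = impact_factor(600, 200)
print(example_if)
```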

The h-index is a measure of an author’s productivity and impact. It is the largest number h such that the author has h publications that have each received at least h citations. The h-index is used to evaluate the productivity and impact of individual authors.
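One way to compute the h-index is to rank the citation counts in descending order and find the last rank at which the count is still at least as large as the rank. The sketch below does this; the citation counts are hypothetical.

```python
def h_index(citations):
    """Largest h such that h publications have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            # Counts only decrease from here, so no later rank can qualify.
            break
    return h


# Hypothetical author with five publications.
author_citations = [10, 8, 5, 4, 3]
print(h_index(author_citations))
```

For these counts, four publications have at least four citations each, but there are not five with at least five, so the h-index is 4.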

The g-index is a measure of an author’s productivity and impact that takes into account the distribution of citations across their publications. It is calculated by sorting an author’s publications in descending order of citations received and finding the largest number g such that the top g publications have together received at least g squared citations. Because highly cited publications raise the cumulative total, the g-index gives them more weight than the h-index does. The g-index is used to evaluate the productivity and impact of individual authors.
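The g-index can be sketched with a running total of citations over the ranked publications. This sketch assumes the common convention that g is the largest rank whose cumulative citations reach at least g squared, and that g cannot exceed the number of publications; some formulations relax the second assumption. The citation counts are hypothetical.

```python
def g_index(citations):
    """Largest g such that the top g publications have >= g**2 citations in total.

    Assumption: g is capped at the number of publications.
    """
    ranked = sorted(citations, reverse=True)
    total = 0
    g = 0
    for rank, count in enumerate(ranked, start=1):
        total += count
        if total >= rank * rank:
            g = rank
    return g


# Hypothetical author with five publications.
author_citations = [10, 8, 5, 4, 3]
print(g_index(author_citations))
```

Here the cumulative totals are 10, 18, 23, 27, and 30, which stay at or above 1, 4, 9, 16, and 25, so the g-index is 5 even though the h-index of the same list is 4.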

- Bibliometric Tools: Bibliometric tools are the databases and search services used to collect and analyze bibliometric data. The most commonly used bibliometric tools are Web of Science, Scopus, and Google Scholar.

Web of Science is a bibliographic and citation database that provides access to the world’s leading research literature. It is widely used to perform bibliometric analysis and to calculate bibliometric indicators.

Scopus is a bibliographic and citation database that covers scientific literature from all disciplines. Like Web of Science, it is widely used to perform bibliometric analysis and to calculate bibliometric indicators.

Google Scholar is a web-based search engine that indexes scientific literature from all disciplines. It is also widely used as a source of citation counts for bibliometric analysis and indicators.

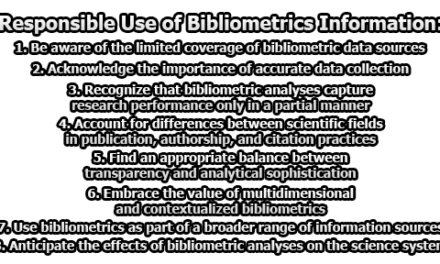

- Ethical Considerations: Bibliometric practices and activities raise a number of ethical considerations. One of the most important ethical considerations is the use of bibliometric indicators to evaluate the quality and impact of scientific publications and individual authors. Critics argue that bibliometric indicators can be easily manipulated and do not provide a complete picture of the quality and impact of scientific research.

Another ethical consideration is the use of bibliometric data to make decisions about funding, promotion, and tenure. Critics argue that bibliometric data should not be used as the sole criterion for making these decisions and that other factors such as the quality of the research, the impact on society, and the potential for future impact should also be taken into consideration.

Moreover, bibliometric analysis can also lead to biases and discrimination against certain research areas, institutions, and authors. For instance, research areas that are not well-established or do not generate a lot of citations may be overlooked or undervalued, leading to a lack of funding and resources. Similarly, institutions and authors from developing countries or non-English speaking countries may be at a disadvantage due to language barriers and limited access to research resources.

Furthermore, bibliometric data can be sensitive and confidential, especially when it involves personal information about authors, institutions, and funding agencies. Therefore, it is important to ensure that bibliometric data is collected, analyzed, and disseminated in a secure and ethical manner.

To address these ethical concerns, several guidelines and best practices have been developed for bibliometric practices and activities. For instance, the San Francisco Declaration on Research Assessment (DORA) was developed in 2012 to promote the responsible use of bibliometric indicators in research evaluation. DORA advocates for the use of multiple indicators to evaluate research quality and impact, rather than relying solely on bibliometric indicators such as the impact factor.

Other guidelines and best practices include the Leiden Manifesto for Research Metrics, the Responsible Metrics Movement, and the European Code of Conduct for Research Integrity. These guidelines emphasize the need for transparency, accountability, and ethical considerations in bibliometric practices and activities.

In conclusion, bibliometric practices and activities play an important role in evaluating research output and measuring the impact of research. Bibliometric analysis, bibliometric indicators, and bibliometric tools are widely used in academic and research institutions to assess the quality and importance of scientific publications and individual authors. However, bibliometric practices and activities raise several ethical considerations, including biases, discrimination, and the use of bibliometric data in decision-making. To address these concerns, guidelines and best practices have been developed to promote the responsible use of bibliometric indicators and to ensure that bibliometric data is collected, analyzed, and disseminated in an ethical and secure manner.

References:

- Bornmann, L., & Daniel, H. D. (2008). What do citation counts measure? A review of studies on citing behavior. Journal of Documentation, 64(1), 45-80. doi: 10.1108/00220410810844150

- Hicks, D., Wouters, P., Waltman, L., de Rijcke, S., & Rafols, I. (2015). Bibliometrics: The Leiden Manifesto for research metrics. Nature, 520(7548), 429-431. doi: 10.1038/520429a

- Ioannidis, J. P. A. (2005). Why most published research findings are false. PLOS Medicine, 2(8), e124. doi: 10.1371/journal.pmed.0020124

- Larivière, V., Haustein, S., & Mongeon, P. (2015). The oligopoly of academic publishers in the digital era. PLOS ONE, 10(6), e0127502. doi: 10.1371/journal.pone.0127502

- National Science Board. (2018). Science and Engineering Indicators 2018. Retrieved from https://www.nsf.gov/statistics/2018/nsb20181/

- San Francisco Declaration on Research Assessment. (2012). Retrieved from https://sfdora.org/read/

- van Leeuwen, T. N. (2013). Bibliometric research evaluations, Web of Science and the Social Science and Humanities: A problematic relationship? Journal of the Association for Information Science and Technology, 64(2), 352-362. doi: 10.1002/asi.22791

- Wilsdon, J., Allen, L., Belfiore, E., Campbell, P., Curry, S., Hill, S., … & Watermeyer, R. (2015). The metric tide: Report of the Independent Review of the Role of Metrics in Research Assessment and Management. Retrieved from https://doi.org/10.13140/RG.2.1.4929.1363

Former Student at Rajshahi University