How to Distributed Database Work:

A distributed database is a database that is spread across multiple sites or nodes, and the data is stored in a way that allows for processing and storage to be distributed among nodes. This architecture provides several advantages, such as improved performance, fault tolerance, and scalability. In this article, we will explore how to distributed database work.

1. Data Distribution:

1.1 Partitioning/Sharding: In a distributed database, partitioning (or sharding) involves breaking down the entire dataset into smaller, more manageable pieces called partitions or shards. Each partition is then assigned to a specific node within the distributed system.

Objective: The primary goal of partitioning is to distribute the data workload across multiple nodes, preventing a single node from becoming a bottleneck and improving overall system performance.

Partitioning Strategies:

- Hash-based Partitioning: The hash value of a particular attribute (e.g., customer ID) is used to determine the partition to which a record belongs. This ensures a relatively uniform distribution of data.

- Range-based Partitioning: Data is partitioned based on specific ranges of attribute values. For example, a partition may contain all records where the customer ID falls within a certain range.

- List-based Partitioning: Data is partitioned based on predefined lists of values for a particular attribute. Each partition is associated with a specific list of attribute values.

1.2 Replication: Replication involves creating and maintaining multiple copies of the same data on different nodes within the distributed database.

Objective: The main purpose of replication is to enhance fault tolerance and increase read performance. If one node fails, other nodes can still provide access to the replicated data. Additionally, read operations can be distributed among multiple nodes, reducing the load on any single node.

Types of Replication:

- Full Replication: All nodes maintain a complete copy of the entire dataset. This provides high fault tolerance but requires significant storage resources.

- Partial Replication: Only a subset of the data is replicated across nodes. This approach balances fault tolerance and resource utilization.

- Master-Slave Replication: One node (master) is responsible for handling write operations, and changes are propagated to other nodes (slaves). Read operations can be distributed among the slave nodes.

- Multi-Master Replication: Multiple nodes can handle both read and write operations independently, and changes are synchronized among all nodes.

1.3 Data Distribution Considerations:

Load Balancing: It’s crucial to balance the data workload evenly across all nodes to prevent performance bottlenecks. Dynamic load balancing mechanisms may be employed to adjust the distribution based on changing workloads.

Data Size and Access Patterns: The choice of partitioning strategy and replication mechanism depends on the size of the dataset and the expected access patterns (read-heavy, write-heavy, or balanced).

Consistency Trade-offs: Replication introduces the challenge of maintaining consistency between copies of data. Depending on the desired consistency model, systems may opt for eventual consistency or stronger consistency guarantees.

2. Transaction Management:

2.1 Definition of a Transaction: In a database context, a transaction is a logical unit of work that consists of one or more operations (read, write, update) executed on the database. Transactions ensure the ACID properties: Atomicity, Consistency, Isolation, and Durability.

ACID Properties:

- Atomicity: Transactions are treated as atomic units, meaning that all operations within a transaction are executed as a single, indivisible unit. If any part of the transaction fails, the entire transaction is rolled back to its original state.

- Consistency: Transactions bring the database from one consistent state to another. The integrity constraints of the database are maintained throughout the transaction.

- Isolation: Transactions execute independently of each other. The intermediate state of a transaction is not visible to other transactions until the transaction is committed.

- Durability: Once a transaction is committed, its effects on the database are permanent and survive subsequent system failures.

2.2 Challenges in Distributed Transactions:

Coordination and Consistency: Ensuring consistency in a distributed environment is challenging due to the need for coordination among multiple nodes. Different nodes may execute parts of a transaction, and coordinating their actions is essential.

Concurrency Control: Multiple transactions executing concurrently may lead to conflicts and inconsistencies. Concurrency control mechanisms, such as locking or timestamp ordering, are necessary to manage concurrent access to shared resources.

Two-Phase Commit Protocol: A widely used protocol for ensuring atomicity in distributed transactions is the Two-Phase Commit (2PC) protocol. It involves a coordinator node and participant nodes. In the first phase, the coordinator asks participants if they can commit. In the second phase, based on the responses, the coordinator decides whether to commit or abort the transaction.

2.3 Distributed Transaction Management:

Global Transaction Identifier (GTID): A unique identifier assigned to each transaction to distinguish it from others. The GTID helps in tracking the progress and status of a transaction across distributed nodes.

Transaction Manager: In a distributed environment, a transaction manager is responsible for coordinating the execution of transactions across multiple nodes. It ensures that all nodes agree on whether to commit or abort a transaction.

Distributed Locking: To maintain isolation between transactions, distributed databases often employ locking mechanisms. Locks are acquired on data items to prevent conflicting access by multiple transactions.

2.4 Optimistic Concurrency Control: In contrast to pessimistic concurrency control (locking), optimistic concurrency control assumes that conflicts between transactions are rare. Transactions are executed without acquiring locks, and conflicts are detected during the commit phase.

Conflict Resolution: If conflicts are detected, the system resolves them by either aborting one of the conflicting transactions or merging their changes in a way that preserves consistency.

2.5 CAP Theorem:

Theoretical Framework: The CAP theorem states that it is impossible for a distributed system to simultaneously provide all three guarantees of Consistency, Availability, and Partition Tolerance. Distributed databases often make trade-offs based on the CAP theorem, choosing to prioritize one or two of these guarantees.

2.6 In-Memory Transactions:

Memory-Optimized Databases: Some distributed databases leverage in-memory technologies for transaction processing, enabling faster data access and manipulation.

Snapshot Isolation: In-memory databases often use snapshot isolation, allowing transactions to work with a consistent snapshot of the data without locking.

3. Concurrency Control:

3.1 Concurrency Challenges in Distributed Databases:

Simultaneous Transactions: In a distributed environment, multiple transactions can execute concurrently on different nodes, potentially leading to conflicts and data inconsistencies.

Isolation Requirements: Ensuring the isolation of transactions, as per the ACID properties, is crucial to prevent interference between concurrently executing transactions.

3.2 Distributed Locking: Distributed locking is a mechanism used to control access to shared resources in a distributed database. It prevents conflicts by ensuring that only one transaction can access a particular resource at a time.

Types of Locks:

- Shared Locks: Multiple transactions can hold a shared lock on a resource simultaneously. This is typically used for read operations.

- Exclusive Locks: Only one transaction can hold an exclusive lock on a resource at a time. This is used for write operations.

3.3 Two-Phase Locking (2PL):

Principle: In a distributed environment, the Two-Phase Locking protocol is often employed to ensure transaction isolation. It consists of two phases: the growing phase (acquiring locks) and the shrinking phase (releasing locks). Once a transaction releases a lock, it cannot acquire new locks.

Deadlock Handling: Deadlocks, where transactions are waiting for locks held by each other, can occur. Distributed databases may employ deadlock detection and resolution mechanisms to address this issue.

3.4 Timestamp Ordering: Timestamp ordering is an optimistic concurrency control technique where each transaction is assigned a unique timestamp. Transactions are ordered based on their timestamps, and conflicts are resolved by comparing timestamps.

Read and Write Timestamps: The read timestamp ensures that a transaction reads a consistent snapshot of the database, while the write timestamp ensures that updates are applied in a consistent order.

3.5 Snapshot Isolation: Snapshot isolation allows transactions to work with a snapshot of the database at the start of the transaction. This snapshot remains consistent throughout the transaction, even if other transactions modify the data concurrently.

Read Consistency: Snapshot isolation provides a high level of read consistency without the need for locking. However, it may allow certain types of anomalies in write operations.

3.6 Optimistic Concurrency Control: Optimistic concurrency control assumes that conflicts between transactions are infrequent. Transactions proceed without acquiring locks, and conflicts are detected during the commit phase.

Conflict Detection: Techniques such as versioning or validation checks are employed to detect conflicts. If a conflict is detected, the system resolves it by aborting one of the conflicting transactions or merging their changes.

3.7 Consistency Models:

Eventual Consistency: In a distributed database, eventual consistency is a relaxed consistency model where all replicas will eventually converge to the same state. This model allows for high availability and fault tolerance but may result in temporary inconsistencies during updates.

Strong Consistency: Strong consistency models guarantee that all nodes in the distributed system have the same view of the data at all times. Achieving strong consistency may involve trade-offs in terms of performance and availability.

4. Distributed Query Processing:

4.1 Overview of Distributed Query Processing: Distributed query processing involves optimizing and executing queries that span multiple nodes in a distributed database.

Query Distribution: Queries can be distributed across nodes to leverage parallel processing and reduce overall query execution time.

Challenges: Distributed query processing must address challenges such as data distribution, network latency, and the coordination of intermediate results.

4.2 Query Optimization:

Query Plan: The database system generates a query plan, which is a sequence of operations to execute the query. Each operation can be executed on a different node.

Cost-Based Optimization: Optimizers analyze various execution plans and choose the one with the lowest estimated cost based on factors such as data distribution, indexing, and network latency.

Parallel Execution: Queries can be parallelized by distributing subtasks across multiple nodes. This requires efficient coordination and synchronization mechanisms.

4.3 Data Localization:

Data Movement: Minimizing data movement across the network is essential for performance. Data localization aims to execute queries on nodes where the required data is already present, reducing the need for data transfer.

Partition Pruning: In partitioned databases, unnecessary partitions are excluded from query execution based on the query predicates, reducing the amount of data to be processed.

4.4 Distributed Joins:

Join Operations: Joining tables distributed across different nodes requires careful optimization to minimize data movement.

Broadcast Join: The smaller table is broadcasted to all nodes containing the larger table, reducing the need for extensive data transfer. This is effective when one table is significantly smaller than the other.

Partitioned Join: Both tables are partitioned based on the join key, and corresponding partitions are joined on each node. This approach is suitable when both tables are of comparable size.

4.5 Network Awareness:

Network Latency: Distributed query processing must consider the latency introduced by network communication. Minimizing the amount of data transferred and optimizing communication patterns are crucial for performance.

Data Compression: Techniques such as data compression can be employed to reduce the volume of data transferred over the network.

4.6 Caching and Materialized Views:

Query Result Caching: Storing the results of frequently executed queries can improve performance by reducing the need to re-compute the same results.

Materialized Views: Precomputed views of data are stored, and queries can be directed to these views when appropriate. This reduces the need to perform expensive computations during query execution.

4.7 Global Query Optimization:

Centralized vs. Distributed Optimization: In some distributed databases, query optimization can be performed centrally by a query coordinator, while in others, each node may optimize its part of the query independently.

Global Cost Models: Considering the global cost of query execution, including data transfer costs and local processing costs, is essential for effective distributed query optimization.

4.8 Examples of Distributed Database Systems:

Google Bigtable: A distributed storage system used by Google for large-scale data processing.

Apache Hadoop: An open-source framework for distributed storage and processing of large datasets.

Amazon Redshift: A fully managed data warehouse service that operates in a distributed manner for high-performance analytics.

5. Communication Protocols:

5.1 Importance of Communication in Distributed Databases:

Coordination: Nodes in a distributed database need to coordinate their actions, share information, and communicate to ensure consistent data management.

Data Transfer: Efficient communication is vital for transmitting data between nodes, especially in scenarios involving distributed transactions or distributed query processing.

5.2 Message Passing: Message passing is a communication paradigm where nodes in a distributed system communicate by sending and receiving messages.

Synchronous vs. Asynchronous: Communication can be synchronous (blocking) or asynchronous (non-blocking), depending on whether the sender waits for a response.

Protocols: Well-defined communication protocols ensure that messages are formatted and interpreted consistently across nodes.

5.3 Remote Procedure Call (RPC): RPC allows a program to cause a procedure (subroutine) to execute on another address space as if it were a local procedure call, hiding the distributed nature of the interaction.

Stub: A stub on the client side and a skeleton on the server side handle the details of marshaling and un-marshaling parameters, making the procedure call appear local.

5.4 Publish-Subscribe Model: In a publish-subscribe model, nodes can subscribe to specific events or topics. Publishers send messages related to those events, and subscribers receive relevant messages.

Decoupling: This model decouples the sender (publisher) from the receiver (subscriber), allowing for a more flexible and scalable communication pattern.

5.5 Middleware and Message Queues:

Middleware: Middleware provides a layer of software that facilitates communication and data management between distributed components.

Message Queues: Message queues enable asynchronous communication by allowing nodes to send messages to a queue, which can be consumed by other nodes at their own pace.

5.6 RESTful APIs:

REST Architecture: Representational State Transfer (REST) is an architectural style that uses a stateless, client-server communication model.

HTTP Methods: RESTful APIs use standard HTTP methods (GET, POST, PUT, DELETE) for communication, making them widely compatible and accessible.

5.7 Data Serialization: Data serialization is the process of converting data structures or objects into a format that can be easily transmitted and reconstructed at the receiving end.

JSON, XML, Protocol Buffers: Common serialization formats include JSON (JavaScript Object Notation), XML (eXtensible Markup Language), and Protocol Buffers, each with its advantages and use cases.

5.8 Security Protocols:

Encryption: Communication between nodes in a distributed database often involves encryption to secure data in transit.

Authentication: Nodes must authenticate each other to ensure that communication is between trusted entities.

Secure Sockets Layer (SSL) and Transport Layer Security (TLS): Protocols like SSL and TLS provide secure communication channels over the Internet.

5.9 Coordination Protocols:

Two-Phase Commit Protocol (2PC): Ensures atomicity in distributed transactions by coordinating commit or abort decisions among nodes.

Paxos and Raft: Consensus algorithms like Paxos and Raft are used for reaching an agreement among distributed nodes, providing fault tolerance and consistency.

5.10 Examples of Communication Libraries:

Apache Thrift: An open-source framework for building cross-language services.

gRPC: A high-performance, open-source RPC framework developed by Google.

ZeroMQ: A lightweight messaging library for distributed applications.

6. Fault Tolerance:

6.1 Importance of Fault Tolerance in Distributed Databases: Fault tolerance refers to a system’s ability to continue operating in the presence of failures or errors.

Critical for Reliability: Distributed databases must be fault-tolerant to ensure data availability, consistency, and reliability, especially in large-scale systems where hardware failures, network issues, or software errors are inevitable.

6.2 Replication for Fault Tolerance:

Replication Basics: Maintaining multiple copies (replicas) of data across different nodes improves fault tolerance.

Read and Write Operations: Replicas can be used to distribute read operations, enhancing performance, and to provide data redundancy for write operations, ensuring data durability.

6.3 Quorum-Based Systems: Quorum systems require a minimum number of nodes to agree on an operation for it to be considered successful.

Read and Write Quorums: Quorums can be defined for read and write operations separately. For example, a write may require a majority of replicas to agree (write quorum), while a read may require a smaller subset (read quorum).

6.4 Consensus Algorithms:

Role in Fault Tolerance: Consensus algorithms are crucial for reaching agreement among distributed nodes, especially in the presence of failures.

Examples: Paxos and Raft are consensus algorithms used to ensure that a distributed system agrees on a single, consistent result even if some nodes fail.

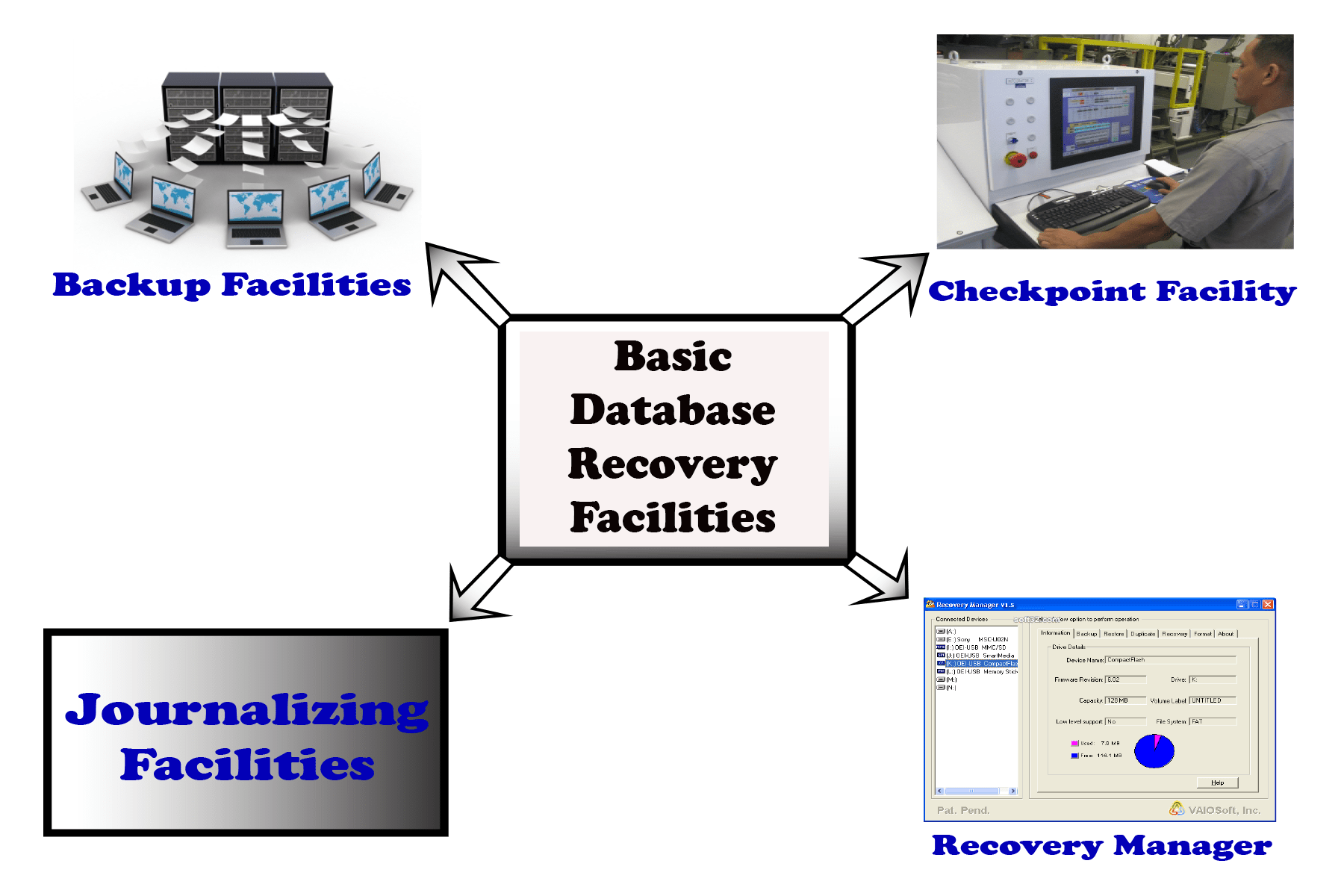

6.5 Data Recovery and Redundancy:

Backup Strategies: Regular backups of data are essential for recovery in case of data corruption, accidental deletions, or catastrophic failures.

Redundancy: Redundant hardware, such as power supplies and network connections, can prevent single points of failure.

6.6 Automated Failure Detection and Recovery:

Failure Detection: Automated systems continuously monitor the health of nodes and detect failures promptly.

Recovery Mechanisms: Automated recovery mechanisms can include node replacements, data restoration from backups, and reconfiguration of the system to adapt to the changes in the network.

6.7 Load Balancing for Fault Tolerance: Load balancing ensures that the workload is distributed evenly across all nodes, preventing individual nodes from becoming overloaded.

Dynamic Load Balancing: Systems can adapt to changes in load by dynamically redistributing data or adjusting the allocation of resources among nodes.

6.8 Consistency Models and Fault Tolerance Trade-offs:

Eventual Consistency: Systems that prioritize eventual consistency may sacrifice immediate consistency for improved fault tolerance and availability.

CAP Theorem Revisited: The CAP theorem suggests that achieving both consistency and availability in the presence of network partitions is challenging, forcing systems to make trade-offs.

6.9 Geographical Distribution:

Disaster Recovery: Geographically distributed databases can enhance fault tolerance by providing disaster recovery capabilities. Data is replicated across different geographical locations to mitigate the impact of regional disasters.

6.10 Example of Fault-Tolerant Architectures:

Amazon DynamoDB: A fully managed NoSQL database service designed for high availability and fault tolerance. It uses a combination of partitioning, replication, and quorum-based techniques to ensure resilience.

7. Consistency Models:

7.1 Consistency in Distributed Databases: Consistency refers to the agreement of all nodes in a distributed system on the current state of the data. It ensures that all nodes see a consistent view of the data despite concurrent updates.

7.2 Eventual Consistency: Eventual consistency is a relaxed consistency model that allows replicas of data to become consistent over time, even in the presence of network partitions or updates.

Trade-offs: It prioritizes availability and partition tolerance over immediate consistency, accepting that nodes might temporarily have different views of the data.

7.3 Strong Consistency: Strong consistency ensures that all nodes in the system have a synchronized view of the data at all times.

Linearizability: A stronger form of consistency where operations appear to be instantaneous and appear to take effect at a single point in time between their invocation and response.

7.4 Causal Consistency: Causal consistency ensures that operations that are causally related are seen by all nodes in a consistent order.

Causally Related Operations: If operation A causally precedes operation B, then all nodes must agree on the order in which they see A and B.

7.5 Read and Write Consistency:

Read Consistency: Specifies the guarantees provided when reading data. It may involve ensuring that a read operation reflects the most recent write or that it returns a value not older than a certain timestamp.

Write Consistency: Specifies the guarantees provided when writing data. It may involve ensuring that a write is visible to all nodes before acknowledging its success.

7.6 Brewer’s CAP Theorem:

Theoretical Framework: Brewer’s CAP theorem states that a distributed system can achieve at most two out of three guarantees: Consistency, Availability, and Partition Tolerance.

Trade-offs: The theorem highlights the inherent trade-offs that distributed databases must make in the face of network partitions.

7.7 BASE Model:

BASE vs. ACID: While ACID (Atomicity, Consistency, Isolation, Durability) properties provide strong guarantees, the BASE model (Basically Available, Soft state, Eventually consistent) relaxes some of these constraints in favor of availability and partition tolerance.

Soft State: Systems may exhibit temporary inconsistency, and the state is considered soft or adaptable over time.

7.8 Optimistic vs. Pessimistic Consistency:

Optimistic Consistency: Assumes that conflicts between concurrent transactions are infrequent. Conflicts, if detected, are resolved during the commit phase.

Pessimistic Consistency: Involves locking mechanisms to prevent conflicts and maintain consistency throughout the execution of transactions.

7.9 Consistency Levels in Distributed Databases:

Read Consistency Levels: Specifies how many nodes must respond to a read operation before it is considered successful. For example, a quorum of nodes may be required.

Write Consistency Levels: Specifies how many nodes must acknowledge a write operation before it is considered successful.

7.10 Examples of Consistency Models in Databases:

Amazon DynamoDB: Offers configurable consistency levels, allowing users to choose between eventually consistent reads, strongly consistent reads, and other options.

Cassandra: Supports tunable consistency, enabling users to balance between consistency and availability by configuring read and write consistency levels.

8. Global Transaction Management:

8.1 Overview of Global Transaction Management: Global transaction management involves coordinating and managing transactions that span multiple nodes in a distributed database. Ensuring the ACID properties (Atomicity, Consistency, Isolation, Durability) across these distributed transactions is a complex task.

8.2 Global Transaction Identifier (GTID):

Role of GTID: A Global Transaction Identifier is a unique identifier assigned to each transaction to distinguish it from others. It helps in tracking the progress and status of a transaction across distributed nodes.

Global Uniqueness: GTIDs are globally unique to prevent conflicts and ensure that each transaction is identified uniquely.

8.3 Transaction Manager: The transaction manager is a central component responsible for coordinating the execution of distributed transactions.

Functions:

- Initiation: Starts the transaction and assigns a GTID.

- Coordination: Ensures that all nodes involved in the transaction reach a consensus on committing or aborting.

- Commit or Abort Decision: Based on the responses from participating nodes, the transaction manager decides whether to commit or abort the transaction.

8.4 Two-Phase Commit Protocol (2PC): 2PC is a widely used protocol for achieving atomicity in distributed transactions. It involves two phases: the prepare phase and the commit (or abort) phase.

Prepare Phase: The transaction manager asks all participating nodes if they are ready to commit. Nodes reply with either “yes” or “no.”

Commit Phase: If all nodes respond with “yes,” the transaction manager instructs all nodes to commit. If any node responds with “no,” the transaction manager instructs all nodes to abort.

8.5 Three-Phase Commit Protocol (3PC):

Enhancement of 2PC: 3PC is an extension of the 2PC protocol that introduces a third phase, the “pre-commit” phase, to address some of the limitations of 2PC.

Pre-Commit Phase: After the prepare phase, there is an additional phase where nodes acknowledge their prepared state. This helps in handling scenarios where nodes may crash after indicating readiness in 2PC.

8.6 Heuristics and Transaction Recovery:

Heuristic Commit and Abort: Heuristic decisions occur when some nodes commit while others abort, leading to an inconsistent state. Heuristic commit and heuristic abort are strategies for handling such situations.

Transaction Recovery: In the event of failures, transaction recovery mechanisms are employed to bring the system back to a consistent state. This may involve rolling back incomplete transactions or committing prepared transactions.

8.7 Distributed Locking and Isolation:

Distributed Locking: Ensures that transactions have a consistent view of the data and prevents conflicts. Distributed locks are acquired and released in coordination with the transaction manager.

Isolation Levels: The transaction manager plays a role in enforcing isolation levels to control the visibility of intermediate transaction states to other transactions.

8.8 X/Open XA Standard:

XA Interface: The XA standard defines an interface between a transaction manager and a resource manager (database or other transactional resource).

Two-Phase Commit: XA provides a framework for implementing the two-phase commit protocol.

8.9 Challenges in Global Transaction Management:

Concurrency Control: Coordinating concurrent transactions across multiple nodes requires robust concurrency control mechanisms.

Fault Tolerance: Handling failures, network partitions, and ensuring recovery without violating the ACID properties pose significant challenges.

8.10 Examples of Distributed Database Systems with Global Transaction Management:

Oracle Distributed Database: Oracle provides a distributed database solution with support for global transactions and distributed transaction management.

Microsoft SQL Server: SQL Server supports distributed transactions using the Microsoft Distributed Transaction Coordinator (MSDTC).

9. Scalability in Distributed Databases:

9.1 Definition of Scalability: Scalability is the ability of a system to handle an increasing amount of workload or growing number of users by adding resources without significantly affecting performance.

9.2 Horizontal and Vertical Scaling:

Horizontal Scaling (Scale-Out): Involves adding more nodes to a distributed system to increase capacity. It is often achieved by adding more servers or instances.

Vertical Scaling (Scale-Up): Involves increasing the resources of individual nodes, such as adding more CPU, memory, or storage to a single server.

9.3 Data Partitioning and Sharding:

Data Partitioning: Dividing the dataset into smaller partitions to distribute the workload across multiple nodes. Each node is responsible for a subset of the data.

Sharding: Another term for data partitioning, where each partition is often referred to as a shard.

9.4 Load Balancing:

Role of Load Balancing: Load balancing ensures that the workload is distributed evenly across all nodes in a distributed system.

Dynamic Load Balancing: Systems can adapt to changes in workload by dynamically redistributing data or adjusting the allocation of resources among nodes.

9.5 Elasticity:

Elastic Scaling: Elasticity refers to the ability of a system to automatically adapt to changes in workload by dynamically provisioning or de-provisioning resources.

Auto-Scaling: Automated mechanisms that scale the system based on predefined criteria, such as increased traffic or resource usage.

9.6 Shared-Nothing Architecture: Shared-nothing architecture ensures that each node in the distributed system operates independently and does not share memory or disk storage with other nodes.

Advantages: Simplifies scaling, as adding new nodes does not require shared resources. It also enhances fault isolation.

9.7 Consistent Hashing: Consistent hashing is a technique used in distributed databases to map keys or data to nodes in a way that minimizes the amount of data movement when nodes are added or removed.

Benefits: It helps in maintaining a balanced distribution of data, even when the number of nodes changes.

9.8 CAP Theorem and Scalability:

Trade-offs in CAP Theorem: The CAP theorem suggests that distributed systems can achieve at most two out of three guarantees: Consistency, Availability, and Partition Tolerance.

Impact on Scalability: The choice of consistency and availability trade-offs can influence the scalability characteristics of a distributed database.

9.9 Microservices and Scalability:

Microservices Architecture: In a microservices architecture, different components of an application are developed and deployed independently, which can enhance scalability.

Independent Scaling: Each microservice can be scaled independently based on its specific resource needs.

9.10 Challenges in Scalability:

Data Distribution Complexity: Distributing and partitioning data across nodes can be complex, especially when dealing with changing workloads.

Coordination Overhead: As the number of nodes increases, the overhead of coordinating activities, such as distributed transactions or consensus, may impact scalability.

9.11 Examples of Scalable Database Systems:

Apache Cassandra: A NoSQL database designed for high scalability and fault tolerance.

Google Bigtable: A distributed storage system that can scale horizontally to handle massive amounts of data.

10. Security in Distributed Databases:

10.1 Security Challenges in Distributed Databases:

Network Vulnerabilities: Communication between distributed nodes can be intercepted, leading to potential security threats.

Data Distribution: The distribution of data across multiple nodes increases the risk of unauthorized access or data breaches.

Coordination Protocols: Protocols used for distributed coordination, such as consensus algorithms, need to be secure to prevent attacks.

10.2 Encryption and Secure Communication:

Encryption at Rest: Protects data stored on disk by encrypting it, ensuring that even if physical access is gained, the data remains secure.

Encryption in Transit: Ensures that data is encrypted during transmission between nodes, preventing eavesdropping or interception.

10.3 Authentication and Authorization:

Authentication: Verifying the identity of users or nodes to ensure that only authorized entities access the system.

Authorization: Determining the permissions and privileges of authenticated entities, specifying what actions they are allowed to perform.

10.4 Role-Based Access Control (RBAC): RBAC is a security model where access permissions are tied to roles, and users or nodes are assigned specific roles based on their responsibilities.

Granular Access Control: RBAC allows for fine-grained access control by assigning roles with specific privileges.

10.5 Secure Distributed Transactions:

Secure Communication: Ensuring that communication during distributed transactions is secure, preventing tampering or interception.

Transaction Integrity: Guaranteeing the integrity of transactions, even in the presence of malicious nodes or network attacks.

10.6 Secure Data Replication:

Replication Security: Securing the process of replicating data across nodes to prevent unauthorized access to replicas.

Consistency and Security: Balancing the need for data consistency with security measures to ensure that replicas remain secure and consistent.

10.7 Auditing and Logging:

Audit Trails: Logging and auditing mechanisms help track and monitor user activities and system events.

Compliance Requirements: Auditing supports compliance with regulatory requirements by providing a record of system activities.

10.8 Secure Protocols for Distributed Systems:

TLS/SSL: Transport Layer Security (TLS) and its predecessor, Secure Sockets Layer (SSL), are widely used to secure communication between nodes.

Kerberos: A network authentication protocol that provides secure authentication for distributed systems.

10.9 Data Privacy and Compliance:

Privacy Concerns: Distributed databases often handle sensitive data, requiring measures to protect individual privacy and comply with data protection regulations.

GDPR, HIPAA, etc.: Compliance with regulations such as the General Data Protection Regulation (GDPR) or the Health Insurance Portability and Accountability Act (HIPAA) is crucial.

10.10 Intrusion Detection and Prevention:

Detection Systems: Implementing intrusion detection systems to identify and respond to security threats in real-time.

Prevention Measures: Proactive measures to prevent security breaches, such as firewalls, access controls, and security policies.

10.11 Examples of Secure Distributed Database Systems:

Microsoft Azure Cosmos DB: A globally distributed, multi-model database service with built-in security features, including encryption, authentication, and auditing.

MongoDB Atlas: A managed MongoDB service that provides security features like encryption in transit, encryption at rest, and role-based access control.

In conclusion, distributed databases leverage data distribution, transaction management, concurrency control, communication protocols, fault tolerance, consistency models, global transaction management, scalability, and security mechanisms to provide efficient and reliable data storage and processing across multiple nodes. The design considerations depend on the specific requirements of the application and the desired trade-offs between performance, consistency, and fault tolerance.

Library Lecturer at Nurul Amin Degree College