Supervised Machine Learning:

Supervised learning is the type of machine learning in which machines are trained on well-labeled training data, where each input is tagged with the correct output, and then use what they have learned to predict the output for new inputs. The labeled data acts as a guide, enabling machines to predict outcomes accurately. Similar to a student learning under a teacher’s guidance, supervised learning pairs input and output data for the model to learn from. The goal is to learn a mapping from input (x) to output (y). In the real world, supervised learning is used for risk assessment, image classification, fraud detection, spam filtering, and more.

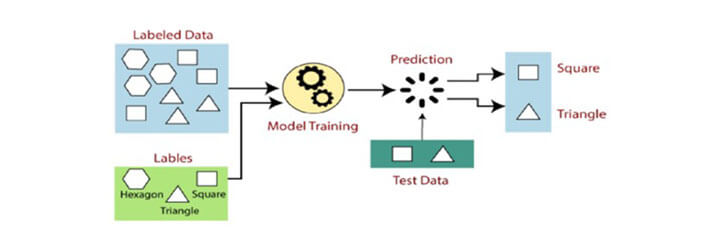

How Supervised Learning Works:

In supervised learning, a model is trained on a labeled dataset, from which it learns how each type of input relates to its correct output. Once training is complete, the model is evaluated on test data (a held-out portion of the labeled data that was not used for training), and it then predicts outputs for new inputs.
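As a rough illustration of the train/test step, here is a minimal Python sketch (standard library only) that shuffles labeled (input, label) pairs and holds out a fraction of them as a test set. The function name `holdout_split`, the 25% test fraction, and the toy data are illustrative assumptions, not part of any specific library.

```python
import random

def holdout_split(examples, test_fraction=0.25, seed=0):
    """Shuffle labeled (input, label) pairs and hold out a fraction for testing.

    The returned test set never overlaps the training set, so evaluating on it
    estimates how the model will perform on examples it has not seen.
    """
    shuffled = list(examples)              # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a reproducible split
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)

# Hypothetical labeled data: each input x is tagged with its correct output.
data = [(x, "even" if x % 2 == 0 else "odd") for x in range(20)]
train, test = holdout_split(data)
print(len(train), len(test))  # 15 5
```

The model would then be fitted only on `train` and scored only on `test`.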

The following example and diagram show how supervised learning works:

Suppose we have a dataset of different types of shapes, including squares, rectangles, triangles, and hexagons. The first step is to train the model on labeled examples of each shape.

- If the given shape has four sides, and all the sides are equal, then it will be labeled as a square.

- If the given shape has four sides, and only its opposite sides are equal, then it will be labeled as a rectangle.

- If the given shape has three sides, then it will be labeled as a triangle.

- If the given shape has six equal sides, then it will be labeled as a hexagon.

Now, after training, we test our model using the test set, and the task of the model is to identify the shape.

Because the model has already been trained on all these types of shapes, when it encounters a new shape it classifies it on the basis of its number of sides (and whether those sides are equal) and predicts the output.
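The shape example can be sketched in Python. Instead of hard-coding the rules, the model below learns them from labeled examples by recording the most common label for each combination of features. The feature choice (number of sides, whether all sides are equal), the helper names, and the toy training data are assumptions made for illustration.

```python
from collections import Counter, defaultdict

def features(side_lengths):
    """Describe a shape by its number of sides and whether all sides are equal."""
    return (len(side_lengths), len(set(side_lengths)) == 1)

def train(labeled_shapes):
    """Learn the most common label seen for each feature combination."""
    votes = defaultdict(Counter)
    for sides, label in labeled_shapes:
        votes[features(sides)][label] += 1
    return {f: counts.most_common(1)[0][0] for f, counts in votes.items()}

def predict(model, sides):
    """Predict a label for a new shape, or None if its features were never seen."""
    return model.get(features(sides))

# Hypothetical labeled training data: (side lengths, label).
training_data = [
    ([2, 2, 2, 2], "square"),
    ([5, 5, 5, 5], "square"),
    ([2, 4, 2, 4], "rectangle"),
    ([3, 4, 5], "triangle"),
    ([1, 1, 1], "triangle"),
    ([3, 3, 3, 3, 3, 3], "hexagon"),
]
model = train(training_data)
print(predict(model, [7, 7, 7, 7]))  # square
print(predict(model, [6, 8, 10]))    # triangle
```

A shape whose features never appeared in training (for example, a pentagon) gets `None`, a small reminder that the model only knows what its labeled data showed it.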

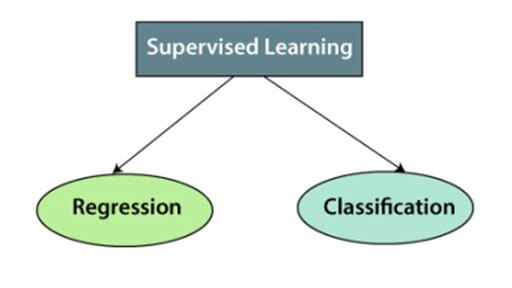

Types of Supervised Machine Learning Algorithms:

Supervised learning can be further divided into two types of problems:

1. Regression: Regression is a method in machine learning for predicting continuous outcomes based on input variables. It is applied to problems such as weather forecasting and market-trend prediction. Below are some popular regression algorithms used in supervised learning:

a) Linear Regression:

- Description: Linear regression models the relationship between the input features and a continuous target variable by fitting a linear equation.

- Use Cases: Predicting house prices, stock prices, temperature, etc.
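For a single input feature, linear regression has a closed-form least-squares solution: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the point of means. A minimal Python sketch, with a hypothetical function name and noise-free toy data:

```python
def fit_simple_linear_regression(xs, ys):
    """Least-squares fit of y = w * x + b for a single input feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept passes through the means.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b

# Toy data generated from y = 2x + 1 with no noise, so the fit should recover it.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
w, b = fit_simple_linear_regression(xs, ys)
print(w, b)  # 2.0 1.0
```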

b) Decision Trees for Regression (CART):

- Description: Decision trees are used for regression tasks, where each leaf node represents a numerical value.

- Use Cases: Predicting sales, product demand, or any numerical value.
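The idea that each leaf holds a numerical value can be seen in a depth-1 tree, often called a stump. The Python sketch below is written from scratch for illustration rather than taken from any CART implementation: it tries every split point on one feature, keeps the one with the lowest squared error, and predicts the mean target on each side. It assumes at least two distinct x values; the names and data are hypothetical.

```python
def fit_regression_stump(xs, ys):
    """Fit a depth-1 regression tree on one feature; each leaf predicts a mean value.

    Assumes xs contains at least two distinct values.
    """
    pairs = sorted(zip(xs, ys))
    best = None  # (squared error, threshold, left leaf value, right leaf value)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for x, y in pairs if x <= threshold]
        right = [y for x, y in pairs if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

# Hypothetical data: advertising spend -> units sold.
spend = [1, 2, 3, 10, 11, 12]
sales = [5, 6, 7, 20, 21, 22]
predict = fit_regression_stump(spend, sales)
print(predict(2.5), predict(11))  # 6.0 21.0
```

Deeper trees repeat the same split search recursively inside each leaf.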

c) Random Forest Regression:

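- Description: An ensemble of decision trees for regression, each trained on a random sample of the data and features; averaging their predictions reduces overfitting and usually improves accuracy compared with a single tree.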

- Use Cases: Predicting real estate prices, financial forecasting.
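A simplified Python sketch of the ensemble idea behind random forest regression: each tree is trained on a bootstrap sample (rows drawn with replacement) and the ensemble averages their predictions. To stay short it uses depth-1 trees on a single feature, so it omits the random feature selection that full random forests also use; treat it as bagging rather than a complete random forest. Function names, data, and the tree count are hypothetical.

```python
import random

def fit_regression_stump(xs, ys):
    """Depth-1 regression tree; predicts the overall mean if no split is possible."""
    pairs = sorted(zip(xs, ys))
    overall = sum(ys) / len(ys)
    best = (float("inf"), None, overall, overall)  # (sse, threshold, left, right)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # equal x values cannot be separated
        t = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for x, y in pairs if x <= t]
        right = [y for x, y in pairs if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    if t is None:
        return lambda x: overall
    return lambda x: lm if x <= t else rm

def fit_bagged_trees(xs, ys, n_trees=25, seed=0):
    """Train each tree on a bootstrap sample and average the trees' predictions."""
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        trees.append(fit_regression_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(tree(x) for tree in trees) / len(trees)

spend = [1, 2, 3, 10, 11, 12]
sales = [5, 6, 7, 20, 21, 22]
forest = fit_bagged_trees(spend, sales)
print(round(forest(2), 2), round(forest(11), 2))
```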

d) Support Vector Regression (SVR):

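- Description: An extension of Support Vector Machines (SVM) to regression tasks. It fits a function (a hyperplane, possibly in a transformed feature space) that keeps as many data points as possible within a margin of tolerance ε, penalizing only errors larger than ε.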

- Use Cases: Predicting stock prices, quality control.
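SVR's tolerance margin comes from its loss function, usually called the ε-insensitive loss: errors smaller than ε cost nothing, and larger errors cost linearly. The Python sketch below shows only that loss; a full SVR also penalizes model complexity and is normally trained with a dedicated solver. The ε value of 0.5 is an arbitrary assumption.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.5):
    """Loss used by SVR: zero inside the epsilon tube, linear outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)

print(epsilon_insensitive_loss(10.0, 10.3))  # 0.0: error 0.3 is inside the tube
print(epsilon_insensitive_loss(10.0, 12.0))  # 1.5: error 2.0 exceeds epsilon by 1.5
```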

e) Polynomial Regression:

- Description: Extends linear regression by considering polynomial relationships between input features and the target variable.

- Use Cases: Modeling non-linear relationships in data.
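Polynomial regression is still linear regression, just on expanded features: each input x becomes [1, x, x^2, …] and ordinary least squares is fitted on those columns. A from-scratch Python sketch, assuming a single input feature and solving the normal equations with a small Gaussian-elimination helper; that is fine for tiny examples, while real libraries use more numerically robust solvers. Names and data are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        # Swap in the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def fit_polynomial_regression(xs, ys, degree=2):
    """Least-squares fit of y = w0 + w1*x + ... + wd*x^d on expanded features."""
    rows = [[x ** p for p in range(degree + 1)] for x in xs]  # [1, x, x^2, ...]
    k = degree + 1
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(rows, ys)) for i in range(k)]
    return solve_linear_system(xtx, xty)

xs = [-2, -1, 0, 1, 2]
ys = [3 - x + x ** 2 for x in xs]  # an exact quadratic, so the fit should recover it
coefficients = fit_polynomial_regression(xs, ys)
print([round(c, 6) for c in coefficients])  # [3.0, -1.0, 1.0]
```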

f) Ridge Regression:

- Description: Adds L2 regularization to linear regression to prevent overfitting by penalizing large coefficients.

- Use Cases: When dealing with multicollinearity in feature data.
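With one feature and no intercept, Ridge regression has a simple closed form that makes the shrinkage visible: minimizing ½Σ(y − wx)² + ½λw² gives w = Σxy / (Σx² + λ). A minimal Python sketch; the function name, the λ values, and the data are illustrative assumptions.

```python
def ridge_slope(xs, ys, lam):
    """Ridge coefficient for one feature with no intercept.

    Minimizes 0.5 * sum((y - w*x)^2) + 0.5 * lam * w^2, whose closed-form
    solution is w = sum(x*y) / (sum(x^2) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # exactly y = 2x
for lam in (0.0, 1.0, 10.0):
    # Larger penalties shrink the coefficient toward zero (but never exactly to zero).
    print(lam, round(ridge_slope(xs, ys, lam), 3))  # 2.0, then 1.867, then 1.167
```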

g) Lasso Regression:

- Description: Similar to Ridge, but uses L1 regularization to encourage sparsity in the model.

- Use Cases: Feature selection and when you want a more interpretable model.
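The sparsity comes from the soft-thresholding effect of the L1 penalty. In the same one-feature, no-intercept setting, minimizing ½Σ(y − wx)² + λ|w| gives w = S(Σxy, λ) / Σx², where S shrinks its argument toward zero by λ and returns exactly zero once |Σxy| ≤ λ. A minimal Python sketch with hypothetical names and data:

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam, returning exactly 0 when |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def lasso_slope(xs, ys, lam):
    """Lasso coefficient for one feature with no intercept.

    Minimizes 0.5 * sum((y - w*x)^2) + lam * |w|; the solution is
    soft_threshold(sum(x*y), lam) / sum(x^2).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, lam) / sxx

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # sum(x*y) = 28, sum(x^2) = 14
for lam in (0.0, 14.0, 30.0):
    print(lam, lasso_slope(xs, ys, lam))  # 2.0, then 1.0, then exactly 0.0
```

The exact zero at λ = 30 is what lets Lasso drop features entirely, unlike Ridge.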

h) ElasticNet Regression:

- Description: Combines L1 and L2 regularization to balance their effects in the model.

- Use Cases: Datasets with many correlated features, where you want the feature selection of Lasso together with the stability of Ridge.
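Combining both penalties in the same one-feature, no-intercept setting, minimizing ½Σ(y − wx)² + λ₁|w| + ½λ₂w² gives w = S(Σxy, λ₁) / (Σx² + λ₂): the L1 term can set the coefficient to zero while the L2 term shrinks it. This parameterization is chosen for readability and differs from the parameters libraries usually expose; the names and data are hypothetical.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam, returning exactly 0 when |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def elastic_net_slope(xs, ys, l1, l2):
    """Minimizes 0.5*sum((y - w*x)^2) + l1*|w| + 0.5*l2*w^2 (one feature, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, l1) / (sxx + l2)

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # sum(x*y) = 28, sum(x^2) = 14
print(elastic_net_slope(xs, ys, 0.0, 0.0))    # 2.0: plain least squares
print(elastic_net_slope(xs, ys, 14.0, 0.0))   # 1.0: pure L1 (Lasso)
print(elastic_net_slope(xs, ys, 14.0, 14.0))  # 0.5: both penalties
print(elastic_net_slope(xs, ys, 30.0, 14.0))  # 0.0: L1 zeroes the coefficient
```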

i) K-Nearest Neighbors (KNN) Regression:

- Description: Predicts the target based on the average (or weighted average) of the k-nearest data points in the training set.

- Use Cases: Predicting housing prices based on similar neighborhood properties.
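A minimal Python sketch of KNN regression (requires Python 3.8+ for `math.dist`): sort the training points by Euclidean distance to the query and average the targets of the k closest. The house features and prices are invented, and sizes are given in units of 100 m² only so that the two features have comparable scales; real data would usually be standardized first.

```python
import math

def knn_regress(train, query, k=3):
    """Predict the average target of the k training points closest to query.

    train is a list of (feature_vector, target) pairs; distance is Euclidean.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return sum(target for _, target in nearest) / len(nearest)

# Hypothetical houses: (size in 100 m^2, rooms) -> price in thousands.
houses = [
    ((1.0, 2), 150),
    ((1.2, 3), 180),
    ((1.1, 2), 160),
    ((3.0, 5), 420),
    ((2.8, 4), 400),
]
print(knn_regress(houses, (1.1, 3), k=3))  # average of the three small houses
```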

2. Classification: Classification algorithms are used when the output variable is categorical, meaning it takes one of a fixed set of classes: two classes in binary problems (such as Yes-No, Male-Female, True-False) or more than two in multi-class problems. Below are some popular classification algorithms used in supervised learning:

a) Logistic Regression:

- Description: Used for binary classification, estimating the probability of an instance belonging to a particular class.

- Use Cases: Spam detection, credit risk assessment.
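A minimal Python sketch of logistic regression on one feature: the model outputs p = sigmoid(w·x + b), and w and b are fitted by plain gradient descent on the average log loss. The learning rate, epoch count, and toy data (hours studied versus pass/fail) are arbitrary assumptions; practical libraries use faster optimizers and usually add regularization.

```python
import math

def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_regression(xs, ys, learning_rate=0.1, epochs=5000):
    """Fit p(y = 1 | x) = sigmoid(w * x + b) by gradient descent on the mean log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]  # p - y per example
        # Gradients of the mean log loss with respect to w and b.
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical data: hours studied -> passed (1) or failed (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
passed = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]
w, b = fit_logistic_regression(hours, passed)
print(f"P(pass | 1 hour) = {sigmoid(w * 1.0 + b):.2f}")
print(f"P(pass | 4.5 hours) = {sigmoid(w * 4.5 + b):.2f}")
```

Thresholding the probability at 0.5 turns it into the binary yes/no prediction described above.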

b) Decision Trees for Classification:

- Description: Decision trees are used for classification tasks, with each leaf node representing a class label.

- Use Cases: Customer churn prediction, sentiment analysis.
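Classification trees choose each split by how much it purifies the class labels. One common criterion, used by CART, is Gini impurity; the Python sketch below computes it for a node and for a candidate split. The churn labels are made up for illustration.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions (0 means pure)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def split_impurity(left, right):
    """Weighted Gini impurity of a split; a tree picks the split that minimizes it."""
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

parent = ["churn", "churn", "stay", "stay"]
print(gini(parent))                                          # 0.5
print(split_impurity(["churn", "churn"], ["stay", "stay"]))  # 0.0: pure leaves
print(split_impurity(["churn", "stay"], ["churn", "stay"]))  # 0.5: no improvement
```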

c) Random Forest:

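- Description: An ensemble of decision trees for classification, each trained on a random sample of the data and features; taking a majority vote of their predictions reduces overfitting and usually improves accuracy compared with a single tree.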

- Use Cases: Image classification, disease diagnosis.

d) Support Vector Machines (SVM):

- Description: Effective for binary and multi-class classification by finding hyperplanes that maximize the margin between classes.

- Use Cases: Handwriting recognition, image classification.

e) K-Nearest Neighbors (KNN) Classification:

- Description: Assigns the majority class among the k-nearest neighbors of a new data point.

- Use Cases: Recommender systems, text categorization.
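A minimal Python sketch of KNN classification (Python 3.8+ for `math.dist`): find the k nearest training points by Euclidean distance and return their most common label. The two-cluster data is invented; with two classes an odd k such as 3 avoids voting ties.

```python
import math
from collections import Counter

def knn_classify(train, query, k=3):
    """Return the majority label among the k training points nearest to query."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical 2-D points in two clusters.
points = [
    ((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"),
    ((6, 6), "B"), ((6, 7), "B"), ((7, 6), "B"),
]
print(knn_classify(points, (2, 2)))  # A
print(knn_classify(points, (6, 5)))  # B
```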

f) Naive Bayes:

- Description: Based on Bayes’ theorem, with the strong (“naive”) assumption that features are independent of each other given the class.

- Use Cases: Spam detection, sentiment analysis.
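A from-scratch Python sketch of multinomial Naive Bayes for spam detection: it counts word frequencies per label, then scores a message as log P(label) plus the sum of log P(word | label), which is where the independence assumption enters. Add-one (Laplace) smoothing is an assumed design choice to avoid zero probabilities; the function names and the four training messages are hypothetical.

```python
import math
from collections import Counter

def train_naive_bayes(documents):
    """Count labels and per-label word frequencies from (words, label) pairs."""
    label_counts = Counter(label for _, label in documents)
    word_counts = {label: Counter() for label in label_counts}
    for words, label in documents:
        word_counts[label].update(words)
    vocabulary = {word for words, _ in documents for word in words}
    return label_counts, word_counts, vocabulary

def predict_naive_bayes(model, words):
    """Pick the label maximizing log P(label) + sum of log P(word | label)."""
    label_counts, word_counts, vocabulary = model
    n_documents = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / n_documents)  # prior
        n_words = sum(word_counts[label].values())
        for word in words:
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Naive assumption: each word contributes independently given the label.
            # Add-one smoothing avoids log(0) for word/label pairs never seen together.
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

documents = [
    ("win money now".split(), "spam"),
    ("free money offer".split(), "spam"),
    ("meeting agenda attached".split(), "ham"),
    ("lunch meeting tomorrow".split(), "ham"),
]
model = train_naive_bayes(documents)
print(predict_naive_bayes(model, "free money".split()))        # spam
print(predict_naive_bayes(model, "meeting tomorrow".split()))  # ham
```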

g) Gradient Boosting (e.g., XGBoost, LightGBM):

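- Description: Builds an ensemble of decision trees sequentially, with each new tree trained to correct the errors (the gradient of the loss) of the trees built so far, so that many weak learners combine into a strong one.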

- Use Cases: Click-through rate prediction, anomaly detection.
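A minimal Python sketch of the boosting loop, assuming squared-error loss on a single feature: start from a constant prediction, repeatedly fit a depth-1 tree to the current residuals (for squared error these are exactly the negative gradient), and add a scaled-down copy of it to the ensemble. This regression form is chosen for brevity; classification variants fit trees to the gradient of a classification loss such as log loss instead. Names, data, and hyperparameters are illustrative assumptions, not the internals of XGBoost or LightGBM.

```python
def fit_regression_stump(xs, targets):
    """Depth-1 regression tree fitted to targets; assumes at least two distinct xs."""
    pairs = sorted(zip(xs, targets))
    best = None  # (squared error, threshold, left value, right value)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        t = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [r for x, r in pairs if x <= t]
        right = [r for x, r in pairs if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm

def fit_gradient_boosting(xs, ys, n_rounds=50, learning_rate=0.1):
    """Boost stumps on squared error: each new stump is fitted to the current residuals."""
    base = sum(ys) / len(ys)  # initial constant prediction
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # For squared error, the negative gradient is simply y - current prediction.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, residuals))
    return predict

xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9]
model = fit_gradient_boosting(xs, ys)
print([round(model(x), 2) for x in xs])
```

The small `learning_rate` makes each weak learner correct only part of the remaining error, which is the usual trade of more rounds for less overfitting.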

h) Neural Networks (Deep Learning):

- Description: Deep learning models with many layers, used for complex classification tasks.

- Use Cases: Image recognition, natural language processing.

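i) AdaBoost:

- Description: An ensemble technique that trains weak classifiers one after another, increasing the weight of training examples that earlier classifiers misclassified, and combines them in a weighted vote to create a strong classifier.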

- Use Cases: Face detection, object recognition.
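The reweighting step at the heart of discrete (binary) AdaBoost fits in a few lines of Python. Given the current example weights and which examples a weak classifier got right, it computes the classifier's vote weight α = ½ ln((1 − error) / error), then scales misclassified examples up and correct ones down. This sketch assumes 0 < error < 0.5 and omits training the weak classifiers themselves; the function name and numbers are illustrative.

```python
import math

def adaboost_round(weights, correct):
    """One AdaBoost reweighting step for a single weak classifier.

    weights: current example weights (summing to 1).
    correct: whether the weak classifier got each example right.
    Returns the classifier's vote weight alpha and the renormalized example weights.
    """
    error = sum(w for w, ok in zip(weights, correct) if not ok)  # weighted error
    alpha = 0.5 * math.log((1 - error) / error)  # more accurate -> bigger vote
    # Misclassified examples are scaled by e^alpha, correct ones by e^-alpha.
    new = [w * math.exp(-alpha if ok else alpha) for w, ok in zip(weights, correct)]
    total = sum(new)
    return alpha, [w / total for w in new]

weights = [0.25] * 4
alpha, weights = adaboost_round(weights, [True, True, True, False])
print(round(alpha, 3), [round(w, 3) for w in weights])  # 0.549 [0.167, 0.167, 0.167, 0.5]
```

After the update the misclassified example carries half of the total weight, so the next weak classifier is pushed to focus on it.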

j) Multinomial Logistic Regression (Softmax Regression):

- Description: Extends logistic regression to handle multi-class classification problems.

- Use Cases: Image classification, natural language processing.
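The step that turns logistic regression into a multi-class model is the softmax function, which converts one raw score per class into probabilities that sum to 1. A minimal Python sketch; the scores and class names are hypothetical.

```python
import math

def softmax(scores):
    """Turn one raw score per class into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # hypothetical scores for classes cat, dog, bird
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The predicted class is the one with the highest probability, here the first.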

k) Linear Discriminant Analysis (LDA):

- Description: Reduces dimensionality while preserving class separability in multi-class problems.

- Use Cases: Medical diagnosis, facial recognition.

Advantages and Disadvantages of Supervised Learning:

Supervised learning, like any machine learning paradigm, comes with its own set of advantages and disadvantages. Understanding these can help you decide when to use supervised learning and how to address its limitations. Here are the advantages and disadvantages of supervised learning:

Advantages of Supervised Learning:

- Explicit Feedback: Supervised learning relies on labeled data, which provides explicit feedback on the model’s predictions. This feedback is valuable for model training and improvement.

- Predictive Accuracy: Supervised learning models can achieve high predictive accuracy when trained on high-quality, representative data. They are effective in tasks like classification and regression.

- Generalization: Well-trained supervised models can generalize their knowledge to make accurate predictions on new, unseen data points, making them suitable for real-world applications.

- Interpretability: Some supervised learning algorithms, like linear regression and decision trees, provide interpretable models that allow users to understand the relationships between input features and predictions.

- Wide Range of Applications: Supervised learning can be applied to a wide range of domains, including healthcare, finance, natural language processing, computer vision, and more.

- Availability of Tools and Libraries: There are numerous tools, libraries (e.g., scikit-learn, TensorFlow, PyTorch), and resources available for implementing and experimenting with supervised learning algorithms.

Disadvantages of Supervised Learning:

- Data Labeling Requirement: Supervised learning relies on labeled data, which can be expensive and time-consuming to obtain, especially for large datasets.

- Limited to Labeled Data: The model makes reliable predictions only on data similar to what it was trained on, which limits its ability to handle novel or unexpected situations.

- Bias and Noise in Labels: If labeled data contains biases or errors, the model may learn and perpetuate those biases, leading to unfair or inaccurate predictions.

- Overfitting: There’s a risk of overfitting, where the model learns the training data too well, capturing noise rather than the underlying patterns. Regularization techniques are often required to mitigate this.

- Feature Engineering: Selecting and engineering relevant features is a crucial step in building effective supervised learning models. Poor feature selection can lead to suboptimal performance.

- Scalability: Training complex models with large datasets can be computationally expensive and time-consuming, requiring substantial computing resources.

- Limited to Labeled Data Distribution: Supervised models are constrained by the distribution of labeled data and may not perform well when faced with data from a different distribution.

- Privacy Concerns: In some applications, the use of labeled data may raise privacy concerns, as it can reveal sensitive information about individuals.

- Imbalanced Data: When dealing with imbalanced datasets (e.g., rare disease detection), supervised models may struggle to predict minority classes accurately.

- Concept Drift: Over time, the relationship between input features and the target variable may change (concept drift). Supervised models may require periodic retraining to adapt to these changes.
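The imbalanced-data problem is easy to see with plain arithmetic. In the hypothetical Python example below, a model that always predicts the majority class scores 99% accuracy on a rare-disease dataset while detecting none of the actual cases, which is why metrics such as recall are usually checked alongside accuracy. The data and function name are invented for illustration.

```python
def accuracy_and_recall(y_true, y_pred, positive=1):
    """Return (accuracy, recall for the positive class)."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_positives = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    actual_positives = sum(t == positive for t in y_true)
    return correct / len(y_true), true_positives / actual_positives

# Hypothetical screening data: 990 healthy patients (0) and 10 with a rare disease (1).
y_true = [0] * 990 + [1] * 10
y_pred = [0] * 1000  # a useless model that always predicts "healthy"
print(accuracy_and_recall(y_true, y_pred))  # (0.99, 0.0)
```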

Despite its drawbacks, supervised learning remains a powerful approach in machine learning.

In conclusion, supervised machine learning is a cornerstone of artificial intelligence, providing us with a powerful tool to harness the knowledge hidden within data. Guided by labeled examples, it enables us to build predictive models capable of making accurate classifications and precise predictions. Its versatility spans a wide range of domains, from healthcare and finance to natural language processing and computer vision. While the need for labeled data and the potential for biases remain challenges, supervised learning continues to help us distill valuable insights, drive informed decision-making, and propel innovation across industries. As the field advances and these challenges are addressed, supervised learning will keep playing a pivotal role in shaping the future of AI and enabling new applications.

Assistant Teacher at Zinzira Pir Mohammad Pilot School and College