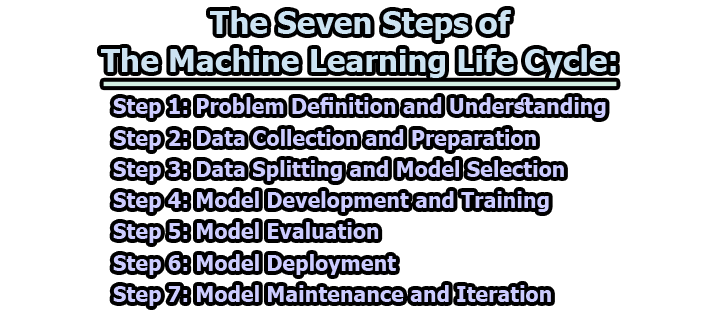

The Seven Steps of the Machine Learning Life Cycle

The machine learning (ML) life cycle is a systematic process that covers the stages involved in developing and deploying machine learning models, from problem definition through deployment and maintenance. Following a structured life cycle keeps development efficient and makes the resulting models more reliable. This guide walks through the seven steps of that life cycle.

Step 1: Problem Definition and Understanding

At the heart of every successful machine learning project lies a clear understanding of the problem to be solved. In this initial step, the focus is on defining the problem, identifying the goals, and understanding the business context. This involves collaboration between domain experts, stakeholders, and data scientists.

a) Problem Statement: Clearly define the problem you want to address using machine learning. Is it a classification, regression, clustering, or some other type of problem?

b) Objectives: Determine the objectives of your machine learning project. What are you aiming to achieve? This could be improving accuracy, reducing costs, enhancing user experience, etc.

c) Scope and Constraints: Define the scope of the project and any constraints you need to work within. This includes available resources, data, time, and any technical limitations.

d) Data Availability: Assess the availability of data needed for the project. Is the data accessible, relevant, and of sufficient quality? Data is the cornerstone of any ML project, so its availability and quality are crucial.

e) Success Metrics: Establish the metrics that will be used to measure the success of the model. This could be accuracy, precision, recall, F1-score, etc., depending on the problem type.

Step 2: Data Collection and Preparation

Data is the fuel that powers machine learning models. In this step, the focus shifts towards collecting and preparing the data necessary for building and training the model.

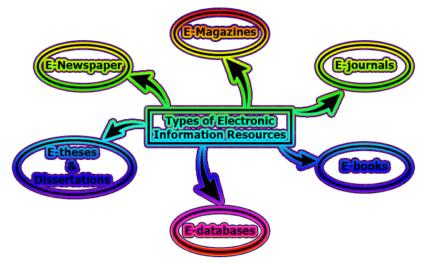

a) Data Collection: Gather relevant data from various sources. This could involve structured data from databases, unstructured data from text or images, or even external data from APIs.

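b) Data Cleaning: Clean the collected data by resolving inconsistencies, removing duplicates, handling missing values (by imputing or dropping them), and investigating outliers, which may be errors to remove or genuine extreme values to keep. This ensures that the data is of high quality and suitable for analysis.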

c) Data Exploration: Perform exploratory data analysis (EDA) to understand the distribution of data, identify patterns, and gain insights. Visualization techniques can aid in this process.

d) Feature Engineering: Transform raw data into meaningful features that the model can understand. This involves selecting, extracting, and transforming relevant attributes from the data.

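e) Data Preprocessing: Prepare the data for model training by scaling numeric features (standardizing or normalizing them) and encoding categorical variables. Compute the scaling statistics and encodings from the training data only, then apply them unchanged to the validation and test data, so that no information leaks from the held-out sets. This step helps in improving model performance and convergence.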
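The cleaning and preprocessing items above can be illustrated with a minimal sketch using only the Python standard library. The records, column names, and helper functions are hypothetical, and median imputation and z-score standardization are just one reasonable choice among several. For brevity the statistics are computed over the whole toy dataset; in a real project they would normally come from the training portion only.

```python
from statistics import mean, median, pstdev

# Hypothetical raw records; None marks a missing value.
raw_rows = [
    {"age": 25, "income": 40000.0, "city": "Dhaka"},
    {"age": 32, "income": None, "city": "Khulna"},
    {"age": 25, "income": 40000.0, "city": "Dhaka"},  # exact duplicate
    {"age": 47, "income": 85000.0, "city": "Dhaka"},
]


def drop_duplicates(rows):
    """Keep the first occurrence of each identical record."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def impute_median(rows, column):
    """Replace missing values in a numeric column with the column median."""
    fill = median(r[column] for r in rows if r[column] is not None)
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]


def standardize(rows, column):
    """Rescale a numeric column to zero mean and unit variance (z-score)."""
    values = [r[column] for r in rows]
    mu, sigma = mean(values), pstdev(values)
    return [{**r, column: (r[column] - mu) / sigma if sigma else 0.0} for r in rows]


def one_hot(rows, column):
    """Replace a categorical column with one 0/1 column per category."""
    categories = sorted({r[column] for r in rows})
    encoded = []
    for r in rows:
        new_row = {k: v for k, v in r.items() if k != column}
        for cat in categories:
            new_row[f"{column}_{cat}"] = 1 if r[column] == cat else 0
        encoded.append(new_row)
    return encoded


rows = drop_duplicates(raw_rows)
rows = impute_median(rows, "income")
for col in ("age", "income"):
    rows = standardize(rows, col)
rows = one_hot(rows, "city")

for row in rows:
    print(row)
```

In practice a library such as pandas or scikit-learn would usually handle these steps; the plain-Python version is only meant to make each transformation visible.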

Step 3: Data Splitting and Model Selection

Before diving into model development, it’s essential to split the data into training, validation, and testing sets. This enables us to train the model on one subset, tune hyperparameters on another, and evaluate its performance on a completely separate subset.

a) Data Splitting: Divide the data into three sets: training, validation, and testing. The typical split ratio might be 70% for training, 15% for validation, and 15% for testing.

b) Model Selection: Choose a set of candidate algorithms or models that are suitable for solving the problem. Consider factors like algorithm complexity, interpretability, and expected performance.

c) Hyperparameter Tuning: Adjust the hyperparameters of the selected models using the validation set. Hyperparameters control the behavior of the algorithm and can significantly impact its performance.

d) Baseline Model: Create a baseline model using default hyperparameters, ideally before any tuning, to have a point of reference for evaluating the effectiveness of later improvements.
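As a rough illustration of the 70/15/15 split mentioned above, the sketch below shuffles the data once with a fixed seed and slices it into three parts. The function name `train_val_test_split` and its parameters are assumptions made for this example, not part of any particular library.

```python
import random


def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle a copy of `items` and cut it into train/validation/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_val = round(len(shuffled) * val_frac)
    n_test = round(len(shuffled) * test_frac)
    n_train = len(shuffled) - n_val - n_test
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


data = list(range(100))  # stand-in for 100 labelled examples
train, val, test = train_val_test_split(data)
print(len(train), len(val), len(test))
```

A plain random split like this suits independent examples with balanced classes. Imbalanced classes or time-ordered data usually call for a stratified or chronological split instead.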

Step 4: Model Development and Training

This step involves building and training machine learning models using the training data set. The goal is to create a model that learns patterns from the data and generalizes well to make predictions on unseen data.

a) Model Architecture: Choose the architecture of the model based on the problem type. This could involve selecting the number of layers and units for neural networks or the type of algorithm for traditional ML models.

b) Model Building: Construct the model by assembling the chosen architecture. This may involve configuring layers, specifying activation functions, and defining loss functions.

c) Model Training: Train the model using the training data. During training, the model learns to minimize the chosen loss function by adjusting its parameters.

d) Validation and Early Stopping: Monitor the model’s performance on the validation set during training. Apply early stopping to prevent overfitting if the validation performance plateaus or deteriorates.
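The training loop and early stopping described above can be sketched with a deliberately tiny model: a straight line fitted by gradient descent on mean squared error. The data, learning rate, `patience`, and `min_delta` values are all illustrative assumptions. The point is the pattern of checking the validation loss after every epoch and stopping once it has not improved for a while.

```python
def mse(w, b, points):
    """Mean squared error of the line y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in points) / len(points)


def train_with_early_stopping(train, val, lr=0.1, max_epochs=2000,
                              patience=10, min_delta=1e-6):
    """Gradient descent that stops when validation loss stops improving."""
    w, b = 0.0, 0.0
    best_loss, best_w, best_b = float("inf"), w, b
    stale_epochs = 0
    epochs_run = 0
    for _ in range(max_epochs):
        epochs_run += 1
        n = len(train)
        # Gradients of the training MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= lr * grad_w
        b -= lr * grad_b

        val_loss = mse(w, b, val)
        if val_loss < best_loss - min_delta:
            best_loss, best_w, best_b = val_loss, w, b  # remember the best model
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                break  # validation loss has plateaued or worsened
    return best_w, best_b, best_loss, epochs_run


# Hypothetical data: y = 2x + 1 plus a small deterministic perturbation.
points = [(i / 10, 2 * (i / 10) + 1 + ((i * 7) % 5 - 2) * 0.02) for i in range(20)]
train_points, val_points = points[0::2], points[1::2]

w, b, val_loss, epochs_run = train_with_early_stopping(train_points, val_points)
print(f"w={w:.3f} b={b:.3f} val_loss={val_loss:.5f} epochs={epochs_run}")
```

The function returns the parameters from the best validation epoch rather than the last one, which is the usual reason for keeping a checkpoint alongside the patience counter.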

Step 5: Model Evaluation

Evaluating the model is crucial to determine its performance on unseen data. This step uses the held-out test set, which played no part in training or tuning, to assess how well the model generalizes and whether it meets the success metrics defined in Step 1.

a) Performance Metrics: Evaluate the model’s performance using metrics appropriate to the problem type. Common choices are accuracy, precision, recall, and F1-score for classification, and mean squared error or mean absolute error for regression.

b) Confusion Matrix: For classification problems, create a confusion matrix to visualize the true positive, true negative, false positive, and false negative predictions.

c) Cross-Validation: Perform k-fold cross-validation to assess the model’s stability and robustness. This involves splitting the training data into k subsets (folds) and training the model k times, each time holding out a different fold for validation and training on the remaining k-1 folds.

d) Bias and Fairness Assessment: Check for bias in the model’s predictions and ensure fairness across different groups in the dataset.
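To make the evaluation items above concrete, here is a small sketch that derives the four confusion-matrix counts and the usual classification metrics from them, followed by a generator of k-fold index splits. The labels and predictions are invented for illustration, and the function names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary classification problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1 computed from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx); each sample is validated exactly once."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


# Invented labels and predictions for eight test examples.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(*counts)
print(counts, metrics)

folds = list(k_fold_indices(10, 3))
for train_idx, val_idx in folds:
    print("validate on", val_idx)
```

The fold generator assumes the data has already been shuffled. The same metric functions can be applied separately to each demographic group as a first, rough fairness check.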

Step 6: Model Deployment

Once a satisfactory model is developed and evaluated, it’s time to deploy it for real-world use. Model deployment involves integrating the model into the production environment so it can start making predictions on new data.

a) Deployment Strategy: Decide on the deployment strategy based on the project’s requirements. Options include batch processing, real-time APIs, or embedded systems.

b) Scalability and Performance: Optimize the model for deployment by considering factors like response time, memory usage, and throughput.

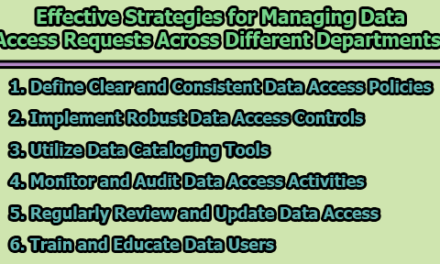

c) Monitoring and Maintenance: Implement monitoring mechanisms to track the model’s performance and health in production. Regularly update and retrain the model to account for changes in the underlying data distribution.

d) A/B Testing: If possible, perform A/B testing by serving multiple versions of the model to separate groups of users and comparing their results on the same success metric, to confirm that the new model actually outperforms the current one.
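One common way to judge an A/B test is a two-proportion z-test on the success rate of each variant. The sketch below assumes that a "success" is a correct or accepted prediction and that the two user groups are independent. The counts are made up, and the function name is hypothetical.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # both variants always succeed or always fail
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p_value


# Hypothetical traffic: current model A versus candidate model B.
z, p_value = two_proportion_z_test(success_a=820, n_a=1000,
                                   success_b=860, n_b=1000)
print(f"z={z:.2f} p={p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone. Whether the improvement is large enough to justify a rollout remains a business decision.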

Step 7: Model Maintenance and Iteration

Machine learning models are not static; they require ongoing maintenance and improvements to remain relevant and effective over time. This final step focuses on continuously improving the model’s performance and adapting it to changing circumstances.

a) Feedback Loop: Collect feedback from users and stakeholders to identify areas of improvement. This feedback can help in refining the model’s predictions and addressing potential shortcomings.

b) Data Updates: Regularly update the model’s training data to account for shifts in the data distribution. Outdated data can lead to degraded performance.

c) Model Retraining: Periodically retrain the model using updated data and improved algorithms. This ensures that the model stays up-to-date and continues to provide accurate predictions.

d) Model Versioning: Maintain a versioning system for models to keep track of changes, updates, and improvements over time.
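The maintenance loop above can be sketched as a simple drift check that triggers a retraining decision, plus a minimal in-memory version log. The shift measure (change in mean, in units of the reference standard deviation), the 0.5 threshold, and the `ModelRegistry` class are all illustrative assumptions. Production systems typically use richer drift statistics and a dedicated model registry.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


def mean_shift_score(reference, recent):
    """Absolute shift of the recent mean, in reference standard deviations."""
    sigma = pstdev(reference)
    shift = abs(mean(recent) - mean(reference))
    if sigma == 0:
        return 0.0 if shift == 0 else float("inf")
    return shift / sigma


def needs_retraining(reference, recent, threshold=0.5):
    """Flag drift when the feature mean has moved more than `threshold` sigmas."""
    return mean_shift_score(reference, recent) > threshold


@dataclass(frozen=True)
class ModelVersion:
    version: int
    data_snapshot: str  # hypothetical label for the training data used
    note: str


class ModelRegistry:
    """Append-only log of model versions (in memory, for illustration only)."""

    def __init__(self):
        self._versions = []

    def register(self, data_snapshot, note):
        entry = ModelVersion(len(self._versions) + 1, data_snapshot, note)
        self._versions.append(entry)
        return entry

    def latest(self):
        return self._versions[-1]


# Hypothetical values of one input feature at training time and in production.
reference = [10, 12, 11, 13, 9, 10, 12, 11]
recent_stable = [11, 10, 12, 11]
recent_shifted = [14, 15, 13, 16]

registry = ModelRegistry()
registry.register("snapshot-1", "initial model")

if needs_retraining(reference, recent_shifted):
    # Retraining itself is out of scope here; only the new version is recorded.
    registry.register("snapshot-2", "retrained after input drift")

print(registry.latest())
```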

In conclusion, the machine learning life cycle is a systematic approach that guides the development of machine learning models from problem definition to deployment and beyond. Its seven steps are Problem Definition and Understanding, Data Collection and Preparation, Data Splitting and Model Selection, Model Development and Training, Model Evaluation, Model Deployment, and Model Maintenance and Iteration. By following them, data scientists and practitioners can build robust machine learning solutions that address real-world challenges and deliver value to businesses and users alike. The steps are interconnected: a weak problem definition or poor data in the early stages limits what every later stage can achieve, so careful work at each step contributes to the success of the whole project.

Assistant Teacher at Zinzira Pir Mohammad Pilot School and College