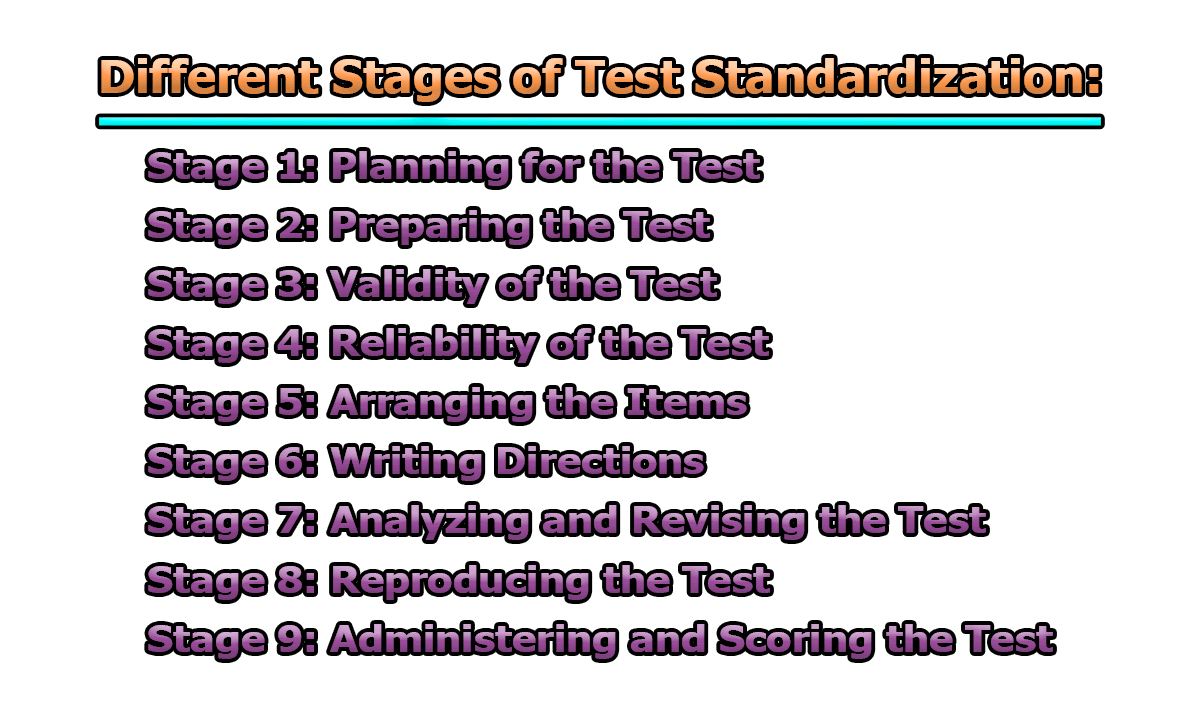

Different Stages of Test Standardization:

In educational measurement and psychological assessment, test standardization is fundamental to ensuring that a test is valid, reliable, and applicable to its intended population. Standardization involves a systematic sequence of stages that guide test developers from conceptualization to administration. Following a structured approach ensures fairness, objectivity, and consistency in testing outcomes. The rest of this article explores the different stages of test standardization.

Stage 1: Planning for the Test:

Planning is the foundation of test standardization. This stage ensures that the test is purpose-driven, relevant to its target group, and organized to measure exactly what it intends to measure (Anastasi & Urbina, 1997).

1.1 Define the Purpose of the Test:

- The first task is to clarify why the test is being developed.

- Is it meant for diagnosis (e.g., identifying learning difficulties), selection (e.g., university entrance exams), placement (e.g., language level), certification (e.g., professional licensing), or achievement measurement (e.g., end-of-course exam)?

Example: The IELTS test is designed to assess English language proficiency for study or immigration purposes.

1.2 Identify the Target Population:

- Clearly define who the test is for: age range, grade level, cultural context, educational background, and language skills.

- This affects vocabulary level, context, test length, and item format.

Example: A spelling test for 2nd graders must use age-appropriate words; a professional licensing exam will use technical terminology suitable for adults in that field.

1.3 Formulate Clear Objectives:

- Translate the test’s purpose into measurable learning outcomes or psychological traits.

- Objectives guide the type of items created and the level of cognitive skills targeted (knowledge, application, analysis, synthesis, etc.).

Example: An objective for a math test might be: Students will accurately solve multi-step algebraic equations.

1.4 Develop a Test Blueprint:

- A detailed test blueprint or table of specifications (TOS) maps content areas to item types and cognitive levels.

- It ensures content coverage is balanced and avoids overemphasis on trivial topics.

Example: A high school biology test blueprint might specify 30% on cell biology, 30% on genetics, and 40% on ecology, with items divided into recall, application, and analysis levels.
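The percentages in a blueprint can be converted into item counts with simple arithmetic. The sketch below is a minimal illustration, assuming a 50-item test and a 40/40/20 split across recall, application, and analysis. Neither figure comes from a published blueprint, and the function name `allocate_items` is hypothetical.

```python
def allocate_items(total_items, content_pct, level_pct):
    """Split total_items across the content-by-level cells of a blueprint.

    content_pct and level_pct map names to whole-number percentages,
    and each mapping must sum to 100.
    """
    if sum(content_pct.values()) != 100 or sum(level_pct.values()) != 100:
        raise ValueError("percentages must sum to 100")

    # Exact share of each cell, kept as an integer numerator over 10,000
    # so that no floating-point rounding is involved.
    shares = {
        (content, level): total_items * c_pct * l_pct
        for content, c_pct in content_pct.items()
        for level, l_pct in level_pct.items()
    }

    # Round every cell down, then give the leftover items to the cells
    # with the largest remainders so the counts add up to total_items.
    counts = {cell: share // 10_000 for cell, share in shares.items()}
    leftover = total_items - sum(counts.values())
    by_remainder = sorted(shares, key=lambda cell: shares[cell] % 10_000, reverse=True)
    for cell in by_remainder[:leftover]:
        counts[cell] += 1
    return counts


# Content weights from the biology example; level weights are assumed.
content_pct = {"cell biology": 30, "genetics": 30, "ecology": 40}
level_pct = {"recall": 40, "application": 40, "analysis": 20}

blueprint = allocate_items(50, content_pct, level_pct)
for (content, level), n_items in blueprint.items():
    print(f"{content:13s} {level:12s} {n_items}")
```

With these assumed weights, ecology recall receives 8 of the 50 items and genetics analysis receives 3. Changing the total or the weights shows quickly whether any cell ends up with too few items to sample its content meaningfully.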

Stage 2: Preparing the Test:

This stage involves creating actual test items that reflect the plan. Items must be valid, clear, culturally fair, and suitable for the target group (Kubiszyn & Borich, 2016).

2.1 Develop Test Items:

- Write clear, precise, and unambiguous questions.

- Avoid confusing wording, double negatives, or tricky distractors (wrong answer choices).

Example:

- Poor: Which of these is not unhelpful when studying?

- Improved: Which of these is helpful when studying?

2.2 Select Appropriate Item Formats: Use formats suited to the skills being measured:

- Multiple-choice: Good for broad content sampling and objective scoring.

- True/False: Easy to write but prone to guessing.

- Short answer: Suitable for recalling facts.

- Essay: Good for assessing higher-order thinking.

- Performance tasks: Useful for skills that require demonstration.

Example: A driving license test includes multiple-choice questions for rules and a practical driving test for performance.

2.3 Ensure Cultural and Language Fairness:

- Check that items are free from cultural bias or stereotypes.

- Avoid idioms, slang, or references unfamiliar to some test-takers.

Example: Instead of asking “Which baseball team won the World Series in 2020?”, a more universally relevant question could be about global current events or general science.

2.4 Overproduce Items:

- Write more items than needed to allow selection of the best ones.

- Poorly performing items can be discarded after pilot testing.

Example: To finalize a 50-item test, write about 70–80 items to account for possible eliminations.

2.5 Peer Review:

- Have subject matter experts review draft items for accuracy, clarity, and alignment with objectives.

- Revise items based on feedback before pilot testing.

Example: A panel of science teachers reviews a new standardized science test to ensure factual correctness and grade-level appropriateness.

Stage 3: Validity of the Test:

Validity is the most important criterion for any test: it must measure what it is supposed to measure (Cohen & Swerdlik, 2018).

3.1 Content Validity:

- Ensures the test fully represents the domain it claims to cover.

- Experts match test items against the blueprint or curriculum.

Example: A math exam for geometry should include items on angles, triangles, and circles if these are part of the syllabus — not just lines and points.

3.2 Construct Validity:

- Confirms the test measures the intended theoretical concept.

- Uses empirical studies, expert judgment, or statistical methods like factor analysis.

Example: For an emotional intelligence test, items should reflect self-awareness, self-regulation, motivation, empathy, and social skills.

3.3 Criterion-Related Validity: Compares test scores to an external standard or criterion:

- Concurrent Validity: Correlates test results with other established measures taken at the same time.

- Predictive Validity: Determines how well the test predicts future performance.

Example: SAT scores are expected to predict first-year college GPA (predictive validity).
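Criterion-related validity is usually summarized as a correlation coefficient between test scores and the criterion. The sketch below computes a Pearson correlation by hand. The scores and GPAs are invented for illustration only, and `pearson_r` is a hypothetical helper name.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dev_x = [a - mean_x for a in x]
    dev_y = [b - mean_y for b in y]
    # Sum of cross-products divided by the product of the two spreads.
    covariation = sum(a * b for a, b in zip(dev_x, dev_y))
    spread = sqrt(sum(a * a for a in dev_x) * sum(b * b for b in dev_y))
    if spread == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return covariation / spread


# Invented data: admission test scores and later first-year GPA.
test_scores = [1050, 1200, 1310, 1420, 1500]
first_year_gpa = [2.6, 3.1, 2.9, 3.5, 3.7]

validity_coefficient = pearson_r(test_scores, first_year_gpa)
print(f"predictive validity coefficient: {validity_coefficient:.2f}")
```

For concurrent validity the second list would hold scores on an established measure taken at about the same time. For predictive validity it holds the outcome collected later.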

3.4 Pilot Testing and Validation Studies:

- Try out the draft test on a sample similar to the target population.

- Analyze item performance and correlations with external measures.

- Revise the test to improve validity evidence.

Example: A new career aptitude test is administered to 200 high school students, and results are compared with their academic records and counselor assessments.

Stage 4: Reliability of the Test:

Once validity is ensured, it is essential to confirm that the test yields consistent, dependable results every time it is administered under similar conditions (Miller, Linn, & Gronlund, 2013).

4.1 Types of Reliability:

✅ Test-Retest Reliability:

- Measures stability over time.

- The same test is given to the same group twice, with a time gap.

- The correlation between scores indicates reliability.

Example: A reading comprehension test given to students in September and again in October should yield similar results if no learning intervention has occurred.

✅ Parallel-Forms Reliability:

- Two equivalent forms of the test are developed and administered to the same group.

- The correlation between forms shows consistency.

Example: A university English exam might have two forms (Form A and Form B) to prevent cheating — both should yield comparable results.

✅ Internal Consistency Reliability:

- Checks consistency of results within the test itself.

- Measures whether items intended to assess the same concept produce similar scores.

- Methods include split-half reliability and Cronbach’s alpha.

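Example: If a test section measures mathematical problem-solving, students who do well on one item in that section should tend to do well on the other items too; in other words, scores on the related items should correlate positively with one another.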
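Cronbach's alpha can be computed from the item variances and the variance of the total scores: alpha = k / (k - 1) × (1 - sum of item variances / variance of totals), where k is the number of items. The sketch below is a minimal version using only the standard library. The response matrix is invented for illustration, and `cronbach_alpha` is a hypothetical helper name.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a matrix with one row per test-taker and one column per item."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two test-takers")
    item_columns = list(zip(*responses))           # one tuple of scores per item
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


# Invented data: 5 test-takers, 4 problem-solving items scored 0 to 5.
responses = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [5, 5, 4, 4],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Values closer to 1 indicate that the items rank test-takers in a similar way. What counts as acceptable depends on the stakes of the test, so any cut-off should be treated as a convention rather than a rule.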

✅ Inter-Rater Reliability:

- Important for subjective tests (e.g., essay scoring).

- Ensures consistency between different scorers.

Example: Two teachers marking the same student essay should assign similar grades if clear rubrics are used.
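One common way to quantify agreement between two scorers is Cohen's kappa, which corrects the raw agreement rate for the agreement expected by chance. The source does not name a specific statistic, so kappa is shown here as one option among several. The grades are invented, and `cohens_kappa` is a hypothetical helper name.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who graded the same scripts."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must grade the same non-empty set of scripts")
    n = len(rater_a)
    # Proportion of scripts on which the two raters gave the same grade.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's grade frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[grade] * counts_b[grade] for grade in counts_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single grade")
    return (observed - expected) / (1 - expected)


# Invented data: grades two teachers gave to the same six essays.
teacher_1 = ["A", "B", "B", "C", "A", "B"]
teacher_2 = ["A", "B", "C", "C", "A", "A"]

print(round(cohens_kappa(teacher_1, teacher_2), 2))  # 0.52
```

Here the teachers agree on 4 of 6 essays (about 67%), but because some of that agreement would occur by chance, kappa is lower at 0.52. Kappa treats grades as unordered categories, so a weighted variant or a correlation may suit numeric rubric scores better.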

4.2 Features Ensuring Reliability:

✅ Objectivity:

- Scoring must be free from scorer bias.

- Use answer keys, rubrics, or automated scoring.

Example: Multiple-choice tests are highly objective compared to essays.

✅ Comprehensiveness:

- The test must cover the full breadth of intended content.

- Narrow sampling can lower reliability.

Example: A final exam that only tests two chapters out of ten will yield unreliable generalizations.

✅ Simplicity:

- Clear wording and unambiguous questions reduce misunderstanding.

Example: Replace complex phrasing with plain language: “Describe the biogeochemical cycle” instead of “Deliberate upon the multi-phase ecological nutrient transference process.”

✅ Scorability:

- Items should be easy to score consistently.

- Objective items improve scorability.

Example: A spelling test with single-word responses is easier to score than open-ended paragraphs.

✅ Practicality:

- The test should be feasible in terms of time, resources, and cost.

- Overly complex scoring methods may reduce practicality.

Example: National standardized tests use machine-readable answer sheets for practical large-scale scoring.

Stage 5: Arranging the Items:

Once items are finalized for validity and reliability, they must be logically and systematically arranged to optimize test-taker performance (Kubiszyn & Borich, 2016).

5.1 Order of Difficulty:

- Items are typically arranged from easy to hard to build confidence.

Example: A language grammar test might start with simple present tense questions, then move to complex conditional sentences.

5.2 Logical Grouping:

- Group similar item types together to avoid confusion.

Example: All multiple-choice items appear in one section; short answer questions in another.

5.3 Sectioning:

- Long tests are divided into clear sections with headings.

- This helps students navigate and manage time.

Example: A standardized science test might be divided into Biology, Chemistry, and Physics sections.

5.4 Instructions with Each Section:

- Directions must precede each section to guide the test-taker.

Example: “Answer all questions. Circle the correct option. Time: 30 minutes.”

5.5 Pilot Order Testing:

- Sometimes, test developers trial different item orders to find which sequencing reduces fatigue or anxiety.

Example: In an aptitude test, numerical reasoning may be placed before verbal reasoning if research shows this lowers test anxiety.

Stage 6: Writing Directions:

Clear, precise directions are essential to ensure all test-takers understand how to proceed uniformly (Anastasi & Urbina, 1997).

6.1 Clarity:

- Instructions must be simple and direct.

- Avoid complex sentences or ambiguous terms.

Example: “Circle the one best answer for each question.” instead of “Please indicate your preferred response selection.”

6.2 Completeness: Directions should include:

- Purpose of the test/section.

- How to mark answers.

- Time limits.

- Permissible aids (e.g., calculators).

Example: “You have 60 minutes to complete 40 questions. Use a #2 pencil only. Calculators are not allowed.”

6.3 Examples:

- Provide worked examples if the item format is complex.

Example: For matching items: “Example: 1 — C” shows how to match items correctly.

6.4 Consistency:

- Keep wording consistent throughout to avoid confusion.

Example: If you use “tick the correct box” in one section, do not switch to “check the correct square” elsewhere.

6.5 Field Testing Directions:

- Directions should be tested with a small group to ensure they are understood.

- Revise based on feedback.

Example: In pilot studies, confusing instructions can be reworded if students ask too many clarifying questions.

Stage 7: Analyzing and Revising the Test:

After the test is drafted and directions are finalized, it must be piloted and statistically analyzed to ensure that each item performs as expected (Cohen & Swerdlik, 2018). This stage strengthens the test’s validity and reliability through real-world evidence.

7.1 Pilot Testing:

- The draft test is administered to a representative sample of the target population.

- Data collected provides practical insights into item quality.

Example: A district math test is piloted with 100 students in various schools to check whether items match students’ actual knowledge and reading level.

7.2 Item Analysis: Statistical techniques help evaluate individual items for:

- Item Difficulty Index (P): Proportion of students who answered correctly. Ideal range: 30%–80%.

- Discrimination Index (D): Ability of an item to distinguish high performers from low performers. Higher discrimination = better item.

- Distractor Analysis: Checks if incorrect options are plausible — good distractors should attract weaker students.

Example: If 95% of students get an item right, it’s likely too easy. If an item has a negative discrimination index, it may be misleading or ambiguous.
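The two indices can be computed directly from a matrix of right/wrong responses. The sketch below is a minimal version that assumes the upper and lower groups are the top and bottom 27% of students by total score, a common convention that the source does not specify. The response data are invented, and `item_analysis` is a hypothetical helper name.

```python
def item_analysis(responses, group_fraction=0.27):
    """Return a (difficulty, discrimination) pair for every item.

    responses has one row per student; each entry is 1 (correct) or 0 (incorrect).
    """
    n_students = len(responses)
    n_items = len(responses[0])
    # Rank students by total score, highest first, and take the two extremes.
    ranked = sorted(responses, key=sum, reverse=True)
    group_size = max(1, round(n_students * group_fraction))
    upper, lower = ranked[:group_size], ranked[-group_size:]

    results = []
    for i in range(n_items):
        # P: proportion of all students who answered the item correctly.
        difficulty = sum(row[i] for row in responses) / n_students
        # D: correct answers in the upper group minus the lower group,
        # divided by the group size.
        upper_correct = sum(row[i] for row in upper)
        lower_correct = sum(row[i] for row in lower)
        discrimination = (upper_correct - lower_correct) / group_size
        results.append((difficulty, discrimination))
    return results


# Invented pilot data: 10 students, 4 items.
pilot = [
    [1, 1, 1, 0], [1, 1, 1, 0], [1, 1, 1, 0],
    [1, 1, 0, 0], [1, 0, 1, 0], [1, 1, 0, 0], [1, 0, 1, 0],
    [1, 0, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0],
]

results = item_analysis(pilot)
for number, (p, d) in enumerate(results, start=1):
    flags = []
    if not 0.30 <= p <= 0.80:
        flags.append("difficulty outside 0.30 to 0.80")
    if d < 0:
        flags.append("negative discrimination")
    print(f"item {number}: P={p:.2f} D={d:.2f} {'; '.join(flags)}")
```

In this invented data set, item 1 is flagged as too easy (P = 0.90) and item 4 is flagged for both very low P and negative D, which would make both candidates for revision. With a pilot sample this small the indices are unstable, so real decisions would rest on a larger group.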

7.3 Revising Items:

- Based on analysis, problematic items are edited, replaced, or removed.

- Items with unclear wording, poor distractors, or low discrimination are prime candidates for revision.

Example: If students misunderstand a question’s phrasing, it can be rewritten for clarity:

- Original: “What is not the main reason for photosynthesis?”

- Revised: “Which of these is NOT a function of photosynthesis?”

7.4 Re-Piloting (If Needed):

- After significant revisions, a second pilot may be conducted to confirm improvements.

Example: A psychological scale with revised items is re-tested to ensure construct validity remains high.

7.5 Finalizing the Item Pool:

- The final set includes only the best-performing, valid, and reliable items.

- The finalized test is then locked in for reproduction.

Stage 8: Reproducing the Test:

With the final version approved, the test must be prepared for large-scale administration in a professional, standardized format (Miller, Linn, & Gronlund, 2013).

8.1 Clear Formatting:

- Ensure the test is visually organized, easy to read, and free from typographical errors.

- Use consistent fonts, spacing, numbering, and margins.

Example: Multiple-choice questions are aligned vertically with clear answer spaces.

8.2 Printing or Digitization: Choose the best method for the context:

- Print: Hard copies for paper-based testing.

- Digital: Online delivery via secure platforms.

Example: Some national exams are still paper-based, while the SAT (fully digital since 2024) and many professional certification tests use secure computer-based systems.

8.3 Standardized Answer Sheets:

- For paper tests, prepare bubble sheets compatible with optical mark recognition (OMR) scanners.

- This reduces human error and speeds up scoring.

Example: Multiple-choice university entrance exams often use OMR sheets to handle thousands of test-takers efficiently.

8.4 Quality Control:

- Proofread final copies multiple times.

- Test digital platforms for glitches.

- Pilot a sample print run to check clarity and accuracy.

Example: Exam boards like ETS run multiple checks to catch misprints that could confuse thousands of candidates.

Stage 9: Administering and Scoring the Test:

The final stage involves delivering the test to students and scoring responses accurately under consistent conditions (Kubiszyn & Borich, 2016).

9.1 Standardized Administration:

- Ensure that all test-takers get the same instructions, environment, time limits, and materials.

- Reduce variations that might affect performance.

Example: For a standardized language exam, all centers start at the same time, under proctored supervision.

9.2 Security Measures:

- Prevent cheating or leakage by securing test papers and digital systems.

- Use trained invigilators.

Example: High-stakes tests like TOEFL have strict rules: no phones, ID checks, and video monitoring.

9.3 Scoring Procedures:

- Use objective scoring keys for closed-ended items.

- For essays or performance tasks, use detailed rubrics and trained scorers to ensure inter-rater reliability.

Example: IELTS Speaking and Writing tasks are rated by trained examiners using standardized band descriptors.

9.4 Score Reporting:

- Convert raw scores to scaled scores if needed.

- Provide clear, understandable score reports for students and stakeholders.

Example: GRE results include section scores and percentiles to compare individual performance to peers.
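Two of the reporting steps above are easy to illustrate: turning a raw score into a scaled score and into a percentile rank. The sketch below assumes a simple linear scale from 200 to 800 and a mid-rank definition of percentile. Both are illustrative assumptions rather than the method of any particular testing program (operational programs generally rely on statistical equating), and both function names are hypothetical.

```python
def scaled_score(raw, raw_max, scale_min=200, scale_max=800):
    """Map a raw score onto a reporting scale with a straight-line rule."""
    if not 0 <= raw <= raw_max:
        raise ValueError("raw score must lie between 0 and raw_max")
    return round(scale_min + (scale_max - scale_min) * raw / raw_max)


def percentile_rank(score, reference_scores):
    """Percentage of the reference group scoring below `score`, counting half of any ties."""
    below = sum(s < score for s in reference_scores)
    tied = sum(s == score for s in reference_scores)
    return 100 * (below + 0.5 * tied) / len(reference_scores)


# Invented data: raw scores of a reference group on a 40-item test.
reference_group = [12, 18, 21, 25, 25, 28, 30, 33, 35, 38]

raw = 30
print("scaled score:", scaled_score(raw, raw_max=40))
print("percentile rank:", percentile_rank(raw, reference_group))
```

Reporting both numbers serves different readers: the scaled score places the result on a fixed scale, while the percentile rank shows where the test-taker stands relative to the reference group.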

9.5 Data Archiving:

- Maintain secure records for future research, auditing, or appeals.

- Data helps in future test revisions and policy planning.

Example: National exam boards archive test responses for several years.

In conclusion, the standardization of a test is a meticulous, multi-stage process that ensures assessments are valid, reliable, and fair. By systematically planning, developing, validating, arranging, and administering tests, educators and psychologists can make informed decisions that positively impact learning and psychological evaluation. The rigorous process enhances the credibility of the test and ensures that its results are meaningful and actionable.

Frequently Asked Questions (FAQs):

What does test standardization mean in simple terms?

Test standardization is the careful process of creating, organizing, and using a test so that everyone who takes it has the same experience. This means the test is planned, developed, and given to people in the same way, with the same instructions, time limits, and scoring rules. The goal is to make sure the test results are fair, trustworthy, and can be compared from one person or group to another.

Why is standardization important for schools and organizations?

Standardization makes sure the test is fair to everyone, no matter where they take it or who grades it. Without standardization, some students might get harder questions or clearer instructions than others, which would make the scores unfair and misleading. When a test is standardized, teachers, employers, and decision-makers can trust that the scores mean the same thing for all test-takers.

How does standardization help students do better?

A well-standardized test gives clear instructions and well-planned questions that match what students have learned. It avoids confusing wording or unfair questions that could trick students. By giving all students the same fair chance, standardization reduces test anxiety and helps students show what they really know and can do.

What is the difference between validity and reliability in testing?

These two words are very important in testing:

- Validity means the test measures what it is supposed to measure. For example, a math test should measure math skills, not reading ability.

- Reliability means the test gives the same or similar results if it is repeated with the same person or group under the same conditions. A reliable test is like a good clock — it tells the same time every time.

A test must be both valid and reliable to be useful.

What is a pilot test and why is it important?

A pilot test is like a practice run. Before giving the final version of a test to everyone, a small group of people take it first. This helps the test developers find out if any questions are too easy, too hard, confusing, or biased. If problems are found, they can fix or replace bad questions before the real test is given. This step helps make the test more accurate and fair.

How do test makers make sure tests are fair for different groups of people?

Test developers check every question carefully to make sure it does not unfairly favor or hurt any group. They avoid language or examples that some people might not understand because of their culture, background, or experiences. They also test the draft with people from different backgrounds and make changes if any group finds the test harder to understand than others.

What problems happen if a test is unreliable?

If a test is unreliable, the scores will change every time someone takes it — even if nothing about their knowledge or ability has changed. This makes the results meaningless. For example, if a person takes a job skills test twice in one week and gets very different scores, the test cannot be trusted to measure their true skills.

What does “arranging items” mean when designing a test?

Once questions are written, test developers must decide the best order. Usually, tests start with easier questions to help people build confidence and reduce nervousness. Harder questions come later. Similar types of questions (like multiple-choice, true/false, or short answer) are grouped together so test-takers don’t get confused switching formats. This arrangement makes the test clear and less stressful.

Who uses standardized tests and why?

Standardized tests are used by:

- Schools: To check if students have learned what they were taught.

- Colleges and universities: To select students for admission.

- Employers: To check job skills or professional knowledge.

- Licensing boards: To give certifications for jobs like teaching, nursing, or driving.

- Psychologists: To help understand a person’s abilities, personality, or needs.

All these groups use standardized tests because they need results that are fair and trustworthy.

Can tests be standardized when given online?

Yes! Many modern tests are now given online instead of on paper. Standardization still matters. Online tests must have the same clear instructions, time limits, question order, and scoring rules for everyone. Extra security steps — like secure logins and proctors — help keep online testing fair and prevent cheating.

References:

- Anastasi, A., & Urbina, S. (1997). Psychological testing (7th ed.). Prentice Hall.

- Cohen, R. J., & Swerdlik, M. E. (2018). Psychological testing and assessment: An introduction to tests and measurement (9th ed.). McGraw-Hill Education.

- Kubiszyn, T., & Borich, G. (2016). Educational testing and measurement: Classroom application and practice (11th ed.). Wiley.

- Miller, M. D., Linn, R. L., & Gronlund, N. E. (2013). Measurement and assessment in teaching (11th ed.). Pearson.

Library Lecturer at Nurul Amin Degree College