Unsupervised Machine Learning:

Unsupervised learning is a machine learning approach in which models are trained on unlabeled data and discover hidden patterns and structures without supervision. Unlike supervised learning, there is no predefined output for the algorithm to learn from; the algorithm identifies relationships within the data on its own.

For example, imagine an unsupervised learning algorithm analyzing customer shopping behavior. Given a dataset of customer purchases without labels, the algorithm groups customers by their buying habits. The resulting clusters of similar behavior reveal insights such as customer segments, popular products, or shopping preferences, which help businesses tailor marketing strategies and improve customer experiences.

The rest of this article covers how unsupervised learning works, its types, and its advantages and disadvantages.

Why Use Unsupervised Learning?

Because it aims to discover the underlying structure of data rather than to predict a labeled output, unsupervised learning is useful for several purposes:

1. Exploratory Data Analysis: Unsupervised learning can be a valuable tool for exploring and understanding your data. It helps you identify hidden patterns, correlations, and outliers in your dataset, which can inform subsequent data analysis and decision-making.

2. Clustering: Unsupervised learning algorithms can group similar data points together into clusters. This is useful for tasks like customer segmentation, image segmentation, or any scenario where you want to find natural groupings within your data.

3. Dimensionality Reduction: Unsupervised learning techniques like Principal Component Analysis (PCA) or t-SNE can reduce the dimensionality of your data while preserving its important characteristics. This is helpful for visualizing high-dimensional data and speeding up subsequent supervised learning tasks.

4. Anomaly Detection: Unsupervised learning can be used to detect anomalies or outliers in your data. This is crucial in applications like fraud detection, network security, and quality control, where identifying unusual patterns is essential.

5. Feature Engineering: Unsupervised learning can generate new features or representations of your data, which can be used as input for supervised learning models. For example, word embeddings learned from unsupervised techniques like Word2Vec or GloVe can improve the performance of natural language processing tasks.

6. Generative Modeling: Unsupervised learning is also used to build generative models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). These models can generate new data samples that resemble the training data, which has applications in image generation, data augmentation, and more.

7. Preprocessing: Unsupervised techniques can help preprocess and clean data. Label-free steps such as imputation, normalization, and outlier removal can improve the quality of data before supervised learning algorithms are applied.

8. Reducing Labeling Costs: In some cases, labeling data for supervised learning can be expensive or time-consuming. Unsupervised learning can provide insights that reduce the amount of labeled data required, making the overall process more efficient.

9. Anonymizing Data: In privacy-sensitive applications, unsupervised learning can extract aggregate patterns from data after personally identifiable information has been removed, which helps preserve individual privacy. It does not guarantee anonymity by itself.

In short, unsupervised learning is most valuable when you want to gain insights from data, extract meaningful features, or solve problems where labeled data is scarce or expensive to obtain.
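As one illustration of the anomaly detection use listed above, the following is a minimal sketch that flags unusual values without any labels. It uses the modified z-score built on the median absolute deviation (MAD), which is one common convention and not the only possible method. The function name `mad_outliers`, the threshold of 3.5, and the transaction amounts are assumptions made up for this example.

```python
from statistics import median

def mad_outliers(values, threshold=3.5):
    """Return the values whose modified z-score exceeds the threshold."""
    center = median(values)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [v for v in values if 0.6745 * abs(v - center) / mad > threshold]

# Hypothetical daily transaction amounts; nothing marks which ones are unusual.
amounts = [21.0, 19.5, 22.3, 20.1, 18.9, 250.0, 20.7, 21.4]
outliers = mad_outliers(amounts)
print(outliers)
```

The median and MAD are used instead of the mean and standard deviation because a single extreme value would otherwise inflate the very statistics used to detect it.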

Working of Unsupervised Learning:

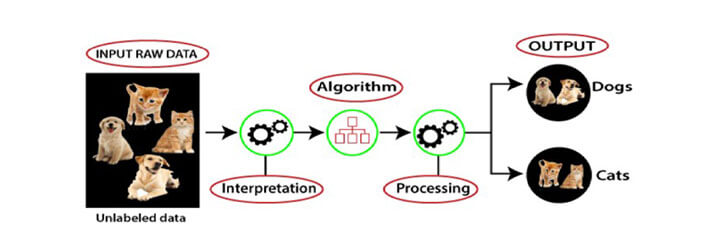

The diagram illustrates how unsupervised learning works.

Here, the input data is unlabeled: it is not categorized, and no corresponding outputs are given. This unlabeled data is fed to the machine learning model for training. The model first interprets the raw data to find hidden patterns, and then a suitable algorithm, such as k-means clustering or hierarchical clustering, is applied.

The algorithm then divides the data objects into groups according to the similarities and differences between them.

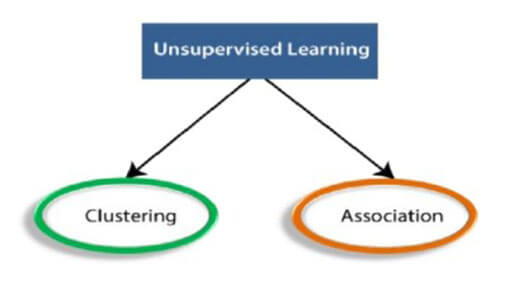

Types of Unsupervised Learning Algorithms:

Unsupervised learning algorithms can be grouped by the two types of problems they address:

1. Clustering: Clustering is a method of grouping objects into clusters so that objects in the same group are highly similar to each other and have few or no similarities with objects in other groups. Cluster analysis finds the commonalities between data objects and categorizes them according to the presence or absence of those commonalities.

a) K-Means: K-Means is a centroid-based clustering algorithm. It starts with K initial cluster centroids (centers) and assigns each data point to the nearest centroid. After the assignment, the centroids are updated to the mean of the points in their respective clusters. This process iterates until convergence. K-Means is widely used in various applications, including image compression, customer segmentation, and document clustering.

b) Hierarchical Clustering: Hierarchical clustering builds a hierarchical representation of clusters using either an agglomerative (bottom-up) or divisive (top-down) approach. It creates a dendrogram, which is a tree-like structure showing the merging or splitting of clusters at each level. Cutting the dendrogram at a chosen height yields a flat clustering; a lower cut gives more, smaller clusters, and a higher cut gives fewer, larger ones.

c) DBSCAN (Density-Based Spatial Clustering of Applications with Noise): DBSCAN identifies clusters based on the density of data points. It defines core points, which have a minimum number of neighbors within a specified distance, and forms clusters around them. Data points that are not part of any cluster are considered noise. DBSCAN can find clusters of arbitrary shapes and is robust to outliers.

d) Gaussian Mixture Models (GMM): GMM assumes that data points are generated from a mixture of Gaussian distributions. It uses the Expectation-Maximization (EM) algorithm to estimate the parameters of these distributions, such as means and covariances. GMM can be used for clustering but is also valuable for modeling the underlying probability distribution of data.

e) Agglomerative Clustering: This is the bottom-up form of hierarchical clustering. It starts with each data point as its own cluster and iteratively merges the closest pair of clusters according to a chosen linkage criterion (e.g., single linkage, complete linkage, average linkage). The process continues until a specified number of clusters or a distance threshold is reached.
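The assign-and-update loop described for K-Means above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production implementation: the function name `kmeans`, the choice of the first K points as initial centroids (used here only to keep the run deterministic), and the sample points are all made up for the example.

```python
import math

def kmeans(points, k, max_iter=100):
    """Cluster points with the assign/update iteration; returns (centroids, labels)."""
    # Assumption: the first k points serve as the initial centroids.
    # Practical implementations usually choose them randomly or with k-means++.
    centroids = [tuple(p) for p in points[:k]]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the loop has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, labels

# Hypothetical 2-D data with two visibly separate groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, labels = kmeans(points, k=2)
print(labels)
print(centroids)
```

Note that both initial centroids start inside the same group here, and the iteration still separates the two groups after a couple of passes. With less clearly separated data, the result can depend on the initialization, which is why K-Means is often run several times.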

2. Association: Association rule learning is an unsupervised method for finding relationships between variables in a large database. It determines which sets of items occur together in the dataset. For example, people who buy item X (say, bread) also tend to buy item Y (butter or jam). Such rules make marketing strategies more effective, and a typical application is Market Basket Analysis.

a) Apriori Algorithm: The Apriori algorithm is a classic association rule mining technique. It operates in two main steps: (1) finding frequent itemsets by iteratively pruning infrequent itemsets and (2) generating association rules from the frequent itemsets. It’s commonly used for market basket analysis, where the goal is to discover patterns like “customers who buy bread are likely to buy butter.”

b) FP-Growth (Frequent Pattern Growth): FP-Growth is an efficient algorithm for mining frequent itemsets. It constructs an FP-tree, a compact data structure, to represent the dataset. This structure facilitates the discovery of frequent patterns without generating candidate itemsets, making it faster than Apriori for large datasets.

c) Eclat (Equivalence Class Transformation): Eclat is another algorithm for mining frequent itemsets. It employs a depth-first search approach and uses equivalence classes to prune the search space efficiently. Eclat is especially useful when dealing with sparse datasets.

d) PrefixSpan: PrefixSpan is designed for sequential pattern mining. It finds frequent sequences of items in datasets where the order of items matters, such as analyzing user behavior in weblogs or DNA sequence analysis.

e) CAR (Classification Association Rules): CAR mining combines association rule mining with classification. It looks for association rules whose consequent is a class label, so that the discovered rules can support a classifier or serve as features in a machine-learning model. Because it relies on class labels, it sits at the boundary between unsupervised and supervised learning, but it can help identify valuable patterns for predictive modeling.

f) SPADE (Sequential Pattern Discovery using Equivalence classes): SPADE focuses on mining sequential patterns in transactional databases. It uses an efficient depth-first search strategy and employs equivalence classes to reduce the computational complexity.
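To make the two Apriori steps described above concrete, here is a small sketch of frequent itemset mining followed by rule generation. It is a simplified illustration, not an optimized implementation. The function names `apriori` and `rules`, the thresholds, and the baskets are assumptions invented for this example. Support is the fraction of baskets containing an itemset, and confidence is the support of the whole itemset divided by the support of the rule's left-hand side.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {itemset: support} for every itemset meeting min_support."""
    n = len(transactions)
    baskets = [frozenset(t) for t in transactions]

    def support(itemset):
        return sum(itemset <= b for b in baskets) / n

    # Level 1: frequent single items.
    items = {item for b in baskets for item in b}
    current = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {}
    size = 1
    while current:
        frequent.update({s: support(s) for s in current})
        size += 1
        # Join step: union pairs of frequent sets to form larger candidates.
        candidates = {a | b for a in current for b in current if len(a | b) == size}
        # Prune step: every (size - 1)-subset of a candidate must be frequent.
        current = {
            c for c in candidates
            if all(frozenset(s) in frequent for s in combinations(c, size - 1))
            and support(c) >= min_support
        }
    return frequent

def rules(frequent, min_confidence):
    """Return (antecedent, consequent, confidence) tuples from frequent itemsets."""
    out = []
    for itemset, sup in frequent.items():
        for r in range(1, len(itemset)):
            for lhs in map(frozenset, combinations(itemset, r)):
                conf = sup / frequent[lhs]
                if conf >= min_confidence:
                    out.append((set(lhs), set(itemset - lhs), conf))
    return out

# Hypothetical market baskets.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "eggs"},
    {"bread", "butter", "jam"},
]
frequent = apriori(baskets, min_support=0.4)
found = rules(frequent, min_confidence=0.7)
for lhs, rhs, conf in found:
    print(sorted(lhs), "->", sorted(rhs), round(conf, 2))
```

The prune step is what gives Apriori its name: a candidate is discarded as soon as any of its smaller subsets is known to be infrequent, so its support never has to be counted.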

Advantages and Disadvantages of Unsupervised Learning:

Unsupervised learning has both strengths and limitations, which should be weighed when deciding whether to use it for a particular problem. The key ones are listed below.

Advantages of Unsupervised Learning:

- Discovery of Hidden Patterns: Unsupervised learning algorithms can uncover hidden patterns and structures within data that may not be apparent through manual inspection. This can lead to valuable insights and a deeper understanding of the underlying data.

- Data Exploration: Unsupervised learning is a valuable tool for exploratory data analysis. It allows you to visualize and summarize complex datasets, helping you identify trends, outliers, and potential areas of interest.

- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) and t-SNE can reduce the dimensionality of high-dimensional data while preserving important information. This can lead to more efficient modeling and visualization.

- Clustering: Unsupervised learning can be used for clustering, which is the process of grouping similar data points together. Clustering can aid in tasks like customer segmentation, image segmentation, and anomaly detection.

- Feature Engineering: Unsupervised learning can generate new features or representations of data that can be used in subsequent supervised learning tasks. For example, word embeddings learned through unsupervised techniques can improve natural language processing models.

- Anomaly Detection: Unsupervised learning can be used to detect unusual patterns or outliers in data, which is important for applications like fraud detection and quality control.

- Reduced Labeling Costs: In some cases, labeling data for supervised learning can be expensive or time-consuming. Unsupervised learning can help reduce the need for labeled data by generating insights or features that enhance the performance of supervised models.

Disadvantages of Unsupervised Learning:

- Lack of Ground Truth: One of the main disadvantages of unsupervised learning is the absence of a ground truth or labeled data to evaluate the quality of results. This makes it challenging to assess the accuracy of clustering or pattern discovery.

- Subjectivity: Unsupervised learning results can be subjective and depend on the choice of algorithm, hyperparameters, and preprocessing steps. Different approaches may lead to different outcomes, and there is no one “correct” solution.

- Interpretability: Some unsupervised learning models, especially deep learning models, can be difficult to interpret. Understanding why a model made a particular decision or identified certain patterns can be challenging.

- Computationally Intensive: Some unsupervised learning algorithms can be computationally intensive, especially when dealing with large datasets or high-dimensional data. This can require significant computational resources.

- Curse of Dimensionality: High-dimensional data can pose challenges for unsupervised learning, as the distance metrics used in many algorithms become less effective in high-dimensional spaces. Dimensionality reduction techniques are often needed to address this issue.

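- Difficulty in Evaluation: Because there is often no ground truth to compare against, the quality of a clustering or discovered pattern has to be judged with internal measures, such as how compact and well separated the clusters are, or by how useful the result is in practice. Evaluation metrics for unsupervised learning are therefore often application-specific.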
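One way to evaluate a clustering without ground truth is an internal measure such as the silhouette coefficient, which compares each point's average distance to its own cluster with its average distance to the nearest other cluster. The sketch below is an illustration only. The function name `silhouette_score`, the sample points, and both labelings are assumptions made for this example, and the function expects at least two clusters and points that are not all identical.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (ranges from -1 to 1)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for single-point clusters
            continue
        # a: mean distance to the other members of the point's own cluster
        # (the point's zero distance to itself adds nothing to the sum).
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster.
        b = min(
            sum(math.dist(p, q) for q in other) / len(other)
            for other_lab, other in clusters.items() if other_lab != lab
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

# Hypothetical data and two candidate groupings of the same points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
good = silhouette_score(points, [0, 0, 0, 1, 1, 1])  # groups nearby points
bad = silhouette_score(points, [0, 1, 0, 1, 0, 1])   # mixes the two groups
print(round(good, 3), round(bad, 3))
```

A higher score suggests more compact and better separated clusters, but it only measures geometric structure. Whether the clusters are meaningful for the application still has to be judged separately.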

In conclusion, unsupervised machine learning is a versatile part of the broader field of artificial intelligence. It lets us draw insights from data without explicit labels by revealing hidden patterns, clustering data points, and providing a foundation for further analysis. Its strengths in data exploration, dimensionality reduction, and feature generation come with challenges, such as the absence of a ground truth for evaluation and the subjective nature of results. Even so, as datasets grow larger and more intricate, unsupervised learning becomes increasingly useful for making sense of unstructured information, which makes it an essential tool in the data scientist’s toolkit.

Library Lecturer at Nurul Amin Degree College