Validity and Reliability in Research: Ensuring Robust Findings through Methodological Rigor

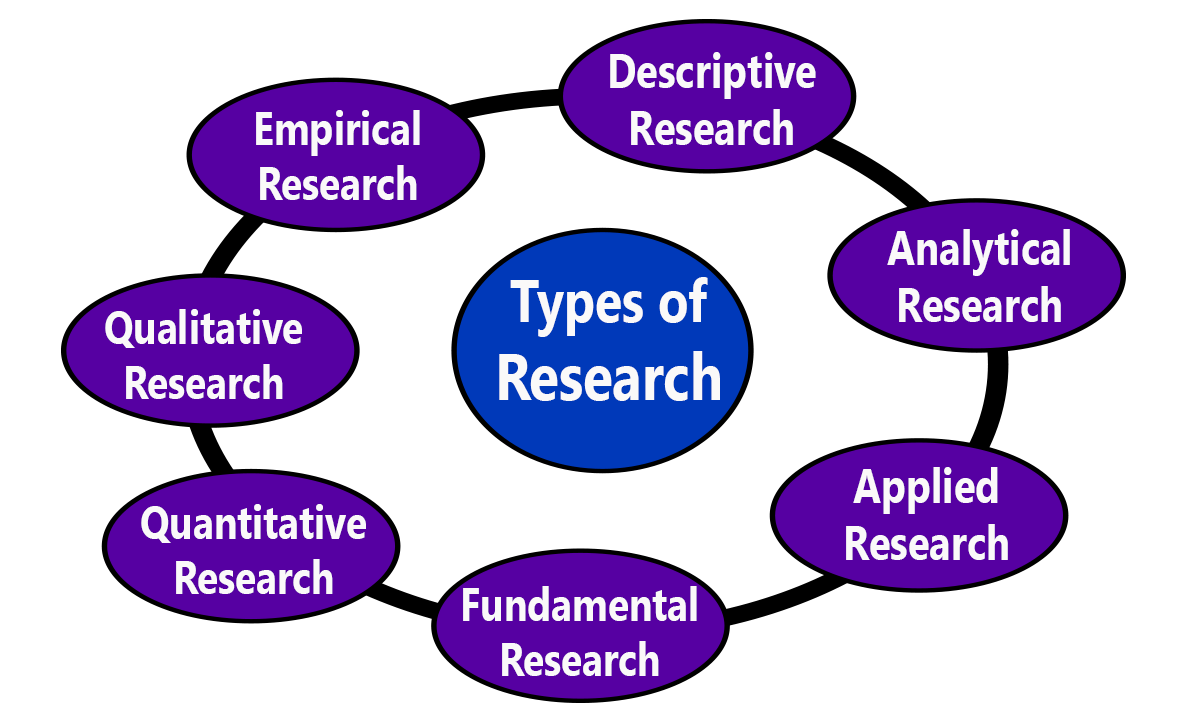

Research is a systematic inquiry aimed at acquiring new knowledge or advancing existing knowledge in a particular field or discipline (Leedy & Ormrod, 2021). Central to the research process is the need for accurate and dependable findings, because these findings form the basis for theory development, policy formulation, and practical applications. Achieving this level of rigor requires attention to two fundamental concepts: validity and reliability. Validity refers to the extent to which a study or instrument measures what it intends to measure (Trochim & Donnelly, 2008); in other words, a valid research instrument accurately reflects the concept or construct it is designed to assess. Reliability, on the other hand, refers to the consistency and stability of research measurements (Carmines & Zeller, 1979): in a reliable study, repeating the same measurements under the same conditions would produce highly consistent, reproducible results. This article explores both concepts, the main types of each, the challenges of achieving them, and the strategies researchers use to ensure robust findings through methodological rigor.

1. Validity in Research:

1.1. Definition of Validity: Validity is a multifaceted concept that encompasses various dimensions, each evaluating a different aspect of a research study’s accuracy and authenticity. To ensure that research findings accurately represent the intended constructs, researchers must establish the validity of their measures (Trochim & Donnelly, 2008). Methodologists distinguish several types of validity, each addressing a different facet of the research process.

1.2. Types of Validity: Researchers consider several types of validity when assessing the accuracy and appropriateness of their measures. Each type focuses on a different aspect of the measurement process, and together they help ensure that the research reflects the construct or phenomenon under investigation. The main types are:

1.2.1. Content Validity: Content validity refers to the degree to which the items or questions in a research instrument adequately represent the full range of the construct being measured (Carmines & Zeller, 1979). In other words, it assesses whether the items comprehensively cover the concept under investigation. Content validity matters because an instrument that omits or overweights parts of a construct cannot measure it in a representative, unbiased manner.

For instance, if a researcher is developing a questionnaire to assess the quality of life in cancer patients, content validity would entail including items that encompass various aspects of quality of life, such as physical health, emotional well-being, social support, and financial stability. Failing to include items that cover these dimensions would result in a lack of content validity.
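One simple way to make this concrete is to tag every draft item with the dimension it is meant to tap and check for dimensions that no item covers. The minimal sketch below assumes a hypothetical blueprint built from the four quality-of-life dimensions named above; the item wording and the `coverage_report` helper are illustrative only, and in practice the item-to-dimension mapping would come from expert review rather than the researcher alone.

```python
# Hypothetical blueprint: the quality-of-life dimensions the scale should cover.
REQUIRED_DIMENSIONS = [
    "physical health",
    "emotional well-being",
    "social support",
    "financial stability",
]


def coverage_report(item_dimensions, required):
    """Count items per required dimension and list dimensions with no items."""
    counts = {dim: 0 for dim in required}
    for dim in item_dimensions.values():
        if dim in counts:
            counts[dim] += 1
    missing = [dim for dim in required if counts[dim] == 0]
    return counts, missing


# Hypothetical draft items, each tagged with the dimension it is meant to tap.
draft_items = {
    "I have enough energy for daily activities.": "physical health",
    "Pain limits what I can do.": "physical health",
    "I feel hopeful about the future.": "emotional well-being",
    "I can rely on friends or family for help.": "social support",
}

counts, missing = coverage_report(draft_items, REQUIRED_DIMENSIONS)
print(counts)
print("Dimensions with no items:", missing)  # ['financial stability']
```

A gap like the one flagged here is a signal to write new items before data collection; a coverage count cannot, on its own, show that the items tap each dimension well.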

1.2.2. Criterion Validity: Criterion validity assesses how well scores on a research instrument correspond to, or predict, a specific external criterion or outcome (Trochim & Donnelly, 2008). There are two subtypes of criterion validity: concurrent validity and predictive validity.

- Concurrent Validity: Concurrent validity evaluates how well a research measure aligns with an established criterion measured at the same time (Carmines & Zeller, 1979). For example, if a new intelligence test correlates highly with an established intelligence test when administered to the same group of participants, it demonstrates concurrent validity.

- Predictive Validity: Predictive validity, on the other hand, assesses how well a research measure predicts a future criterion or outcome (Trochim & Donnelly, 2008). For instance, if a college admissions test can accurately predict a student’s academic performance during their first year of college, it demonstrates predictive validity.
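Both forms of criterion validity are commonly summarized as a correlation between the instrument and the criterion. The minimal Python sketch below computes a Pearson correlation by hand; the score lists are invented for illustration, and Pearson's r is one common choice of coefficient rather than the only acceptable one.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cov / math.sqrt(ss_x * ss_y)


# Concurrent validity (hypothetical data): new and established intelligence
# tests given to the same six participants in the same session.
new_iq = [98, 105, 112, 120, 91, 130]
established_iq = [100, 103, 115, 118, 95, 127]

# Predictive validity (hypothetical data): admissions test scores and the
# same students' first-year GPA, collected a year later.
admissions = [1150, 1280, 1010, 1400, 1220, 1090]
first_year_gpa = [3.0, 3.4, 2.7, 3.8, 3.1, 2.9]

print("concurrent r =", round(pearson_r(new_iq, established_iq), 2))
print("predictive r =", round(pearson_r(admissions, first_year_gpa), 2))
```

The computation is identical for both subtypes; what distinguishes them is when the criterion is measured.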

1.2.3. Construct Validity: Construct validity is the most complex and abstract type of validity, focusing on the underlying theoretical concepts or constructs being measured (Carmines & Zeller, 1979). It examines whether a research instrument accurately captures the theoretical construct it is intended to assess. Establishing construct validity involves demonstrating that the instrument behaves as expected based on existing theories and empirical evidence.

Researchers typically draw on several kinds of evidence to establish construct validity, two of the most common being convergent and discriminant validity:

- Convergent Validity: Convergent validity examines the degree to which different measures that are theoretically related to the same construct yield similar results (Trochim & Donnelly, 2008). For instance, in a study measuring self-esteem, researchers would expect high positive correlations between their self-esteem questionnaire and other established self-esteem measures.

- Discriminant Validity: Discriminant validity assesses the extent to which a measure is distinct from other constructs or measures that it should not be related to (Carmines & Zeller, 1979). In the self-esteem example, researchers would expect low or non-significant correlations between their self-esteem questionnaire and measures of unrelated constructs, such as intelligence.
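Convergent and discriminant evidence can be examined side by side by correlating the new questionnaire with a related measure and with an unrelated one. The sketch below uses made-up scores for eight participants; the variable names and the direct comparison of the two coefficients are illustrative assumptions, not a formal statistical test.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical scores for the same eight participants.
new_self_esteem = [22, 30, 18, 27, 35, 25, 20, 32]
established_self_esteem = [24, 29, 17, 28, 34, 23, 21, 33]  # related construct
intelligence = [104, 99, 110, 97, 106, 112, 101, 108]       # unrelated construct

r_convergent = pearson_r(new_self_esteem, established_self_esteem)
r_discriminant = pearson_r(new_self_esteem, intelligence)
print(f"convergent r = {r_convergent:.2f}, discriminant r = {r_discriminant:.2f}")

# Expected pattern: strong correlation with the related measure,
# weak correlation with the unrelated one.
if r_convergent > abs(r_discriminant):
    print("Pattern is consistent with construct validity")
```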

1.3. Importance of Validity: Establishing validity is crucial in research for several reasons:

- Accurate Measurement: Validity ensures that research instruments accurately measure the intended constructs, allowing researchers to draw meaningful conclusions based on their data (Trochim & Donnelly, 2008).

- Theoretical Foundation: Construct validity, in particular, provides a strong theoretical foundation for research by demonstrating that the measurement aligns with existing theories and concepts (Carmines & Zeller, 1979).

- Application and Generalizability: Valid research findings are more likely to be applicable to real-world situations and generalizable to broader populations (American Psychological Association, 2019).

Without validity, research findings lack credibility and may lead to erroneous conclusions. Hence, researchers must take rigorous steps to establish and report the validity of their measures.

2. Reliability in Research:

2.1. Definition of Reliability: Reliability, like validity, is a critical aspect of research methodology; it concerns the consistency and stability of research measurements (Carmines & Zeller, 1979). A reliable measure should yield consistent results when administered under similar conditions. In other words, if the same measurements were taken repeatedly, the results should be highly reproducible.

2.2. Types of Reliability: Researchers should consider several types of reliability, including the following:

2.2.1. Test-Retest Reliability: Test-retest reliability assesses the stability of a measurement over time (Carmines & Zeller, 1979). To establish test-retest reliability, researchers administer the same measure to the same group of participants on two separate occasions, with a reasonable time gap between the administrations. High correlations between the two sets of scores indicate strong test-retest reliability.

For example, if a personality questionnaire is administered to a group of individuals, and their scores remain relatively stable when the same questionnaire is readministered two weeks later, it demonstrates good test-retest reliability.
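A test-retest check amounts to correlating the two administrations. The sketch below uses hypothetical scores for seven people and also reports the average shift between sessions, because a high correlation alone would not reveal a uniform rise or fall in everyone's scores; that extra check is an addition for illustration, not part of the procedure described above.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical questionnaire scores for the same seven people, two weeks apart.
time1 = [34, 41, 28, 45, 38, 30, 42]
time2 = [36, 40, 27, 44, 39, 31, 43]

retest_r = pearson_r(time1, time2)
# Average change from the first to the second administration.
mean_shift = sum(b - a for a, b in zip(time1, time2)) / len(time1)

print(f"test-retest r = {retest_r:.2f}, mean shift = {mean_shift:+.2f} points")
```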

2.2.2. Split-Half Reliability: Split-half reliability assesses the internal consistency of an instrument by dividing its items into two halves and comparing the results (Trochim & Donnelly, 2008). This method is typically used for questionnaires with multiple items. Researchers compute the correlation between the scores on one half of the items and the scores on the other half. High correlations indicate strong split-half reliability.

For instance, if a researcher is using a 20-item depression scale, they could randomly split it into two sets of 10 items each. Then, they would compute the correlation between participants’ total scores on the first set and their total scores on the second set.
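The split-half procedure can be sketched as follows. The responses are simulated (each item score is a respondent's latent severity plus random noise) rather than real depression-scale data, and the helper names are hypothetical. The final Spearman-Brown step, which estimates the reliability of the full 20-item scale from the correlation between two 10-item halves, is a standard adjustment that the description above does not mention.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cov / math.sqrt(ss_x * ss_y)


def split_half_reliability(responses, seed=0):
    """Randomly split items into halves; return (half-test r, Spearman-Brown estimate).

    `responses` holds one list of item scores per respondent.
    """
    n_items = len(responses[0])
    item_order = list(range(n_items))
    random.Random(seed).shuffle(item_order)  # random split of the items
    half_a = item_order[: n_items // 2]
    half_b = item_order[n_items // 2:]
    scores_a = [sum(row[i] for i in half_a) for row in responses]
    scores_b = [sum(row[i] for i in half_b) for row in responses]
    r_half = pearson_r(scores_a, scores_b)
    # Spearman-Brown: project the half-length correlation to full length.
    r_full = 2 * r_half / (1 + r_half)
    return r_half, r_full


# Simulated data (not real scale responses): 200 respondents x 20 items.
rng = random.Random(42)
responses = []
for _ in range(200):
    severity = rng.gauss(0, 1)
    responses.append([severity + rng.gauss(0, 1) for _ in range(20)])

r_half, r_full = split_half_reliability(responses)
print(f"half-test r = {r_half:.2f}, Spearman-Brown estimate = {r_full:.2f}")
```

Different random splits give slightly different coefficients, which is one reason many researchers report Cronbach's alpha instead of a single split.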

2.2.3. Inter-Rater Reliability: Inter-rater reliability, also known as inter-observer reliability, assesses the consistency of measurements when different raters or observers are involved (American Psychological Association, 2019). It is commonly used in observational research or when human judgment is required in data collection. High inter-rater reliability indicates that different raters or observers are making consistent judgments or measurements.

For example, in a study where multiple raters assess the behavior of children in a classroom, high inter-rater reliability would mean that the different raters consistently agree on the behavior ratings they assign to each child.
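For categorical ratings like the classroom example, two raters' agreement is often summarized with Cohen's kappa, which discounts the agreement they would reach by chance alone. Kappa is one common choice for two raters (other coefficients exist for more raters or for continuous ratings); the ratings below are invented, and `cohens_kappa` is a hypothetical helper.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Return (observed agreement, Cohen's kappa) for two raters' category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty lists of ratings")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: product of each rater's marginal proportions per category.
    categories = set(counts_a) | set(counts_b)
    expected = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return observed, (observed - expected) / (1 - expected)


# Hypothetical behavior ratings for ten children from two observers.
rater_1 = ["on-task", "on-task", "off-task", "disruptive", "on-task",
           "off-task", "on-task", "on-task", "off-task", "on-task"]
rater_2 = ["on-task", "on-task", "off-task", "disruptive", "on-task",
           "on-task", "on-task", "on-task", "off-task", "on-task"]

agreement, kappa = cohens_kappa(rater_1, rater_2)
print(f"observed agreement = {agreement:.2f}, kappa = {kappa:.2f}")
```

Here the raters agree on nine of ten children, but kappa is lower than 0.90 because much of that agreement would be expected by chance when most children are rated "on-task".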

2.2.4. Internal Consistency Reliability: Internal consistency reliability evaluates the degree to which items within a research instrument measure the same underlying construct (Carmines & Zeller, 1979). Cronbach’s alpha coefficient is a commonly used statistic to assess internal consistency reliability. Its maximum is 1 and it usually falls between 0 and 1, with higher values indicating greater internal consistency; it can become negative when items covary negatively, for example when reverse-worded items have not been recoded.

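For example, in a satisfaction survey, if respondents who rate one “customer service” item highly also tend to rate the other customer service items highly (and those who rate one low tend to rate the others low), the items are internally consistent, suggesting good reliability for the customer service dimension of the survey.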
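Cronbach's alpha is computed as alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below applies that formula to invented 1 to 5 ratings from five respondents on four hypothetical customer service items; `cronbach_alpha` is an illustrative helper, not a library function. The second call shows that alpha can drop below zero when two items move in opposite directions.

```python
import statistics


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents' item scores (one row each)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*rows))  # one tuple of scores per item
    item_variances = [statistics.variance(item) for item in items]
    total_variance = statistics.variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 1-5 ratings: five respondents x four customer service items.
ratings = [
    [5, 4, 5, 5],
    [4, 4, 4, 5],
    [2, 3, 2, 2],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
]
print(round(cronbach_alpha(ratings), 2))  # 0.97

# Two items moving in opposite directions (e.g., an unrecoded reverse-worded item).
print(round(cronbach_alpha([[1, 4], [5, 2], [3, 3]]), 2))  # -8.0
```

Sample variances are used for both the items and the totals; using population variances throughout would give the same alpha, since the scaling factor cancels.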

2.3. Importance of Reliability: Reliability is crucial in research for several reasons:

- Reproducibility: A reliable measure helps ensure that research findings can be reproduced by other researchers or in subsequent studies (Trochim & Donnelly, 2008).

- Minimizing Error: Reliability helps minimize measurement error, which can distort research results and lead to incorrect conclusions (Carmines & Zeller, 1979).

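- Trustworthiness: Reliable measures increase the trustworthiness and credibility of research findings (American Psychological Association, 2019). Reliability is necessary, but not sufficient, for validity: an inconsistent measure cannot accurately capture a construct, yet a consistent measure may still be measuring the wrong thing.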

In essence, without reliability, researchers cannot have confidence in the consistency of their measurements, making it challenging to draw meaningful and dependable conclusions from their data.

3. Ensuring Validity and Reliability:

3.1. Challenges in Ensuring Validity and Reliability: While achieving validity and reliability is crucial in research, it is not always straightforward. Researchers often encounter the following challenges:

3.1.1. Measurement Error: Measurement error refers to the variability or inaccuracy introduced by the measurement process itself (Carmines & Zeller, 1979). Factors such as poorly designed instruments, ambiguous questions, or inconsistent administration can contribute to measurement error. To mitigate this challenge, researchers must carefully design and pretest their instruments to minimize measurement error.
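The effect of measurement error on reliability can be illustrated with a small simulation. It assumes the classical test theory model, in which each observed score is a true score plus independent random error (an assumption introduced here for illustration). Two simulated administrations share the same true scores, so any drop in their correlation comes from error alone; `simulated_retest_r` is a hypothetical helper.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cov / math.sqrt(ss_x * ss_y)


def simulated_retest_r(error_sd, n=1000, seed=1):
    """Correlate two simulated administrations under observed = true + error."""
    rng = random.Random(seed)
    # Same seed on every call, so every condition gets the same true scores.
    true_scores = [rng.gauss(50, 10) for _ in range(n)]
    # Independent random error added separately at each administration.
    time1 = [t + rng.gauss(0, error_sd) for t in true_scores]
    time2 = [t + rng.gauss(0, error_sd) for t in true_scores]
    return pearson_r(time1, time2)


for error_sd in (2, 5, 10):
    print(f"error SD {error_sd:>2}: retest r = {simulated_retest_r(error_sd):.2f}")
```

With a true-score standard deviation of 10, the expected correlation under this model is roughly 100 / (100 + error_sd squared), so it should fall from about 0.96 to about 0.80 and then 0.50 as the error grows.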

3.1.2. Response Bias: Response bias occurs when participants systematically provide inaccurate responses, often due to social desirability or the desire to conform to perceived expectations (American Psychological Association, 2019). Researchers can address response bias by ensuring anonymity, using validated measures, and employing techniques to elicit more honest responses.

3.1.3. Sample Bias: Sample bias occurs when the study sample is not representative of the population under investigation (Trochim & Donnelly, 2008). Researchers must use appropriate sampling methods to minimize sample bias and ensure that the findings can be generalized to the broader population.

3.1.4. Instrument Design: Poorly designed research instruments can lead to validity and reliability issues (Carmines & Zeller, 1979). Researchers should carefully construct their measures, pilot test them, and make necessary revisions to improve instrument design.

3.2. Strategies to Enhance Validity and Reliability: To overcome these challenges and enhance validity and reliability, researchers can employ the following strategies:

3.2.1. Pilot Testing: Pilot testing involves administering the research instrument to a small sample of participants to identify and rectify any issues with clarity, wording, or item redundancy (American Psychological Association, 2019). Feedback from pilot testing helps improve the instrument’s validity and reliability.

3.2.2. Standardization: Standardizing the research process involves establishing uniform procedures for data collection, administration, and scoring (Trochim & Donnelly, 2008). By standardizing these processes, researchers minimize potential sources of error and increase the likelihood of obtaining valid and reliable results.

3.2.3. Use of Established Measures: When possible, researchers should use established and validated measures rather than creating new instruments (Carmines & Zeller, 1979). Established measures have undergone rigorous testing for validity and reliability, increasing confidence in their results.

3.2.4. Randomization and Counterbalancing: In experimental research, randomization and counterbalancing can enhance the internal validity of a study (American Psychological Association, 2019). Randomization ensures that each participant has an equal chance of being assigned to any condition, while counterbalancing controls for order effects in repeated-measures designs.
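Random assignment and complete counterbalancing can both be sketched with Python's standard library. The function names below are hypothetical. The assignment shuffles participants and deals them round-robin into conditions so that groups stay equal in size; each participant then has an equal chance of landing in any condition when the number of participants is a multiple of the number of conditions. The counterbalancing cycles participants through every possible order of the conditions.

```python
import itertools
import random


def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them round-robin into equal-sized groups."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, person in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(person)
    return groups


def counterbalanced_orders(participants, conditions):
    """Complete counterbalancing: cycle participants through every condition order."""
    orders = list(itertools.permutations(conditions))
    return {person: orders[i % len(orders)] for i, person in enumerate(participants)}


# Between-subjects design: 12 hypothetical participants, two conditions.
groups = randomly_assign([f"P{i}" for i in range(1, 13)], ["control", "treatment"], seed=7)
print(groups)

# Repeated-measures design: 6 participants, each completing conditions A, B, and C.
orders = counterbalanced_orders([f"P{i}" for i in range(1, 7)], ["A", "B", "C"])
for person, order in orders.items():
    print(person, "->", " then ".join(order))
```

Complete counterbalancing needs k! orders for k conditions (6 for three conditions, 24 for four), so designs with many conditions often use a subset of orders, such as a Latin square, instead.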

3.2.5. Triangulation: Triangulation involves using multiple methods or sources of data to corroborate research findings (Trochim & Donnelly, 2008). By triangulating data, researchers can strengthen the validity of their results through convergence of evidence.

3.3. Reporting Validity and Reliability: In accordance with APA style guidelines, researchers should transparently report the steps taken to establish validity and reliability in their research studies. This includes providing detailed descriptions of the measures used, any pilot testing or instrument development processes, and statistical analyses conducted to assess validity and reliability.

Additionally, researchers should report the specific validity and reliability coefficients or statistics, such as Cronbach’s alpha for internal consistency reliability or correlation coefficients for criterion validity (American Psychological Association, 2019). Transparent reporting ensures that readers and fellow researchers can evaluate the credibility of the study’s findings.

In conclusion, validity and reliability are foundational concepts in research methodology, serving as essential criteria for evaluating the quality and credibility of research findings. Validity ensures that research instruments accurately measure the intended constructs, while reliability reflects the consistency and stability of research measurements. Researchers face various challenges in achieving validity and reliability, including measurement error, response bias, sample bias, and instrument design issues.

To enhance validity and reliability, researchers can employ strategies such as pilot testing, standardization, using established measures, randomization, counterbalancing, and triangulation. Transparent reporting of the steps taken to establish validity and reliability is also crucial to allow for the evaluation of research findings by the scientific community.

Ultimately, validity and reliability are not merely technical aspects of research; they are fundamental to the trustworthiness and credibility of research findings. Researchers must rigorously address these criteria to ensure that their studies contribute to the advancement of knowledge in their respective fields and have a meaningful impact on theory, practice, and policy.

References:

- American Psychological Association. (2019). Publication manual of the American Psychological Association (7th ed.). https://doi.org/10.1037/0000165-000

- Carmines, E. G., & Zeller, R. A. (1979). Reliability and validity assessment. SAGE Publications.

- Leedy, P. D., & Ormrod, J. E. (2021). Practical research: Planning and design (12th ed.). Pearson.

- Trochim, W. M. K., & Donnelly, J. P. (2008). The research methods knowledge base (3rd ed.). Atomic Dog.

Former Student at Rajshahi University