Webometrics:

Studies in Library and Information Science (LIS) long focused on static, tangible information resources, a tradition reaching back to the invention of writing by the Sumerians in the third millennium BC or possibly earlier. By the mid-1990s, however, the focus had shifted dramatically toward dynamic, intangible resources, as researchers began to investigate the nature and metrics of the web by applying bibliometric and informetric techniques and methodologies to the continuous flow of web-based information resources. The rest of this article covers the definition, objectives, importance, scope, and areas of webometrics.

Definition of Webometrics: To understand the nature of the term “webometrics”, it helps to look at its definitions and associated terms:

“Webometrics is the study of the quantitative aspects of the construction and use of information resources, structures and technologies on the web, drawing on bibliometric and informetric approaches” (Bjorneborn & Ingwersen 2004).

Thelwall later offered a further, content-oriented definition: “the study of web-based content with primarily quantitative methods for social science research goals using techniques that are not specific to one field of study” (Thelwall 2009).

In short, webometrics is the application of informetric methods to the web. Carrying out the same types of informetric analyses on the web is possible because search engines let the web be queried much like a citation database. Informetric methods based on word counts clearly carry over to the web. What is new is treating the web as a dynamic citation network, in which the traditional information entities and citations are replaced by web pages, with hyperlinks acting rather like citations.
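To make the citation analogy concrete, here is a minimal Python sketch, assuming the hyperlinks have already been collected as (source page, target page) pairs. The page names and the helpers `inlink_counts` and `outlink_counts` are hypothetical. Counting the distinct pages that link to a page plays the role of a citation count, and counting the distinct pages a page links to plays the role of its reference list.

```python
from collections import Counter

# Hypothetical hyperlinks as (source_page, target_page) pairs; in a real study
# these would come from a crawler or a search engine, not be typed in by hand.
links = [
    ("a.example/p1", "b.example/index"),
    ("a.example/p2", "b.example/index"),
    ("c.example/home", "b.example/index"),
    ("c.example/home", "a.example/p1"),
    ("b.example/index", "a.example/p1"),
    ("a.example/p2", "b.example/index"),  # duplicate link, counted once
]

def inlink_counts(link_pairs):
    """Distinct pages linking to each target (analogous to citations received)."""
    # Deduplicate so a page linking twice to the same target counts once,
    # much as a paper citing another twice is usually counted as one citation.
    unique = set(link_pairs)
    return Counter(target for _source, target in unique)

def outlink_counts(link_pairs):
    """Distinct targets each page links to (analogous to a reference list)."""
    unique = set(link_pairs)
    return Counter(source for source, _target in unique)

if __name__ == "__main__":
    for page, count in inlink_counts(links).most_common():
        print(page, count)
```

Deduplicating the pairs first is a design choice, not a fixed rule; some studies count every link occurrence instead.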

The objectives: Webometrics, the quantitative study of web-related phenomena, emerged from the realization that methods originally designed for the bibliometric analysis of citation patterns in scientific journal articles could be applied to the web, with commercial search engines providing the raw data. Webometrics also aims to quantify information-seeking (web search) behavior on the web. Its clearest purpose is to support research into web phenomena.

The importance: Webometric applications, such as measuring the online intellectual output of science, research and development, and patents for industrial assessment, are crucial in reinforcing the policies and strategies of industry and higher education institutions.

Scope of webometrics:

The webometrics discipline is concerned with measuring web-based phenomena such as websites, web pages, parts of web pages, election websites, academic websites, blogs, social networking sites, words in web pages, hyperlinks, web search engine results, and national web domains. Webometrics also encompasses a range of recent developments, such as patent analysis, national research evaluation exercises, visualization techniques, new applications, online citation indexes, and the creation of digital libraries (Thelwall 2008). Webometric research has since expanded from general or academic web analyses to studies of social websites such as blogs and RSS feeds, and of social networks such as Facebook, YouTube, and Twitter.

One of the best-known webometric measures is the web impact factor (WIF), proposed by Peter Ingwersen in 1998. Ingwersen suggested that the WIF, as a quantitative measure, could indicate the relative attractiveness of countries or research sites on the web at a given point in time. The WIF's calculation is based on the same concepts already used to calculate the journal impact factor (JIF).

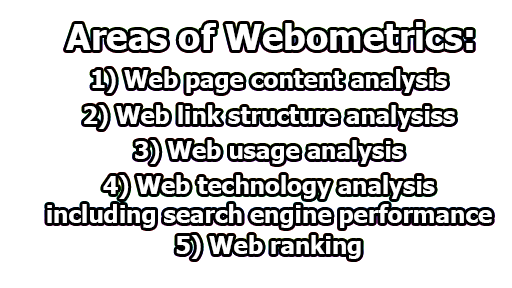
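As a rough illustration, the WIF is commonly described as the number of web pages that link to a site divided by the number of pages published on that site, much as the JIF divides citations by citable items; a variant, the external WIF, counts only linking pages located on other sites. The sketch below assumes that a site is identified by its host name and that the page list and link pairs are already available (historically they were gathered from search engines). `web_impact_factor` and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit

def host_of(url):
    """Host name of a URL; here the host stands in for 'the site' (an assumption)."""
    return urlsplit(url).hostname or ""

def web_impact_factor(site_host, site_pages, links, external_only=False):
    """WIF = distinct pages linking to the site / pages published on the site.

    site_pages: URLs of pages published on the site (the denominator).
    links: iterable of (source_url, target_url) hyperlink pairs.
    external_only: if True, ignore self-links (the 'external WIF' variant).
    """
    pages = {p for p in site_pages if host_of(p) == site_host}
    if not pages:
        raise ValueError(f"no pages found for {site_host!r}")
    # Collect each linking page once, however many links it contains.
    linking_pages = {
        source
        for source, target in links
        if host_of(target) == site_host
        and not (external_only and host_of(source) == site_host)
    }
    return len(linking_pages) / len(pages)

# Hypothetical example data (not real measurements).
SITE_PAGES = [
    "http://uni.example/",
    "http://uni.example/research",
    "http://uni.example/staff",
    "http://uni.example/news",
]
LINKS = [
    ("http://other.example/a", "http://uni.example/"),
    ("http://other.example/a", "http://uni.example/research"),  # same linking page
    ("http://blog.example/post", "http://uni.example/research"),
    ("http://uni.example/", "http://uni.example/staff"),        # self-link
    ("http://uni.example/news", "http://other.example/a"),      # outgoing, ignored
]

if __name__ == "__main__":
    print(web_impact_factor("uni.example", SITE_PAGES, LINKS))                      # 0.75
    print(web_impact_factor("uni.example", SITE_PAGES, LINKS, external_only=True))  # 0.5
```

With four pages on `uni.example` and three distinct linking pages (one of them a self-link from the homepage), the WIF is 0.75 and the external WIF is 0.5. Counting linking pages rather than individual links keeps one heavily linking page from inflating the score.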

Areas of webometrics:

The definition given earlier covers quantitative aspects of both the construction and the use of the web, which yields five main areas of current webometric research:

1) Web page content analysis: OCLC offers two different definitions of the term “website”: one relies strictly on physical (network infrastructure) criteria, the other on informational (content-oriented) criteria:

“Web site (Physical Definition): the set of web pages located at one Internet Protocol address.” “Web site (Information Definition): a set of related web pages that, in the aggregate, form a composite object of informational relevance” (OCLC 1999).

The quality of content published on the web is often criticized as unreliable and as lacking the credibility needed for decision-making, education, and research and development, largely because web publishing has no general quality assurance or governance policies.

A website may contain surface content or deep web content, such as databases of open or restricted content. Taken to the extreme, the linked nature of the web supports the argument that the web itself is one grand website. Two kinds of web are commonly distinguished:

- Surface web: Its content is largely unsuitable for educational or academic purposes, and some of it may even be incorrect or biased. Consequently, excessive reliance on the “surface web” may generate superficial research habits, undermine the value of academic information, and adversely affect the quality of research and academic publications.

- Invisible web: The information retrievable through ordinary web searching is still only partial and not always credible. Search engines index only a small portion of the information available on the web, and most users never reach the information held in the “invisible web”, which contains high-quality information.

2) Web link structure analysis: Web documents are hypertext, with links to further associated documents, modelled on references in a scientific paper or cross-references in an index. In digital documents, these cross-references (links) can be followed with a mouse click. Entry to a website usually starts at the homepage, which is roughly equivalent to the title page in the print environment. The homepage often provides information about the site and may also function as a table of contents. After the homepage, the most essential bibliographic unit on the web is the web page (a static or interactive HTML file).
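Because entry usually starts at the homepage and links are followed one click at a time, one simple link-structure measure is each page's click distance from the homepage. The sketch below computes it with a breadth-first search over a hypothetical site graph; the page paths and the `click_depths` and `unreachable_pages` helpers are illustrative assumptions, not a standard tool. Pages the search never reaches cannot be found by following links from the homepage.

```python
from collections import deque

# Hypothetical site graph: each page maps to the pages it links to.
site_links = {
    "/": ["/about", "/research", "/news"],
    "/about": ["/", "/staff"],
    "/research": ["/research/projects", "/staff"],
    "/research/projects": [],
    "/news": ["/news/2024"],
    "/news/2024": [],
    "/staff": ["/"],
    "/orphan": ["/"],  # links out, but nothing links to it
}

def click_depths(graph, homepage="/"):
    """Breadth-first search: minimum number of clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def unreachable_pages(graph, homepage="/"):
    """Pages in the graph that cannot be reached from the homepage by links."""
    reached = click_depths(graph, homepage)
    return sorted(set(graph) - set(reached))

if __name__ == "__main__":
    for page, depth in sorted(click_depths(site_links).items(), key=lambda kv: kv[1]):
        print(depth, page)
    print("unreachable:", unreachable_pages(site_links))
```

Breadth-first search is used because it visits pages in order of distance, so the first time a page is reached is also its shortest click path.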

3) Web usage analysis: In its broadest interpretation, the web is a collection of HTTP servers operating on interconnected TCP/IP networks. In a narrower interpretation, it consists of all active HTTP servers that receive, understand, and process client requests. A server's accessibility can be determined from the response code returned to a client that sends it a request.
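Usage analysis often starts from server logs, where each request is recorded together with the response code the server returned. The sketch below assumes logs in the widely used Common Log Format; the sample lines use documentation IP addresses and are invented for illustration, and `summarize` is a hypothetical helper. It tallies responses by status class (2xx success, 4xx client error, 5xx server error), counts successful requests per page, and counts distinct client hosts.

```python
import re
from collections import Counter

# Common Log Format: host ident authuser [date] "request" status bytes
CLF_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+)$'
)

# Invented sample log lines (not real traffic).
SAMPLE_LOG = """\
192.0.2.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 2326
192.0.2.7 - - [10/Oct/2023:13:56:02 +0000] "GET /research HTTP/1.1" 200 5120
198.51.100.4 - - [10/Oct/2023:13:57:11 +0000] "GET /old-page HTTP/1.1" 404 512
192.0.2.1 - - [10/Oct/2023:13:58:40 +0000] "GET /research HTTP/1.1" 200 5120
203.0.113.9 - - [10/Oct/2023:13:59:05 +0000] "GET /search HTTP/1.1" 503 0
"""

def summarize(log_text):
    """Return (status-class counts, successful hits per path, distinct client hosts)."""
    status_classes = Counter()
    page_hits = Counter()
    clients = set()
    for line in log_text.splitlines():
        match = CLF_PATTERN.match(line)
        if not match:
            continue  # skip malformed lines rather than failing
        status = int(match["status"])
        status_classes[f"{status // 100}xx"] += 1
        clients.add(match["host"])  # every requesting host, successful or not
        if 200 <= status < 300:
            page_hits[match["path"]] += 1  # count only successfully served pages
    return status_classes, page_hits, len(clients)

if __name__ == "__main__":
    classes, hits, n_clients = summarize(SAMPLE_LOG)
    print(dict(classes), dict(hits), n_clients)
```

Real logs vary (for example, the extended "combined" format appends referrer and user-agent fields), so the pattern would need adjusting for other formats.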

4) Web technology analysis: Finding information on the Internet once seemed like trying to find a needle in a “haystack”. An added dimension to the haystack metaphor is that the Internet is a dynamic collection of networks. Archie, Veronica, Gopher, Wide Area Information Servers (WAIS), Mosaic, AltaVista, HotBot, Northern Light, Excite, Lycos, Infoseek, and others were the early search and access tools of the Internet, the first attempts to give it more order and searchability. The earliest of them, such as Archie, Veronica, Gopher, and WAIS, predate web browsers, and most of these tools have since been replaced by more popular web search tools such as Yahoo and Google (Valauskas, 1994; Bjorneborn & Ingwersen, 2001).

5) Web ranking: A webometric indicator has been launched in Spain to rank scientific repositories and universities worldwide. The repository ranking indicator lists major research-oriented repositories ordered by a composite index derived from their web presence and the web impact (link visibility) of their contents, using data obtained from the major commercial search engines.
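The idea of a composite index can be sketched as a weighted sum of normalized indicators. In the minimal Python example below, the two indicators follow the text (web presence and link visibility), but the repository names, the values, the 50/50 weights, and normalization by the maximum value are all hypothetical assumptions; they are not the indicators or weights used by the actual ranking.

```python
# Hypothetical indicator values per repository (made up, not real data).
repositories = {
    "Repository A": {"presence": 120_000, "visibility": 4_500},
    "Repository B": {"presence": 45_000, "visibility": 9_000},
    "Repository C": {"presence": 200_000, "visibility": 1_500},
}

# Hypothetical weights; a real ranking publishes its own indicators and weights.
WEIGHTS = {"presence": 0.5, "visibility": 0.5}

def composite_scores(data, weights):
    """Weighted sum of indicators, each scaled to 0..1 by its maximum value."""
    maxima = {k: max(r[k] for r in data.values()) for k in weights}
    return {
        name: sum(weights[k] * values[k] / maxima[k] for k in weights)
        for name, values in data.items()
    }

def rank(data, weights):
    """Names ordered from highest to lowest composite score."""
    scores = composite_scores(data, weights)
    return sorted(scores, key=scores.get, reverse=True)

if __name__ == "__main__":
    for position, name in enumerate(rank(repositories, WEIGHTS), start=1):
        print(position, name)
```

With these made-up numbers, Repository B ranks first despite being the smallest, because its link visibility is highest. Changing `WEIGHTS` changes the order, which is why a composite ranking needs to document its weighting scheme.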

References:

- Bjorneborn, L., & Ingwersen, P. (2001). Perspectives of webometrics. Scientometrics, 50(1), p. 67.

- Thelwall, M. (2009). Introduction to Webometrics: Quantitative Web Research for the Social Sciences. San Rafael, CA: Morgan & Claypool Publishers. (Synthesis Lectures on Information Concepts, Retrieval, and Services).

Assistant Teacher at Zinzira Pir Mohammad Pilot School and College