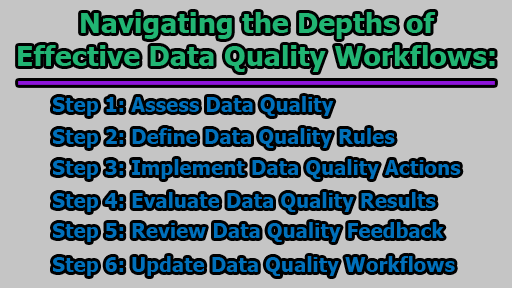

Navigating the Depths of Effective Data Quality Workflows

In data-driven decision-making, high-quality data is essential. Data quality workflows ensure the accuracy, completeness, consistency, and timeliness of data, and they form the backbone of any robust data management strategy. This article walks through six steps of an effective data quality workflow: assessing data quality, defining rules, implementing actions, evaluating results, reviewing feedback, and updating the workflow.

Step 1: Assess Data Quality: The initial step in any data quality workflow involves a comprehensive assessment of the current state of your data. This entails identifying sources, formats, types, and volumes of data, evaluating their alignment with business goals, and employing various methods and metrics for measurement. Techniques such as data profiling, auditing, validation, and data quality scorecards unveil potential issues like missing values, duplicates, outliers, inconsistencies, or errors, setting the foundation for subsequent improvements.

1.1 Identifying Sources, Formats, Types, and Volumes of Data:

- Sources: Begin by cataloging all the sources contributing to your data. This could include databases, spreadsheets, APIs, logs, and more.

- Formats: Understand the various formats your data comes in, such as CSV, Excel, JSON, or database tables.

- Types: Categorize your data into types like structured, semi-structured, or unstructured, depending on the nature of the information.

- Volumes: Assess the scale of your data. This involves understanding the sheer amount of data you’re dealing with, whether it’s in gigabytes, terabytes, or more.

1.2 Alignment with Business Goals:

- Business Objectives: Evaluate how well the current state of the data aligns with the overarching goals of the business. Identify critical data elements that directly impact key performance indicators (KPIs) and business outcomes.

- Data Relevance: Ensure that the data being collected and processed is still relevant to the current business landscape and objectives.

1.3 Methods and Metrics for Measurement:

- Data Profiling: Employ data profiling techniques to create summaries and statistics about the structure, content, and quality of your data.

- Data Auditing: Conduct audits to verify the accuracy and completeness of your data. This involves comparing data against known standards or rules.

- Validation Techniques: Use various validation methods to check data integrity, including pattern matching, range checks, and consistency checks.

- Data Quality Scorecards: Develop scorecards that provide a snapshot of data quality, often based on predefined metrics. This can help in communicating the overall health of the data to stakeholders.
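
As a rough illustration of the validation techniques listed above, the sketch below applies a pattern check, a range check, and a consistency check to a single record. The field names (`email`, `quantity`, `ordered_on`, `shipped_on`), the 1 to 1000 range, and the deliberately loose email pattern are assumptions made for this example, not rules from any particular system.

```python
import re
from datetime import date

# Deliberately loose pattern (assumption): something@something.tld
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate_order(order):
    """Return a list of human-readable problems found in one order record."""
    problems = []
    # Pattern matching: the email must look like an address.
    if not EMAIL_PATTERN.match(order["email"]):
        problems.append("email does not match the expected pattern")
    # Range check: quantity must fall inside an assumed valid range.
    if not 1 <= order["quantity"] <= 1000:
        problems.append("quantity is outside the range 1 to 1000")
    # Consistency check: an order cannot ship before it was placed.
    shipped = order["shipped_on"]
    if shipped is not None and shipped < order["ordered_on"]:
        problems.append("shipped_on is earlier than ordered_on")
    return problems

# Hypothetical records used only to exercise the checks.
good = {"email": "ana@example.com", "quantity": 3,
        "ordered_on": date(2024, 3, 1), "shipped_on": date(2024, 3, 4)}
bad = {"email": "ana.example.com", "quantity": 0,
       "ordered_on": date(2024, 3, 1), "shipped_on": date(2024, 2, 27)}

print(validate_order(good))
print(validate_order(bad))
```

Returning a list of problems, rather than stopping at the first failure, makes it easier to feed the results into a scorecard later.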

1.4 Uncovering Potential Issues:

- Missing Values: Identify and address instances where data fields have missing values, as these can impact analysis and decision-making.

- Duplicates: Locate and eliminate duplicate records to ensure that your analysis is based on unique and accurate information.

- Outliers: Identify and understand outliers that may skew analysis results. Decide whether these outliers are errors or legitimate data points.

- Inconsistencies and Errors: Scrutinize for inconsistencies in data formats, units, or naming conventions. Detect and rectify errors that may have occurred during data collection or entry.
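
The issue types above can be surfaced with very little code. The following is a minimal profiling sketch using only the Python standard library; the sample records, the field names, and the outlier threshold of 5 median absolute deviations are assumptions chosen for illustration, and a real assessment would likely use a dedicated profiling tool.

```python
from collections import Counter
from statistics import median

# Hypothetical sample records; None marks a missing value.
records = [
    {"id": 1, "email": "a@example.com", "amount": 20.0},
    {"id": 2, "email": None, "amount": 22.5},
    {"id": 3, "email": "c@example.com", "amount": 19.0},
    {"id": 3, "email": "c@example.com", "amount": 19.0},   # exact duplicate
    {"id": 4, "email": "d@example.com", "amount": 950.0},  # suspicious value
    {"id": 5, "email": "e@example.com", "amount": 21.0},
]

def missing_counts(rows):
    """Count None values per field."""
    counts = Counter()
    for row in rows:
        for field, value in row.items():
            if value is None:
                counts[field] += 1
    return dict(counts)

def duplicate_rows(rows):
    """Return each row that appears more than once (compared on all fields)."""
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    return [dict(key) for key, n in seen.items() if n > 1]

def mad_outliers(values, threshold=5.0):
    """Flag values far from the median, measured in median absolute deviations."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [v for v in values if abs(v - med) / mad > threshold]

print(missing_counts(records))
print(duplicate_rows(records))
print(mad_outliers([r["amount"] for r in records]))
```

A flagged outlier is only a candidate: as noted above, someone still has to decide whether it is an error or a legitimate data point.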

1.5 Setting the Foundation for Improvements:

- Documentation: Document the findings of your assessment. This documentation will serve as a reference point for future data quality efforts.

- Prioritization: Prioritize issues based on their impact on business goals and outcomes. Not all issues may need immediate attention, so it’s crucial to focus on those that have the most significant impact.

- Communication: Communicate the findings and their implications to relevant stakeholders, including data owners, analysts, and decision-makers.

Step 2: Define Data Quality Rules: With a clear understanding of your data’s current state, the next step is to establish data quality rules that will guide subsequent cleaning and transformation processes. These rules serve as standards and criteria governing how data should look and behave. They draw on business logic, industry best practices, regulatory compliance, and user expectations. Rules for accuracy, completeness, consistency, and timeliness set precise expectations and objectives for data quality improvement efforts.

2.1 Business Logic and Standards:

- Understand Business Requirements: Align data quality rules with the specific needs and requirements of the business. This involves engaging with stakeholders to comprehend their expectations regarding data accuracy, completeness, and other relevant dimensions.

- Industry Best Practices: Leverage industry-specific best practices to establish rules that reflect the norms and standards of your sector. This ensures that your data quality efforts are in line with recognized benchmarks.

2.2 Regulatory Compliance (Compliance Requirements): Identify and incorporate data quality rules that align with regulatory frameworks governing your industry. Compliance standards often dictate specific requirements for data accuracy, privacy, and security.

2.3 User Expectations (User-Centric Rules): Consider the expectations and needs of end-users when defining data quality rules. This ensures that the data is not only technically accurate but also relevant and useful for those who rely on it.

2.4 Types of Data Quality Rules:

- Accuracy Rules: Specify criteria for the correctness of data. This may include rules for numeric accuracy, proper formatting, and adherence to defined standards.

- Completeness Rules: Define what a complete record looks like. This involves ensuring that all necessary fields are filled and that no critical information is missing.

- Consistency Rules: Set guidelines for maintaining uniformity across datasets. This may involve rules for standardizing units of measurement, date formats, or naming conventions.

- Timeliness Rules: Establish expectations for the freshness of data. Timeliness rules may include requirements for regular updates or real-time data integration, depending on the business context.

2.5 Quantifiable Metrics (Measurable Criteria): Ensure that each rule is accompanied by quantifiable metrics that can be used to assess compliance. For example, accuracy rules may have a tolerance level for acceptable errors, and completeness rules may specify the expected percentage of filled fields.
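
One way to make a rule measurable is to pair it with a threshold and compute the metric directly. The sketch below does this for completeness; the `CompletenessRule` class, the column names, and the thresholds (100% for email, 90% for phone) are hypothetical values picked for the example.

```python
from dataclasses import dataclass

@dataclass
class CompletenessRule:
    column: str
    min_ratio: float  # e.g. 0.9 means at least 90% of rows must be filled

def completeness(rows, column):
    """Fraction of rows in which `column` is present and non-empty.

    Assumes `rows` is a non-empty list of dicts.
    """
    filled = sum(1 for row in rows if row.get(column) not in (None, ""))
    return filled / len(rows)

def evaluate(rows, rules):
    """Return {column: (measured_ratio, passed)} for each rule."""
    results = {}
    for rule in rules:
        ratio = completeness(rows, rule.column)
        results[rule.column] = (ratio, ratio >= rule.min_ratio)
    return results

# Hypothetical customer records; one phone number is blank.
customers = [
    {"email": "a@example.com", "phone": "555-0100"},
    {"email": "b@example.com", "phone": ""},
    {"email": "c@example.com", "phone": "555-0102"},
    {"email": "d@example.com", "phone": "555-0103"},
]
rules = [CompletenessRule("email", 1.0), CompletenessRule("phone", 0.9)]
report = evaluate(customers, rules)
print(report)
```

Here the phone column is 75% complete and therefore fails its 90% threshold, while email passes.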

2.6 Documentation: Document each data quality rule comprehensively. This documentation should include the rationale behind the rule, the specific conditions it addresses, and any associated metrics for measurement.

2.7 Validation and Testing:

- Validation Procedures: Develop procedures for validating data against these rules. This involves creating testing scenarios and methodologies to systematically evaluate data quality compliance.

- Automated Checks: Consider implementing automated checks to continuously monitor data quality. Automation helps in promptly identifying and rectifying issues as they arise.

2.8 Iterative Process (Iterative Refinement): Recognize that defining data quality rules is not a one-time task. As business requirements evolve or new insights are gained, revisit and refine the rules to ensure they remain relevant and effective.

Step 3: Implement Data Quality Actions: Executing data quality actions is the crux of the workflow: this step corrects or prevents the data issues identified earlier. These actions involve tasks such as data cleansing, enrichment, standardization, deduplication, integration, and monitoring. Utilizing tools and techniques like data quality software, scripts, workflows, pipelines, or ETL processes helps automate and streamline these actions, ensuring consistency and reliability throughout the data lifecycle.

3.1 Data Cleansing:

- Identify and Correct Errors: Use data cleansing techniques to identify and rectify errors within the data. This could involve fixing inaccuracies, removing duplicate entries, and addressing inconsistencies.

- Normalization: Standardize data formats, units, and representations to ensure consistency. This may include converting dates to a uniform format, standardizing naming conventions, and normalizing data units.
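
Converting dates to a uniform format, for example, might look like the sketch below. The list of accepted input formats is an assumption; in practice it would come from the formats found during the assessment in Step 1.

```python
from datetime import datetime

# Assumed input formats, tried in order. Slash and dot dates are read day-first.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

def to_iso_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    return None

for raw in ["2024-03-05", "05/03/2024", " 5.3.2024 ", "not a date"]:
    print(repr(raw), "->", to_iso_date(raw))
```

Note that a value such as 05/03/2024 is ambiguous between day-first and month-first conventions. The sketch assumes day-first; the right choice depends on where the data comes from. Returning `None` for unparseable values keeps them visible for review instead of guessing.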

3.2 Data Enrichment:

- Augmenting Information: Enhance the existing dataset by incorporating additional relevant information. This could involve appending missing details, such as geolocation data, demographic information, or additional attributes that contribute to a more comprehensive dataset.

- External Data Sources: Leverage external data sources to supplement and enrich your existing data. This might involve integrating data from third-party providers to enhance the accuracy and depth of your information.

3.3 Standardization:

- Establishing Consistency: Implement rules and processes to standardize data elements. This ensures uniformity in how data is represented, making it easier to analyze and interpret.

- Normalization: Normalize data by eliminating redundancies and inconsistencies, especially in cases where data is sourced from different systems with varying standards.

3.4 Deduplication:

- Identify and Remove Duplicates: Employ deduplication techniques to identify and eliminate redundant records within the dataset. This helps maintain a clean and concise dataset for analysis.

- Matching Algorithms: Utilize matching algorithms to identify potential duplicate entries based on predefined criteria, such as similar names, addresses, or other key identifiers.
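
As one possible matching approach, the sketch below compares names pairwise with `difflib.SequenceMatcher` from the Python standard library and reports pairs above a similarity threshold. The 0.85 threshold and the sample names are assumptions, and pairwise comparison only suits small datasets; larger ones usually need blocking or a dedicated record-linkage tool.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalize(text):
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(text.lower().split())

def similarity(a, b):
    """Similarity ratio between 0.0 and 1.0 after normalization."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def likely_duplicates(names, threshold=0.85):
    """Return pairs of names whose similarity meets the assumed threshold."""
    return [(a, b) for a, b in combinations(names, 2)
            if similarity(a, b) >= threshold]

# Hypothetical customer names.
names = ["Jon Smith", "John Smith", "Alice Brown"]
print(likely_duplicates(names))
```

Pairs returned this way are candidates for review or merging, not confirmed duplicates.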

3.5 Integration:

- Combine Disparate Data Sources: Integrate data from various sources to create a unified and comprehensive dataset. This involves combining information from different databases, systems, or files to provide a holistic view of the data.

- ETL Processes: Use Extract, Transform, Load (ETL) processes to seamlessly move and transform data from source systems to target systems, ensuring data consistency and quality throughout the integration process.
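
To make the extract, transform, and load stages concrete, here is a small sketch that reads CSV text, cleans it, and loads it into an in-memory SQLite database. The `customers` table, its columns, and the rule that a name is required are all assumptions for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source data with stray spaces, mixed case, and a missing name.
RAW_CSV = "id,name,country\n1, Alice ,us\n2,Bob,US\n3,,de\n"

def extract(text):
    """Extract: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim names, upper-case country codes, skip rows without a name."""
    clean = []
    for row in rows:
        name = row["name"].strip()
        if not name:
            continue  # assumed completeness rule: name is required
        clean.append((int(row["id"]), name, row["country"].strip().upper()))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT id, name, country FROM customers ORDER BY id").fetchall()
print(loaded)
```

The sketch silently skips the incomplete row to stay short. A production pipeline would more likely write rejected rows somewhere they can be reviewed.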

3.6 Monitoring:

- Continuous Surveillance: Implement continuous monitoring mechanisms to proactively identify and address data quality issues. This involves setting up alerts or triggers based on predefined thresholds or rules.

- Automated Monitoring Tools: Utilize data quality software and tools that offer automated monitoring features. These tools can help in real-time detection of anomalies and deviations from established data quality standards.
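
A threshold-based alert can be as simple as comparing the latest metrics with agreed minimums. In the sketch below, the metric names and threshold values are hypothetical; in a real setup the alerts would be sent to a notification channel rather than printed.

```python
def check_thresholds(metrics, thresholds):
    """Return an alert message for every metric that is missing or too low."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: metric missing")
        elif value < minimum:
            alerts.append(f"{name}: {value:.2%} is below the {minimum:.2%} threshold")
    return alerts

# Hypothetical latest measurements and minimum acceptable values.
latest = {"completeness": 0.97, "uniqueness": 0.91}
minimums = {"completeness": 0.95, "uniqueness": 0.99, "validity": 0.90}

alerts = check_thresholds(latest, minimums)
for alert in alerts:
    print("ALERT:", alert)
```

Treating a missing metric as an alert is a deliberate choice here: a check that stops reporting is often as important as one that fails.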

3.7 Automation:

- Automated Workflows and Pipelines: Design and implement automated workflows and data pipelines to streamline data quality actions. Automation not only reduces manual effort but also ensures consistency and repeatability in the data quality processes.

- Scheduled Processes: Schedule regular data quality checks and actions to ensure that the data remains accurate and reliable over time.

3.8 Documentation and Reporting:

- Documentation of Changes: Document all changes made during the data quality actions. This documentation is valuable for auditing purposes and provides transparency into the evolution of the data.

- Reporting Mechanisms: Establish reporting mechanisms to communicate the outcomes of data quality actions to relevant stakeholders. This could include dashboards, reports, or notifications summarizing the improvements achieved.

Step 4: Evaluate Data Quality Results: After implementing data quality actions, the next crucial step is to evaluate the results achieved. This involves measuring and comparing the before and after states of the data, assessing their alignment with data quality rules and goals. Utilizing methods such as data quality dashboards, reports, charts, or KPIs aids in visualizing and communicating data quality outcomes and impacts, identifying gaps, and highlighting areas for improvement.

4.1 Before-and-After Comparison:

- Baseline Assessment: Compare the current state of the data with the baseline from the initial assessment in Step 1, that is, the key data quality metrics measured before any corrective actions were taken.

- Quantifiable Metrics: Utilize the quantifiable metrics associated with each data quality rule to assess the extent of improvement. This could include reduction in error rates, increased completeness percentages, or enhanced consistency scores.
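
A before-and-after comparison reduces to subtracting the baseline from the current value of each metric. The numbers below are invented purely to show the calculation.

```python
def compare_metrics(before, after):
    """Return the change (after minus before) for metrics present in both snapshots."""
    return {name: round(after[name] - before[name], 4)
            for name in before if name in after}

# Hypothetical snapshots: values are ratios between 0 and 1.
baseline = {"error_rate": 0.08, "completeness": 0.82}
current = {"error_rate": 0.03, "completeness": 0.95}

changes = compare_metrics(baseline, current)
for name, delta in changes.items():
    print(f"{name}: {delta:+.2%}")
```

Whether a change is an improvement depends on the metric: a negative change is good for an error rate and bad for completeness, so each metric should be read against its own rule.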

4.2 Data Quality Dashboards and Reports:

- Visualization Tools: Implement data quality dashboards and reporting tools to provide a visual representation of data quality metrics. Dashboards can include charts, graphs, and other visualizations that make it easy to interpret the impact of data quality efforts.

- Comparative Analysis: Use these tools to conduct a comparative analysis between the initial state and the post-improvement state. This allows stakeholders to quickly grasp the effectiveness of the data quality actions.

4.3 Key Performance Indicators (KPIs):

- Define Data Quality KPIs: Establish key performance indicators that align with overarching business goals. These KPIs may include metrics like data accuracy rates, completeness percentages, or adherence to specific standards.

- Monitoring KPIs: Regularly monitor and update these KPIs to track the ongoing performance of data quality initiatives. This provides a continuous feedback loop for improvement.

4.4 Impact Assessment:

- Business Impact: Evaluate the impact of improved data quality on business processes and decision-making. Assess whether the enhanced data quality has led to more accurate insights, reduced errors, or improved operational efficiency.

- User Feedback: Gather feedback from end-users and data consumers to understand how the changes in data quality have influenced their experiences and outcomes.

4.5 Identifying Gaps and Areas for Improvement:

- Gap Analysis: Conduct a gap analysis to identify any lingering data quality issues or areas that did not experience the expected improvements. This analysis helps in refining data quality strategies and addressing persistent challenges.

- Iterative Refinement: Recognize that data quality is an ongoing process. If gaps or shortcomings are identified, refine data quality rules, actions, or monitoring processes accordingly.

4.6 Communication and Documentation:

- Stakeholder Communication: Communicate the results of the data quality evaluation to relevant stakeholders. This includes data owners, analysts, decision-makers, and any other teams impacted by the changes.

- Documentation of Outcomes: Document the outcomes of the evaluation, including any lessons learned, unexpected challenges, and strategies for further improvement. This documentation serves as a valuable resource for future data quality initiatives.

4.7 Continuous Improvement:

- Feedback Loop: Establish a feedback loop where insights from the evaluation process are used to continuously improve data quality strategies and processes.

- Iterative Cycle: View data quality improvement as an iterative cycle, with regular assessments and refinements based on evolving business needs and data landscape changes.

4.8 Training and Awareness:

- Training Programs: If necessary, provide training programs for teams involved in data quality management. This ensures that the lessons learned from the evaluation process are incorporated into future data quality activities.

- Cultivate Awareness: Foster a culture of data quality awareness within the organization, emphasizing the importance of maintaining high-quality data for effective decision-making and business success.

Step 5: Review Data Quality Feedback: Because end-user perspectives matter, the fifth step is to review data quality feedback from stakeholders and data users. Channels such as surveys, interviews, focus groups, or reviews help collect opinions, comments, suggestions, or complaints. This qualitative data provides valuable insights into user needs, expectations, and satisfaction levels, and it reveals potential issues or opportunities for enhancement.

5.1 Establishing Feedback Channels:

- Surveys: Design and distribute surveys to gather structured feedback from end-users. Include questions about their overall satisfaction with data quality, specific pain points, and suggestions for improvement.

- Interviews: Conduct one-on-one or group interviews with key stakeholders and users to gather in-depth insights. This approach allows for more detailed and nuanced feedback.

- Focus Groups: Organize focus group sessions where a diverse set of users can share their experiences, discuss common challenges, and provide collective feedback.

- Reviews and Comments: Encourage users to provide feedback through reviews, comments, or dedicated platforms where they can express their thoughts about the quality of the data they are working with.

5.2 Feedback Content:

- Satisfaction Levels: Assess overall satisfaction levels by asking users to rate their experience with data quality. Understand what aspects of data quality are meeting expectations and where there might be gaps.

- Identifying Pain Points: Prompt users to identify specific pain points or challenges they face in working with the data. This could include issues with accuracy, completeness, timeliness, or usability.

- Suggestions for Improvement: Encourage users to provide constructive suggestions for improving data quality. This could involve recommendations for additional data sources, enhanced validation checks, or better documentation.

5.3 Anonymity and Transparency:

- Anonymous Feedback: Allow users to provide feedback anonymously to encourage honest and candid responses. This can be especially important if users are concerned about potential repercussions for expressing their opinions.

- Transparency: Clearly communicate the purpose of collecting feedback and how it will be used. Transparency fosters trust and encourages open communication.

5.4 Analysis of Feedback:

- Thematic Analysis: Analyze the feedback to identify common themes and patterns. This involves categorizing responses to understand the most prevalent issues, concerns, or positive aspects.

- Quantitative Analysis: If applicable, quantify feedback using metrics or scales to measure the frequency and intensity of specific responses. This adds a quantitative dimension to the qualitative feedback.
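
Once responses have been tagged with themes, counting them is straightforward. The sketch below assumes each response already carries a 1 to 5 rating and a list of theme tags assigned during the thematic analysis; the responses themselves are made up for illustration.

```python
from collections import Counter
from statistics import mean

# Hypothetical survey responses, already tagged by a reviewer.
feedback = [
    {"rating": 4, "themes": ["timeliness"]},
    {"rating": 2, "themes": ["accuracy", "documentation"]},
    {"rating": 3, "themes": ["accuracy"]},
    {"rating": 5, "themes": []},
]

# Frequency of each theme across all responses.
theme_counts = Counter(theme for item in feedback for theme in item["themes"])
# Average satisfaction rating.
average_rating = mean(item["rating"] for item in feedback)

print(theme_counts.most_common())
print(average_rating)
```

The most frequent themes are a natural starting point for the prioritization described in the next section.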

5.5 Prioritization of Issues:

- Priority Ranking: Prioritize feedback based on its impact on end-users and business objectives. Some issues may have a more significant impact on data quality and user satisfaction than others.

- Critical Issues: Identify critical issues that require immediate attention. These could be issues that directly impact decision-making, operational efficiency, or compliance.

5.6 Communication with Stakeholders:

- Feedback Acknowledgment: Acknowledge the feedback received and communicate that it is being taken seriously. This acknowledgment demonstrates a commitment to addressing user concerns and improving data quality.

- Updates on Actions Taken: Keep stakeholders informed about the actions taken in response to the feedback. Regular communication ensures transparency and helps manage expectations.

5.7 Iterative Improvement:

- Continuous Feedback Loop: Establish a continuous feedback loop where user feedback is regularly collected and used to refine data quality processes. This iterative approach ensures that data quality efforts remain aligned with user needs over time.

- Adaptive Strategies: Use feedback to adapt data quality strategies and initiatives based on evolving user expectations and changing business requirements.

5.8 Training and Education:

- User Training: If feedback highlights user challenges related to understanding or using data, consider implementing training programs to empower users with the necessary skills.

- Educational Initiatives: Launch educational initiatives to raise awareness among users about the importance of data quality and how they can contribute to maintaining it.

Step 6: Update Data Quality Workflows: The final step in the data quality workflow is a continuous improvement process. Based on the evaluation of results and user feedback, necessary changes and adjustments are made to data sources, rules, actions, methods, tools, or metrics. Techniques like data quality audits, reviews, tests, or documentation are employed to maintain and enhance data quality workflows, ensuring their ongoing relevance and effectiveness.

6.1 Evaluation of Results:

- Assessment of Outcomes: Begin by assessing the outcomes of the implemented data quality actions. Evaluate the impact of these actions on key data quality metrics and performance indicators.

- Comparison with Goals: Compare the achieved results with the initially set goals and objectives. Identify areas where improvements met expectations and areas that require further attention.

6.2 User Feedback Integration:

- Incorporate User Feedback: Integrate insights from user feedback into the evaluation process. User perspectives can highlight nuances and challenges that might not be captured solely through quantitative metrics.

- Identify Opportunities for Enhancement: Use user feedback to identify specific opportunities for enhancing data quality workflows. This could include adjustments to rules, additional validation checks, or improvements in data documentation.

6.3 Data Quality Audits:

- Regular Audits: Conduct regular data quality audits to systematically review the state of data quality. Audits involve a thorough examination of data quality processes, rules, and outcomes.

- Identify Deviations: Identify any deviations from established data quality standards or rules during the audit process. This helps in pinpointing areas that may need adjustment.

6.4 Documentation Review:

- Review Documentation: Revisit and review the documentation associated with data quality workflows. Ensure that it accurately reflects the current state of rules, actions, and processes.

- Update Guidelines: Update guidelines and procedural documentation to reflect any changes or improvements made based on the evaluation results and user feedback.

6.5 Testing and Validation:

- Validation Testing: Conduct validation testing to ensure that data quality rules and actions are still effective in identifying and correcting issues.

- Scenario-based Testing: Implement scenario-based testing to simulate real-world conditions and assess how well the data quality workflows perform under different circumstances.
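
Scenario-based testing can be sketched as a table of inputs with expected outcomes that is replayed against a rule whenever the rule changes. The rule below (a percentage must be a number from 0 to 100) and the scenarios are hypothetical examples.

```python
def is_valid_percentage(value):
    """Example rule: a percentage must be a number between 0 and 100 inclusive."""
    is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
    return is_number and 0 <= value <= 100

# Each scenario: (description, input value, expected rule result).
SCENARIOS = [
    ("typical value", 42.5, True),
    ("lower boundary", 0, True),
    ("upper boundary", 100, True),
    ("negative value", -1, False),
    ("text instead of number", "50", False),
    ("missing value", None, False),
]

def run_scenarios(rule, scenarios):
    """Return the descriptions of scenarios where the rule disagrees with the expectation."""
    return [name for name, value, expected in scenarios if rule(value) != expected]

failures = run_scenarios(is_valid_percentage, SCENARIOS)
print("failed scenarios:", failures)
```

An empty list means the rule still behaves as expected. Adding a new scenario each time a real-world issue slips through keeps the table representative.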

6.6 Tools and Technology:

- Technology Upgrades: Explore opportunities to upgrade or optimize the tools and technologies used in data quality workflows. New features or capabilities in data quality software, automation tools, or other technologies may offer enhanced functionality.

- Integration with Emerging Technologies: Consider integrating emerging technologies, such as machine learning or artificial intelligence, into data quality workflows to automate and improve decision-making processes.

6.7 Metrics Refinement: Refine the metrics used to measure data quality. If certain metrics did not adequately capture the nuances of data quality, consider introducing new metrics or adjusting existing ones for a more accurate representation.

6.8 Collaboration and Communication:

- Stakeholder Collaboration: Engage with stakeholders, including data owners, analysts, and end-users, to gather insights and collaborate on improvements. Leverage the collective expertise of the teams involved in data quality management.

- Communication of Changes: Clearly communicate any changes or updates to data quality workflows to all relevant stakeholders. Transparency in the update process fosters understanding and buy-in from the involved parties.

6.9 Training and Skill Development:

- Skill Enhancement: If the evaluation reveals gaps in the skills or knowledge of the teams involved in data quality management, consider implementing training programs to enhance their capabilities.

- Continuous Learning Culture: Foster a culture of continuous learning and improvement within the organization. Encourage teams to stay informed about new developments in data quality practices and technologies.

6.10 Iterative Improvement Cycle: Recognize that data quality improvement is an ongoing, iterative process. Regularly revisit and iterate on data quality workflows based on evolving business requirements, technological advancements, and changing data landscapes.

In conclusion, mastering data quality is an ongoing journey that requires a systematic and comprehensive approach. By following these six steps in your data quality workflows, you can establish a robust foundation for accurate, complete, consistent, and timely data, ultimately supporting informed decision-making and driving organizational success in the data-driven era.

Assistant Teacher at Zinzira Pir Mohammad Pilot School and College