How Data Engineers Turn Data Science into Useful Systems:

In the era of big data, data science and data engineering work hand in hand to turn raw information into actionable insights. Data scientists extract patterns and build predictive models from data, while data engineers turn that work into robust, scalable systems that run reliably in production. In the rest of this article, we will explore how data engineers turn data science into useful systems.

Section 1: Understanding the Roles:

1.1 Data Scientists: Unraveling Patterns: Data science is an interdisciplinary field that leverages scientific methods, processes, algorithms, and systems to extract meaningful insights and knowledge from structured and unstructured data. At its core, the role of a data scientist is to uncover hidden patterns, trends, and correlations within vast datasets. This involves employing a combination of statistical analysis, machine learning, and domain expertise to derive actionable intelligence.

1.1.1 Responsibilities of Data Scientists:

- Data Exploration: Data scientists initiate the process by thoroughly exploring and understanding the dataset. This involves identifying potential variables, assessing data quality, and addressing missing values.

- Statistical Modeling: Employing statistical techniques, data scientists formulate hypotheses and build models to explain observed phenomena. This step is crucial for making predictions or uncovering relationships within the data.

- Machine Learning: Data scientists use machine learning algorithms to learn complex patterns and make predictions. This involves training models on historical data, then applying them to new, unseen data and evaluating how accurately they predict outcomes.
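The "train on historical data, apply to new data" step in the list above can be illustrated with a minimal sketch. It fits a simple linear regression by ordinary least squares on toy numbers, a technique chosen here only for illustration; in practice data scientists would typically use a library such as scikit-learn or statsmodels, and the function names below are hypothetical.

```python
from statistics import mean


def fit_simple_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    x_bar, y_bar = mean(xs), mean(ys)
    # OLS slope = sum of cross-deviations / sum of squared x-deviations.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(model: tuple[float, float], xs: list[float]) -> list[float]:
    """Apply a fitted (slope, intercept) model to new inputs."""
    slope, intercept = model
    return [slope * x + intercept for x in xs]


# Toy "historical" data, for illustration only.
history_x = [1.0, 2.0, 3.0, 4.0]
history_y = [3.0, 5.0, 7.0, 9.0]
model = fit_simple_linear_regression(history_x, history_y)
print(model)                       # (2.0, 1.0)
print(predict(model, [5.0, 6.0]))  # [11.0, 13.0]
```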

1.1.2 Challenges in Translating Insights into Tangible Systems: While data scientists excel at unraveling intricate patterns, their work often ends with insights or prototype models rather than running systems. One of the primary challenges they face is the transition from these insights to practical, implementable solutions. Data scientists may lack expertise in engineering the scalable, efficient systems needed to integrate their models into real-world applications.

Furthermore, models that prove valuable must be deployed into production environments, and because data science is iterative, they are retrained and redeployed as data and requirements change. This introduces challenges related to scalability, performance optimization, and ensuring that the models operate reliably in real time.

1.2 Data Engineers: Architecting Solutions: Data engineers play a complementary role to data scientists, focusing on the development, construction, testing, and maintenance of the architecture that turns raw data into a form from which actionable insights can be drawn. Their responsibilities span the entire data lifecycle, from collection and storage to processing and delivery.

1.2.1 Responsibilities of Data Engineers:

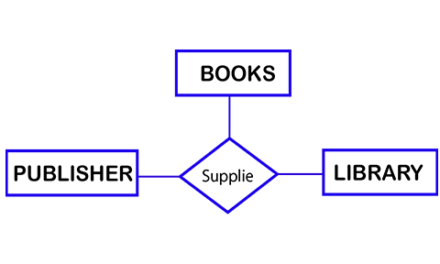

- Data Collection and Storage: Data engineers design and implement systems that acquire data efficiently from diverse sources and store it in an organized, queryable form. This involves choosing storage architectures such as relational databases or data lakes and ensuring data integrity.

- Data Processing and Transformation: The ETL (Extract, Transform, Load) process is a fundamental aspect of data engineering. Engineers work on transforming raw data into a usable format, making it accessible for analysis and modeling by data scientists.

- Infrastructure Design: Building scalable and reliable infrastructure is crucial for handling large volumes of data. Data engineers leverage various technologies and frameworks to create systems that can process and store data efficiently.

1.2.2 Challenges in Transforming Raw Insights into Functional Systems: Data engineers face challenges related to the complexity of data sources, changing data formats, and the need for real-time processing. Additionally, integrating the machine learning models developed by data scientists into production systems requires close collaboration between the two roles. This collaboration ensures that the models are integrated correctly, operate at scale, and adhere to industry standards.

Section 2: Building the Foundation:

2.1 Data Collection and Storage:

2.1.1 Importance of Efficient Data Collection: Efficient data collection is the cornerstone of any successful data science endeavor. Data engineers are tasked with designing and implementing systems that can gather data from diverse sources, such as databases, APIs, streaming platforms, and more. The challenge lies in not only collecting vast amounts of data but also ensuring its relevance and quality. This process involves considerations of data granularity, frequency, and the development of mechanisms to handle real-time data streams.
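One way to keep collection efficient is incremental extraction: instead of re-reading a whole source on every run, the pipeline remembers a high-watermark (here, the latest `updated_at` it has seen) and fetches only newer records. This is a minimal sketch with an in-memory list standing in for the source; `fetch_since`, `incremental_collect`, and the record fields are hypothetical.

```python
from datetime import datetime


def fetch_since(source: list[dict], watermark: datetime) -> list[dict]:
    """Stand-in for a source query such as `WHERE updated_at > :watermark`."""
    return [r for r in source if r["updated_at"] > watermark]


def incremental_collect(source: list[dict], state: dict) -> list[dict]:
    """Pull only records changed since the last run, then advance the stored watermark."""
    new_rows = fetch_since(source, state["watermark"])
    if new_rows:
        state["watermark"] = max(r["updated_at"] for r in new_rows)
    return new_rows


source = [
    {"id": 1, "updated_at": datetime(2024, 3, 1, 9, 0)},
    {"id": 2, "updated_at": datetime(2024, 3, 1, 9, 5)},
]
state = {"watermark": datetime.min}  # first run: collect everything
print([r["id"] for r in incremental_collect(source, state)])  # [1, 2]
source.append({"id": 3, "updated_at": datetime(2024, 3, 1, 9, 10)})
print([r["id"] for r in incremental_collect(source, state)])  # [3]
print(incremental_collect(source, state))                     # []
```

A strict greater-than comparison can miss records that arrive late with an older timestamp, so real pipelines often re-read a small overlap window or use change data capture instead.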

2.1.2 The Role of Data Engineers in Designing Data Storage Solutions: Once data is collected, it needs to be stored in a manner that allows for easy retrieval, analysis, and integration into downstream processes. Data engineers design and implement data storage solutions, ranging from traditional relational databases to distributed and scalable data lakes. The choice of storage technology depends on factors such as data volume, velocity, and the specific requirements of the data science and business teams.

2.1.3 Ensuring Data Quality and Integrity: Data quality is paramount in the data science pipeline, because analyses and models are only as reliable as the data they are built on. Data engineers safeguard quality and integrity through data cleaning, validation, and the establishment of data governance practices. By addressing issues such as missing values, outliers, and inconsistencies, data engineers create a reliable foundation upon which data scientists can build their analyses and models.
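The checks described here (missing values, outliers, inconsistent values) can be expressed as simple validation rules that run before data is published. This is a minimal sketch; the column names, bounds, and allowed values are hypothetical, and teams often use dedicated validation frameworks for this in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    row: int
    column: str
    problem: str


def validate_rows(rows, required, ranges, allowed_values):
    """Return every data-quality issue found; an empty list means the batch passed.

    required:       columns that must be present and non-empty
    ranges:         {column: (min, max)} plausibility bounds for numeric columns
    allowed_values: {column: set_of_valid_values} for categorical columns
    """
    issues = []
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) in (None, ""):
                issues.append(Issue(i, col, "missing value"))
        for col, (lo, hi) in ranges.items():
            value = row.get(col)
            if isinstance(value, (int, float)) and not lo <= value <= hi:
                issues.append(Issue(i, col, f"outlier: {value} outside [{lo}, {hi}]"))
        for col, valid in allowed_values.items():
            value = row.get(col)
            if value not in (None, "") and value not in valid:
                issues.append(Issue(i, col, f"inconsistent value: {value!r}"))
    return issues


batch = [
    {"customer_id": "c1", "age": 34, "country": "BD"},
    {"customer_id": "", "age": 212, "country": "BD"},
    {"customer_id": "c3", "age": 41, "country": "Bangladesh"},
]
for issue in validate_rows(
    batch,
    required=["customer_id"],
    ranges={"age": (0, 120)},
    allowed_values={"country": {"BD", "IN", "US"}},
):
    print(issue)
```

Returning a list of issues, rather than raising on the first one, lets a pipeline decide whether to quarantine the bad rows or block the whole batch.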

2.1.4 Collaborative Efforts between Data Scientists and Engineers: The collaboration between data scientists and data engineers in the realm of data collection and storage is crucial for success. Data scientists provide insights into the specific data attributes required for their analyses, while data engineers implement the systems that collect, store, and make this data accessible. Regular communication between the two roles ensures that the data collected aligns with the analytical needs, preventing bottlenecks and discrepancies later in the data science workflow.

2.2 Data Processing and Transformation:

2.2.1 Introduction to ETL Processes: The ETL (Extract, Transform, Load) process is a fundamental component of data engineering, serving as the bridge between raw data and actionable insights. Data engineers design ETL pipelines to extract data from source systems, transform it into a suitable format, and load it into the target storage or analytical environment. This process ensures that data is cleansed, standardized, and ready for analysis by data scientists.
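The three ETL stages can be sketched end to end with Python's standard library: an in-memory CSV stands in for the source system, and an in-memory SQLite database stands in for the target. The table and column names are illustrative assumptions.

```python
import csv
import io
import sqlite3

# Extract source: a real pipeline would read from a database, API, or file store.
RAW_CSV = """order_id,amount,currency,order_date
1, 19.99 ,usd,2024-01-05
2,5.00,USD,2024-01-06
3,,USD,2024-01-06
"""


def extract(text: str) -> list[dict]:
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:  # cleanse: drop rows missing a required value
            continue
        cleaned.append((
            int(row["order_id"]),
            round(float(amount), 2),          # standardize the numeric type
            row["currency"].strip().upper(),  # standardize currency codes
            row["order_date"],
        ))
    return cleaned


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT, order_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

`INSERT OR REPLACE` keyed on `order_id` makes the load idempotent, so rerunning the pipeline after a failure does not duplicate rows.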

2.2.2 Tools and Technologies for Data Processing: A wide range of tools is available for data processing, and data engineers play a key role in selecting the right ones for the task at hand. Batch processing frameworks like Apache Hadoop and Apache Spark are commonly used for handling large volumes of data, while Apache Kafka is widely used to carry real-time event streams that stream processors (such as Kafka Streams, Apache Flink, or Spark's streaming engine) consume and transform. The choice of tools depends on factors such as data volume, latency requirements, and the complexity of transformations.

2.2.3 Collaborative Efforts between Data Scientists and Engineers in Data Transformation: Data scientists and engineers collaborate closely during the data transformation phase. While data scientists provide the logic and rules for transforming raw data into meaningful features, data engineers implement these transformations at scale. This collaborative effort ensures that the transformed data aligns with the analytical goals, and any adjustments needed during the iterative data science process are seamlessly integrated into the ETL pipelines.
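This division of labor can be sketched under assumed names: the data scientist's feature logic lives in a registry of pure functions (`FEATURES`, with hypothetical feature names), and the engineer's `build_features` applies those same functions lazily in fixed-size chunks. A production pipeline would more likely run the logic in a distributed engine, but the separation of concerns is the same.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator

# Feature logic as a data scientist might specify it: pure functions of one raw record.
# "weekday" follows Python's date.weekday() convention (Monday=0, Sunday=6).
FEATURES: dict[str, Callable[[dict], float]] = {
    "basket_size": lambda r: float(len(r["items"])),
    "avg_item_price": lambda r: sum(r["items"]) / len(r["items"]) if r["items"] else 0.0,
    "is_weekend": lambda r: 1.0 if r["weekday"] >= 5 else 0.0,
}


def build_features(records: Iterable[dict], chunk_size: int = 10_000) -> Iterator[list[dict]]:
    """Engineering side: apply the same feature functions lazily, chunk by chunk,
    so memory stays bounded however large the input is."""
    it = iter(records)
    while chunk := list(islice(it, chunk_size)):
        yield [{"id": r["id"], **{name: fn(r) for name, fn in FEATURES.items()}} for r in chunk]


raw = [
    {"id": 1, "items": [10.0, 30.0], "weekday": 6},
    {"id": 2, "items": [], "weekday": 2},
    {"id": 3, "items": [5.0], "weekday": 4},
]
for chunk in build_features(raw, chunk_size=2):
    print(chunk)
```

Keeping feature definitions as pure functions of one record means the same code can serve both model training and live scoring, which helps avoid training/serving skew.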

Section 3: Integration of Machine Learning Models:

3.1 Model Deployment Challenges:

3.1.1 The Transition from Experimental Models to Production-Ready Systems: Data scientists excel in developing experimental models that showcase the predictive power of algorithms. However, deploying these models into real-world production environments poses a unique set of challenges. Data engineers play a pivotal role in transitioning these models from experimentation to systems that can handle the complexities of continuous, real-time operation.

3.1.2 Challenges in Deploying Machine Learning Models at Scale: Scaling machine learning models to meet the demands of production environments involves considerations beyond algorithmic accuracy. Data engineers face challenges related to resource optimization, model monitoring, and maintaining the integrity of predictions over time. The deployment process requires collaboration between data scientists, who understand the intricacies of the models, and data engineers, who are responsible for integrating them into scalable and efficient systems.
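Monitoring the integrity of predictions over time often starts with drift detection: checking whether live inputs or scores still resemble what the model saw during training. The sketch below uses the Population Stability Index (PSI), one common drift measure chosen here for illustration; the bin count, the `eps` floor, and the 0.25 alert level (a frequently quoted rule of thumb, not a standard) are assumptions.

```python
import math
from bisect import bisect_right


def psi(baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` measured against `baseline`.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the
    shares of baseline and current values falling in bin i.
    """
    lo, hi = min(baseline), max(baseline)
    if lo == hi:
        raise ValueError("baseline values have no spread")
    # Equal-width interior edges over the baseline range; values outside it land in the end bins.
    edges = [lo + (hi - lo) * k / bins for k in range(1, bins)]

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Illustrative data: model scores seen at training time vs. two live windows.
training_scores = [i / 100 for i in range(100)]
live_stable = list(training_scores)
live_shifted = [min(s + 0.3, 0.99) for s in training_scores]

print(psi(training_scores, live_stable))          # 0.0
print(psi(training_scores, live_shifted) > 0.25)  # True
```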

3.1.3 The Need for Collaboration between Data Scientists and Engineers in Model Deployment: Successful model deployment is contingent on a seamless collaboration between data scientists and engineers. Data scientists provide insights into the nuances of the models they have developed, including considerations for input data formats, model dependencies, and potential issues that may arise in real-world scenarios. Data engineers, on the other hand, leverage their expertise in system architecture and software engineering to integrate these models into the broader technology stack.
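One way to capture the input data formats a data scientist documents is to make them an explicit, enforced contract at the serving boundary. The sketch below is hypothetical: `DeployedModel`, `churn_score`, and the field names are illustrative, and real deployments would typically rely on a serving framework and a schema library. It shows the idea of validating inputs before scoring and tagging every prediction with a model version.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DeployedModel:
    """Hypothetical serving wrapper that enforces the model authors' input contract."""

    name: str
    version: str
    predict_fn: Callable[[dict], float]
    input_schema: dict[str, type] = field(default_factory=dict)

    def predict(self, payload: dict) -> dict:
        # Reject requests that do not match the agreed input format before the model sees them.
        missing = [key for key in self.input_schema if key not in payload]
        if missing:
            raise ValueError(f"missing fields: {missing}")
        for key, expected in self.input_schema.items():
            if not isinstance(payload[key], expected):
                raise TypeError(
                    f"{key!r} should be {expected.__name__}, got {type(payload[key]).__name__}"
                )
        # Returning the version with each score makes every prediction traceable to a model build.
        return {"model": self.name, "version": self.version, "score": self.predict_fn(payload)}


def churn_score(payload: dict) -> float:
    """Stand-in for a trained model handed over by the data science team."""
    return min(1.0, 0.1 * payload["support_tickets"])


model = DeployedModel(
    name="churn",
    version="1.3.0",
    predict_fn=churn_score,
    input_schema={"tenure_months": int, "support_tickets": int},
)
print(model.predict({"tenure_months": 18, "support_tickets": 4}))
```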

3.2 Scalability and Performance Optimization:

3.2.1 Techniques for Optimizing Machine Learning Models for Scalability: Scalability is a critical factor when deploying machine learning models in production. Data engineers employ various techniques to ensure that models can handle increasing workloads. This may involve parallelizing computations, utilizing distributed computing frameworks, and optimizing algorithms for efficiency. The goal is to ensure that the models can scale horizontally to accommodate growing data volumes and user demands.
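Parallelizing batch scoring usually follows a partition, map, and combine pattern, which distributed frameworks apply across many machines. Below is a single-machine sketch using Python's `concurrent.futures`; `score_partition` is a placeholder for a real model. Threads keep the example simple and portable, and they help when the model releases the GIL (as NumPy-based code often does) or waits on I/O. Pure-Python, CPU-bound scoring would need `ProcessPoolExecutor` instead.

```python
from concurrent.futures import ThreadPoolExecutor


def score_partition(rows: list[float]) -> list[float]:
    # Placeholder model; a real one would be loaded once per worker, not per call.
    return [2.0 * x + 1.0 for x in rows]


def partition(data: list, n_parts: int) -> list[list]:
    """Split data into n_parts contiguous, roughly equal chunks (order preserved)."""
    size, extra = divmod(len(data), n_parts)
    parts, start = [], 0
    for i in range(n_parts):
        end = start + size + (1 if i < extra else 0)
        parts.append(data[start:end])
        start = end
    return parts


def parallel_predict(data: list[float], workers: int = 4) -> list[float]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so flattening them preserves row order.
        return [y for part in pool.map(score_partition, partition(data, workers)) for y in part]


print(parallel_predict([0.0, 1.0, 2.0, 3.0, 4.0], workers=2))  # [1.0, 3.0, 5.0, 7.0, 9.0]
```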

3.2.2 The Role of Data Engineers in Building Systems that Handle Large-Scale Data Processing: Large-scale data processing is inherent in deploying machine learning models, especially in scenarios where real-time predictions or batch processing of massive datasets is required. Data engineers design and implement systems that can handle the computational and storage demands of these processes. This may involve the use of cloud-based infrastructure, containerization, and other technologies that enable elastic scaling based on demand.
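Elastic scaling is often driven by a simple proportional rule. The Kubernetes Horizontal Pod Autoscaler, for instance, documents its core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that rule in Python; the function name, parameter names, and min/max defaults are illustrative assumptions, not the Kubernetes API.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Replica count from the proportional scaling rule used by the Kubernetes HPA."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```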

3.2.3 Real-World Examples of Successful Collaboration between Data Scientists and Engineers in Scaling Models: Case studies from industries such as finance, healthcare, and e-commerce illustrate how a collaborative approach has enabled models to be implemented at scale, leading to better decision-making and improved business outcomes.

Section 4: Implementing Real-Time Analytics:

4.1 Real-Time Data Processing:

4.1.1 The Importance of Real-Time Analytics: In today’s fast-paced business environment, the need for real-time insights has become increasingly crucial. Real-time analytics enables organizations to make informed decisions as events happen, respond rapidly to changing conditions, and gain a competitive edge. Data engineers play a pivotal role in establishing the infrastructure necessary for processing and analyzing data in real time.

4.1.2 Tools and Technologies for Real-Time Data Processing: Several tools and technologies facilitate real-time data processing. Streaming technologies such as Apache Kafka, Apache Flink, and Apache Storm enable data to be processed as it arrives. They support continuous analysis of data streams, making it possible to derive insights in real time. Data engineers evaluate the requirements of the use case and choose the most suitable tools to build scalable and efficient real-time data processing systems.

4.1.3 Data Engineering Strategies for Handling Real-Time Data Streams: Handling real-time data streams requires a different set of strategies compared to batch processing. Data engineers design systems that can ingest, process, and analyze data on the fly, ensuring low latency and high throughput. Techniques such as micro-batching and event-driven architectures are employed to handle continuous streams of data. Collaborating with data scientists, data engineers integrate real-time analytics into the broader data architecture, creating a seamless flow from data ingestion to actionable insights.
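Micro-batching can be sketched in a few lines: events are grouped into small batches that are released when they reach a size limit or when a time limit has passed, trading a little latency for better throughput. This is a simplified, hypothetical helper (`micro_batches` is not a library function); engines such as Spark Structured Streaming implement the idea with far more machinery, including checkpointing and fault recovery.

```python
import time
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def micro_batches(events: Iterable[T], max_size: int, max_wait_s: float) -> Iterator[list[T]]:
    """Group a stream of events into small batches.

    A batch is emitted once it holds max_size events, or once max_wait_s seconds have
    passed since its first event. Simplification: the time limit is only checked when
    an event arrives, so a quiet stream holds its partial batch until the next event
    or the end of the stream.
    """
    batch: list[T] = []
    started = 0.0
    for event in events:
        if not batch:
            started = time.monotonic()
        batch.append(event)
        if len(batch) >= max_size or time.monotonic() - started >= max_wait_s:
            yield batch
            batch = []
    if batch:  # flush whatever is left when the stream ends
        yield batch


for batch in micro_batches(range(7), max_size=3, max_wait_s=60.0):
    print(batch)
```

With a time limit that is long relative to how quickly events arrive, this prints `[0, 1, 2]`, `[3, 4, 5]`, and `[6]`; a shorter `max_wait_s` releases smaller batches sooner when traffic is slow.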

4.2 Collaborative Approach to Real-Time Insights:

4.2.1 Integrating Real-Time Insights into Decision-Making Processes: Real-time insights are most valuable when they are seamlessly integrated into the decision-making processes of an organization. Data scientists and engineers collaborate to ensure that the models and analyses developed for real-time scenarios align with the decision needs of stakeholders. This collaborative approach requires a deep understanding of the business context, the specific requirements of decision-makers, and the technical constraints of real-time data processing.

4.2.2 Examples of Successful Implementation of Real-Time Analytics: Real-world implementations offer useful lessons. Industries such as retail, telecommunications, and cybersecurity provide instances where integrating real-time insights improved operational efficiency, enhanced customer experiences, and enabled proactive decision-making. These examples highlight the impact of collaboration between data scientists and engineers in implementing practical real-time solutions.

4.2.3 Challenges and Solutions in Building Systems Supporting Real-Time Data Analysis: Despite the advantages of real-time analytics, challenges such as data consistency, processing bottlenecks, and system reliability need to be addressed. Data engineers work closely with data scientists to identify and mitigate these challenges. They implement fault-tolerant systems, design efficient algorithms for real-time processing, and ensure that the insights generated are accurate and timely. This collaborative effort is essential for building robust systems that support real-time data analysis in diverse industry contexts.
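One building block of fault tolerance is retrying transient failures (a briefly unavailable broker or database, for example) with exponential backoff. This is a minimal sketch, assuming the operation signals transient errors with `ConnectionError` or `TimeoutError`; the `retry` helper and its defaults are illustrative, not a specific library's API.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    operation: Callable[[], T],
    attempts: int = 5,
    base_delay_s: float = 0.1,
    max_delay_s: float = 5.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call operation(), retrying transient failures with capped exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on:
            if attempt == attempts - 1:
                # Out of attempts: surface the error so the caller can alert or park the work item.
                raise
            # The delay ceiling doubles each attempt (0.1 s, 0.2 s, 0.4 s, ...) up to max_delay_s.
            ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)
            # Full jitter: a random sleep below the ceiling keeps many clients from retrying in lockstep.
            time.sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")


calls = {"count": 0}


def flaky_write() -> str:
    """Simulated sink that is unavailable for the first two calls."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("sink temporarily unavailable")
    return "written"


print(retry(flaky_write, base_delay_s=0.01), calls["count"])  # written 3
```

Retries are only safe for idempotent operations (or ones protected by an idempotency key); otherwise a retried write can be applied twice.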

Section 5: Ensuring Data Security and Compliance:

5.1 Data Governance and Compliance:

5.1.1 The Significance of Data Governance in Data Science and Engineering: Data governance forms the backbone of responsible and effective data management. Data engineers and data scientists collaborate to establish robust data governance practices that encompass data quality, privacy, security, and compliance. Data governance ensures that data is treated as a valuable organizational asset and is handled in a manner consistent with regulatory requirements and internal policies.

5.1.2 Challenges in Ensuring Data Security and Compliance with Regulations: Data security and compliance with regulations such as GDPR, HIPAA, or industry-specific standards are critical considerations in data science and engineering. Data engineers are tasked with implementing security measures to protect sensitive information, such as enforcing access controls and applying encryption where necessary. Additionally, they collaborate with legal and compliance teams to navigate the complex landscape of data regulations and implement solutions that align with these requirements.
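Besides access control and encryption, a common safeguard is pseudonymizing direct identifiers before data reaches analysts. The sketch below replaces sensitive fields with keyed HMAC-SHA256 tokens using Python's standard library; the field names and key are hypothetical, and in practice the key would come from a secrets manager. Under GDPR, pseudonymized data is generally still personal data, so this reduces risk rather than removing compliance obligations.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token (64 hex characters).

    The token is one-way, and without the key an attacker cannot recompute tokens
    to match guessed values (for example, a list of known email addresses).
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_record(record: dict, sensitive_fields: set[str], key: bytes) -> dict:
    """Return a copy of record with only the sensitive fields tokenized."""
    return {k: pseudonymize(str(v), key) if k in sensitive_fields else v for k, v in record.items()}


KEY = b"demo-key-load-from-a-secrets-manager"  # hypothetical; never hard-code real keys
row = {"email": "rahim@example.com", "age": 34}
safe = protect_record(row, {"email"}, KEY)
print(safe)
```

Because tokens are deterministic for a given key, datasets pseudonymized with the same key can still be joined on the tokenized column; rotating or destroying the key breaks that link.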

5.1.3 Collaboration between Data Engineers and Data Scientists to Meet Compliance Requirements: Data scientists contribute to compliance efforts by understanding the legal and ethical implications of their analyses. Collaborating with data engineers, they ensure that the models and systems developed adhere to the principles of data governance and compliance. This collaboration is essential for creating a data environment where the ethical use of data is not an afterthought but an integral part of the entire data lifecycle.

5.2 Ethical Considerations in Data Handling:

5.2.1 Addressing Ethical Concerns Related to Data Collection and Processing: The rapid growth of data-driven technologies has raised ethical concerns regarding the collection, handling, and use of data. Data scientists and engineers engage in discussions on the ethical implications of their work, particularly concerning issues such as bias, fairness, and privacy. This involves acknowledging the potential societal impact of their analyses and making conscious decisions to mitigate any unintended consequences.

5.2.2 The Role of Data Engineers in Building Ethical Data Systems: Data engineers play a critical role in building systems that adhere to ethical standards. This involves implementing mechanisms to detect and mitigate bias in algorithms, ensuring that privacy is maintained, and providing transparency in data processing. By incorporating ethical considerations into the design and implementation of data systems, data engineers contribute to the creation of responsible and trustworthy data environments.
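Detecting bias starts with measuring it. One simple metric is the demographic parity difference: the gap between groups in the rate of positive predictions. The sketch below computes it from scratch on made-up loan-approval predictions. It is only one of several fairness definitions, which can conflict with one another, and what gap counts as acceptable is a policy decision rather than a technical one; libraries such as Fairlearn provide more complete tooling.

```python
from collections import defaultdict


def positive_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up approvals (1 = approved) for applicants in two hypothetical groups.
approved = [1, 1, 0, 1, 1, 0, 0, 0]
group = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(approved, group))                 # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(approved, group))  # 0.5
```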

5.2.3 Strategies for Maintaining Transparency and Accountability in Data-Driven Processes: Transparency is key to building trust in data-driven processes. Data engineers work alongside data scientists to implement strategies for maintaining transparency, such as documenting data sources, model architectures, and decision-making processes. Additionally, they establish accountability mechanisms to track and trace the impact of data-driven decisions, allowing organizations to address issues promptly and responsibly.
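Documenting data sources and tracing decisions can be made concrete with lineage records: an append-only log entry that ties each output to content hashes of its inputs, the model version, and its parameters. This is a hypothetical minimal format (`lineage_record`, `append_to_log`, and the example names are illustrative); dedicated lineage and metadata tools exist for production use.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def lineage_record(output_name: str, sources: dict[str, bytes], model_version: str, params: dict) -> dict:
    """Build an audit entry tying an output to the exact inputs and model that produced it."""
    return {
        "output": output_name,
        "created_at": datetime.now(timezone.utc).isoformat(),
        # Content hashes let auditors confirm later that the inputs were not altered.
        "sources": {name: hashlib.sha256(data).hexdigest() for name, data in sources.items()},
        "model_version": model_version,
        "params": params,
    }


def append_to_log(path: str, record: dict) -> None:
    # Append-only JSON Lines: one entry per line; existing entries are never rewritten.
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")


entry = lineage_record(
    "credit_decisions_june",                        # hypothetical output name
    {"applications.csv": b"id,income\n1,52000\n"},  # stand-in for real source bytes
    model_version="credit-model 2.1.0",
    params={"approval_threshold": 0.7},
)
log_path = os.path.join(tempfile.gettempdir(), "lineage_demo.jsonl")
append_to_log(log_path, entry)
print(json.dumps(entry, indent=2))
```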

In conclusion, the symbiotic relationship between data scientists and data engineers is indispensable in turning data science into functional systems. From building the foundational infrastructure to deploying machine learning models at scale, data engineers play a crucial role in transforming insights into actionable solutions. As we navigate the evolving landscape of big data, the collaboration between these two roles becomes increasingly vital, ensuring that the full potential of data science is harnessed for the benefit of businesses and society as a whole.

Library Lecturer at Nurul Amin Degree College