Role of bibliometrics in institutional evaluation

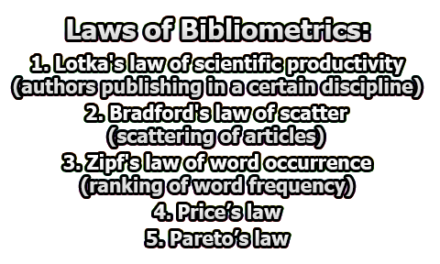

The term “bibliometrics” was first introduced in 1969 as a substitute for “statistical bibliography”, the name used until then for the field concerned with applying mathematical models and statistics to study and quantify the process of written communication. “Evaluative bibliometrics” is a term coined in the 1970s to denote the use of bibliometric techniques, especially publication and citation analysis, in the assessment of scientific activity. The rest of this article examines the role of bibliometrics in institutional evaluation.

In many countries, stagnating expenditure on higher education, coupled with a growing intake of students in many universities, limits the possibilities for research funding. Furthermore, a growing culture of accountability in research environments is forcing scientists and teachers to become more and more productive. Funds are assigned according to performance. Research evaluation and research excellence are bywords in today’s academic climate. Traditionally, the assessment of scientific research has been limited to peer review during the grant-awarding process or during evaluations for promotion or tenure. At present, bibliometric techniques are increasingly used as an intrinsic component of a wide range of evaluation exercises.

The ability of publication and citation analysis to encompass different levels of aggregation makes it a technique ideally suited to national and institutional studies. Nonetheless, literature-based indicators are appropriate only for institutional settings that reward publication and only for those activities that produce written knowledge. Their main theoretical constraint is that the role of written knowledge is influenced by cultural and socio-economic aspects, as well as by cognitive determinants that vary between fields of science and between institutional settings. Some institutions, for example, use their own internal reward structures to recompense behavior that reinforces the reward system of the international scientific community. Others may set their own standards and goals.

Some indicators established globally for the evaluation of scientific performance might not be adequate for a fair or realistic assessment of certain research scenarios. Scientific output indicators based on mainstream publication in international journals should not be taken as the only bibliometric indicators for the evaluation of applied research in developing countries, where publication in national journals in the local language is the norm. Disciplinary considerations are paramount. For researchers in the social sciences and humanities, monographs and books are important dissemination channels for research results. Technological research results are published mainly in conference proceedings, reports, and patents, and are better represented in this type of gray literature than in mainstream journals. The output of technological and innovation research, in many cases, is not written up as such but appears as designs, applications, models, or know-how. In these instances, literature-based indicators clearly have little meaning.

An important consideration in any exercise of institutional evaluation is that results and recommendations to policymakers should have the general acceptance of the researchers concerned. Consequently, scientists and research managers should be included in the team responsible for the planning, execution, and analysis of the research activity. Without the involvement of these key players, the evaluation exercise is unlikely to receive validation from the other members of the research community.

Institutional evaluation should be a continuous process. Ideally, procedures should be in place for the systematic monitoring of research performance and other fundamental scholarly activities. To accomplish this, institutions should develop their own data system and make it available through the local intranet. In this way, information is continually available for consultation by academic staff and other internal users, and it also provides the raw data for the periodic generation of the bibliometric and other scientometric indicators required for evaluation exercises. In practice, most evaluations are focused on the short term, often covering only three or four years. This is understandable, since results would otherwise span too long a period to be useful for science managers. Nonetheless, their ultimate value can be measured only over the medium and long term.

In institutional evaluation exercises, scientific output and impact are related to input measures, such as research expenditure and the number and categories of academic staff. When carrying out comparative studies, other factors are considered, such as differences in the institutional academic and administrative structures, educational models, etc. Consequently, before deciding upon the procedure for collecting bibliometric data, it is necessary to consider the internal institutional research structure. While the research administration of many universities follows the traditional departmental structure, the increase in multi- and interdisciplinary research, often organized in a program structure, has given rise to research groups formed by members of different departments. Research groups, rather than individual scientists, are today targeted for the allocation of research funds. For this reason, the research group is the most common unit for bibliometric analysis in institutional evaluations. This in turn has produced a wave of interest in scientometric research focusing on the identification of research groups by coauthor analysis and its corroboration by expert opinion. Nevertheless, the research performance of any aggregate of scientists can be assessed using bibliometrics. This aggregate is often termed a “unit”, which can be taken to represent any given set or sets of scientists depending upon the objective of the evaluation.
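As a rough illustration of how research groups might be identified by coauthor analysis, the Python sketch below links authors who have written at least a given number of papers together and treats each connected cluster as a candidate group. The function name `coauthor_groups`, the threshold `min_joint_papers`, and the sample author lists are all hypothetical choices made for this example, not a method taken from the cited reference. Any grouping produced this way would still need corroboration by expert opinion.

```python
from collections import Counter
from itertools import combinations


def coauthor_groups(papers, min_joint_papers=2):
    """Cluster authors linked by at least `min_joint_papers` shared papers.

    `papers` is a list of author lists, one per publication.
    The threshold is an illustrative assumption, not a standard value.
    """
    # Count how many papers each pair of authors has written together.
    pair_counts = Counter()
    for authors in papers:
        for a, b in combinations(sorted(set(authors)), 2):
            pair_counts[(a, b)] += 1

    # Union-find: every author starts in a group of their own.
    parent = {a: a for authors in papers for a in authors}

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # shorten the path as we go
            a = parent[a]
        return a

    # Merge the groups of every pair that meets the threshold.
    for (a, b), n in pair_counts.items():
        if n >= min_joint_papers:
            parent[find(a)] = find(b)

    # Collect authors by the root of their group.
    groups = {}
    for a in parent:
        groups.setdefault(find(a), set()).add(a)
    return sorted((sorted(g) for g in groups.values()), key=lambda g: g[0])


# Made-up author lists, for illustration only.
papers = [
    ["Ahmed", "Baker", "Chen"],
    ["Ahmed", "Baker"],
    ["Baker", "Chen"],
    ["Diaz", "Evans"],
    ["Diaz", "Evans"],
    ["Chen", "Diaz"],
]
groups = coauthor_groups(papers)
print(groups)  # [['Ahmed', 'Baker', 'Chen'], ['Diaz', 'Evans']]
```

In this sample, Chen and Diaz share only one paper, so the single collaboration does not merge the two clusters. Raising or lowering the threshold changes how readily occasional collaborations join groups together.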

While no absolute quantification of research performance is possible, valid and useful comparisons can be made between research groups working in the same fields. When making comparisons between groups it is essential to apply indicators to matched groups, comparing like with like, as far as possible, and to give careful thought to what the various indicators are actually measuring. It is also important to study not only the similarities between groups but also the differences, especially those that could be directly influencing the research performance.
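A minimal Python sketch of comparing like with like is given below. It relates output (papers) and impact (citations) to an input measure (staff numbers) and only sets groups side by side when they work in the same field. The indicator names, the record layout, and all figures are invented for illustration. Real evaluations would draw on the institution's own data system and a considered choice of indicators.

```python
from collections import defaultdict


def indicators(unit):
    """Relate output and impact to input for one unit (illustrative indicators)."""
    papers = unit["papers"]
    return {
        # Output per input: papers produced per member of academic staff.
        "papers_per_staff": papers / unit["staff"],
        # Impact per output: average citations received per paper.
        "citations_per_paper": unit["citations"] / papers if papers else 0.0,
    }


def compare_within_field(units):
    """Group units by field so that only matched groups are compared."""
    by_field = defaultdict(dict)
    for unit in units:
        by_field[unit["field"]][unit["name"]] = indicators(unit)
    return dict(by_field)


# Invented figures, for illustration only.
units = [
    {"name": "Group A", "field": "chemistry", "staff": 10, "papers": 40, "citations": 200},
    {"name": "Group B", "field": "chemistry", "staff": 5, "papers": 10, "citations": 80},
    {"name": "Group C", "field": "history", "staff": 4, "papers": 6, "citations": 12},
]
table = compare_within_field(units)

for field, groups in table.items():
    print(field)
    for name, values in groups.items():
        print(f"  {name}: {values}")
```

Here Group A publishes more per staff member while Group B is cited more per paper, which shows why it matters what each indicator is actually measuring. Group C is kept apart because its field has different publication and citation habits.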

Reference:

Russell, J.M., & Rousseau, R. (2001). Bibliometrics and institutional evaluation.

Assistant Teacher at Zinzira Pir Mohammad Pilot School and College